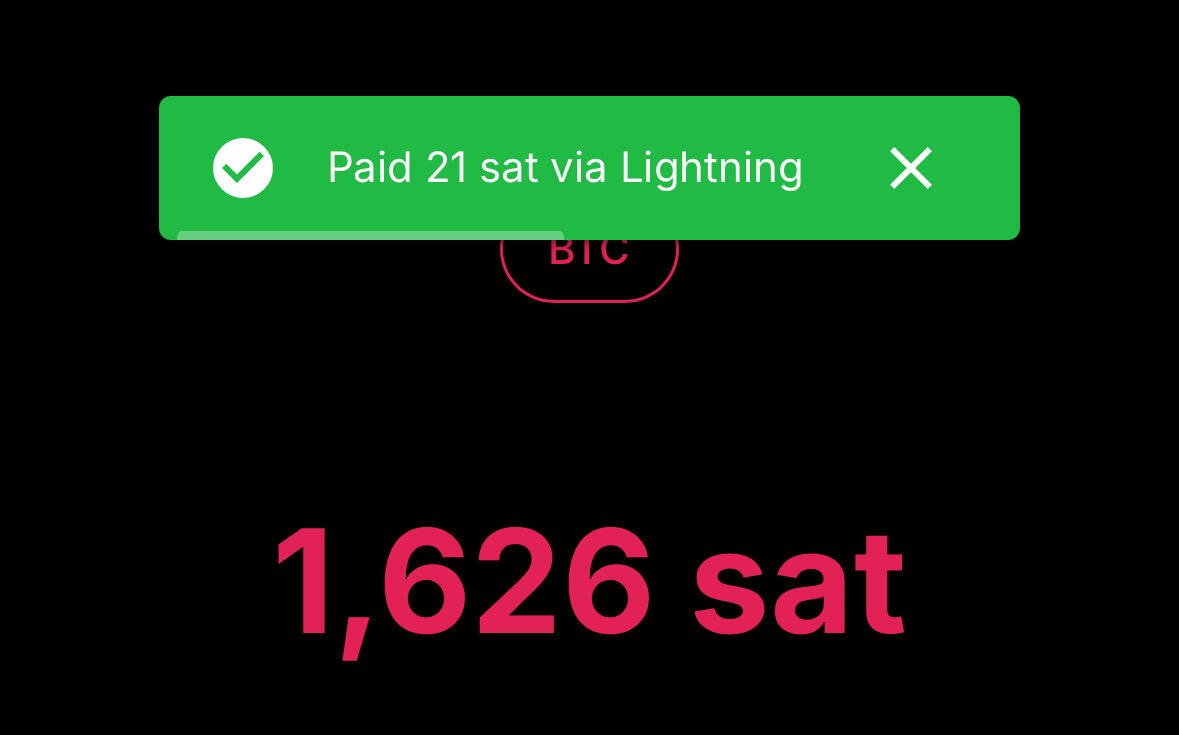

Bitch… I want to minimize and NOT have to run my own lightning infra

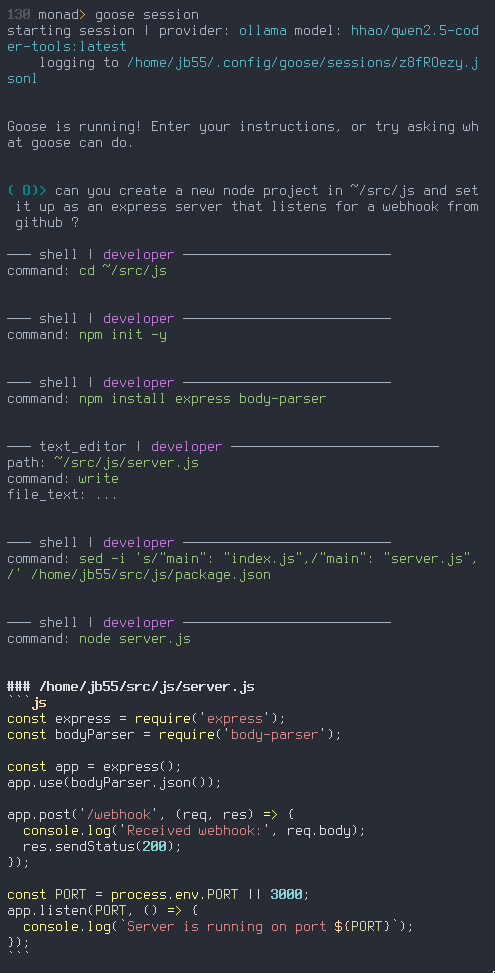

I'm running this qwen 7b param model for a local coding-ai goose agent, seems to be working ok, at least for boilerplate stuff so far.

https://ollama.com/hhao/qwen2.5-coder-tools
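For anyone who wants to poke at the same model outside of goose, here's a rough sketch of hitting a local Ollama server directly. It assumes Ollama is running on its default port (11434) and that the model was pulled under the tag `hhao/qwen2.5-coder-tools` (taken from the link above; adjust if your tag differs). The helper names `build_request` and `generate` are made up for illustration.

```python
import json
import urllib.request

# Ollama's default local endpoint; change this if your server listens elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumed model tag, copied from the ollama.com link above.
MODEL = "hhao/qwen2.5-coder-tools"


def build_request(prompt, model=MODEL):
    """Build a non-streaming /api/generate request (hypothetical helper)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model=MODEL):
    """Send the prompt and return the generated text (needs Ollama running)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server up, something like `print(generate("Write a function that reverses a string"))` should give a quick feel for how it handles boilerplate before wiring it into an agent.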

Yeah, the small models really fail compared to the SOTA paid-for ones…

Not even the 70b or 32b coding ones are passing the test. Not going to replace windsurf with it just yet… sad

Only if you tell me how you did it

I hear if sparrow can get into Debian, it can get into …. Ugh. I forget what it’s called

Wtf ….

They won’t add fkn sparrow wallet but the calculator app checks FIAT CURRENCY EXCHANGE RATES!!!!

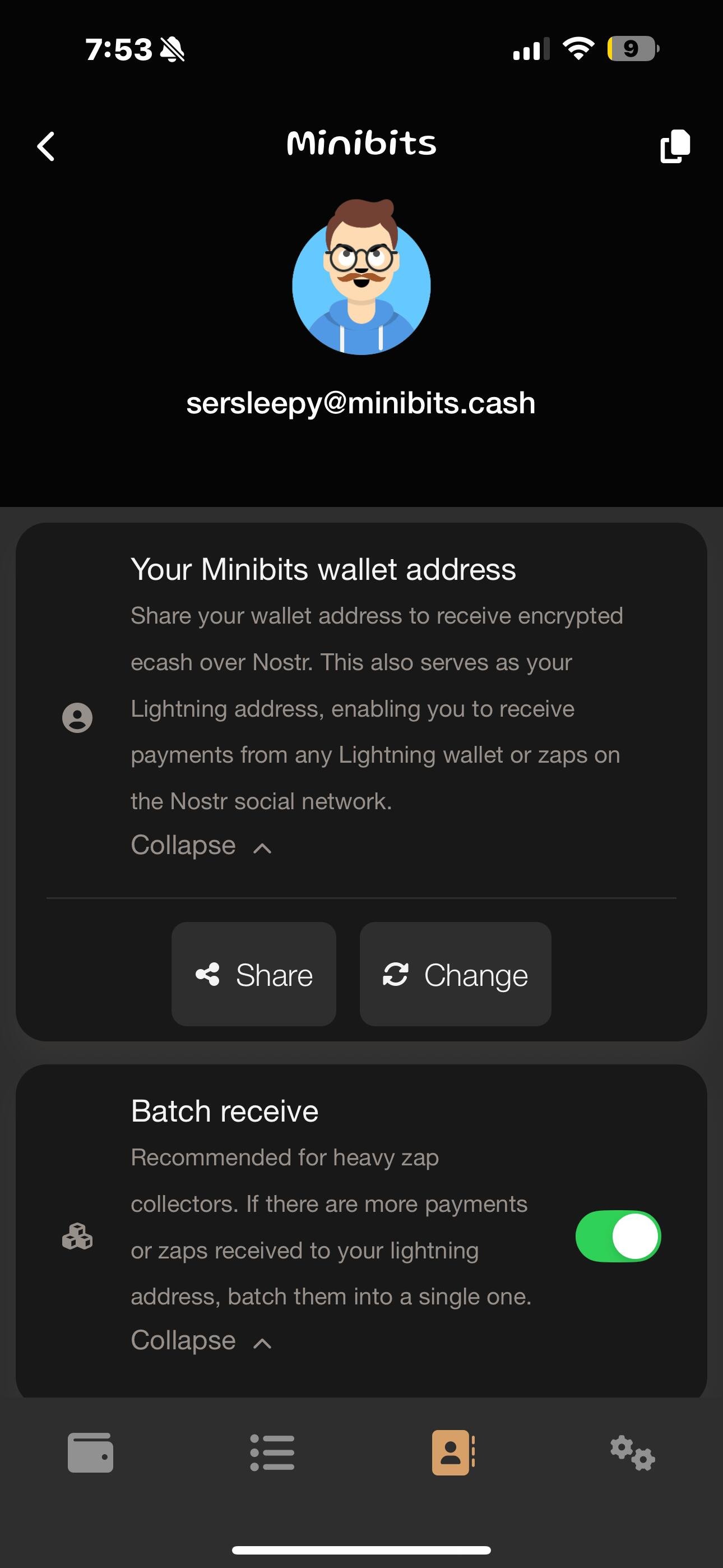

I don’t know, but I can’t wait to integrate mine

I want to be zapped by providing AI inference capability

Yes, but it fucking sucks as a development platform

They are so slow

When the fuck is version four gonna come out

I’m sorry to hear that. If there’s anything I can do to help, feel free to let me know!

dave's ui is about 250 lines of code. business logic is about the same amount of code. it's super easy to build a notedeck-based nostr app since note rendering and zapping are handled by the browser's ui toolkit

https://github.com/damus-io/notedeck/blob/master/crates/notedeck_dave/src/ui/dave.rs

https://github.com/damus-io/notedeck/blob/master/crates/notedeck_dave/src/lib.rs

we'll have more docs on how to do this soon. we're currently in the "kernel space" era of notedeck, so building apps is low level and not sandboxed, but it's super fast and not too verbose!

Bless you, sir!

You are winning 🏆

"I think the snappily titled "gemma3:27b-it-qat" may be my new favorite local model - needs 22GB of RAM on my Mac (I'm running it via Ollama, Open WebUI and Tailscale so I can access it from my phone too) and so far it seems extremely capable"

Have you tried codex yet?

https://github.com/ymichael/open-codex

This fork gets it to work with ollama