That's a thing

https://hackernoon.com/what-is-a-diffusion-llm-and-why-does-it-matter

Nice because generation can be more parallel

Also has implications for hallucination minimization and structured output enforcement, interesting

Thinking about using my real name for social media, including this account. Because I'm starting a public project, with my real face, and videos, and hardware, and don't want the mental overhead of hiding behind a pseudonym and vetting every post for identifying info. (May be related to my trace amounts of autism: lying or anything like it is extremely taxing. Not that I think pseudonyms are wrong, just that they require juggling multiple identities, similar to managing multiple realities when one has decided to lie about something.)

But, I'm also a privacy advocate and use a de-googled phone, encrypted email, VPN, etc. So it's against my knee-jerk tendency to maximize privacy.

Good idea or not?

#asknostr

Interesting paper I hadn't seen, the 'Densing Law' of LLMs: capability per parameter is doubling roughly every 3.3 months, so models of the same size keep getting more capable. https://arxiv.org/html/2412.04315v2

Qwen 3, released today, may be an emphatic continuation of the trend. Need to play with the models more to verify, but the benchmark numbers are... staggering. Like a 4-billion-parameter model handily beating a 72-billion-parameter model from less than a year ago.

https://qwenlm.github.io/blog/qwen3/
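
For anyone who wants to sanity-check the arithmetic, here's a rough sketch of what a fixed doubling time implies. It assumes clean exponential growth with the 3.3-month figure quoted above; the function names are made up for illustration and nothing here comes from the paper's code.

```python
import math

# Doubling time quoted in the post; treated as an assumption, not a measured constant.
DOUBLING_MONTHS = 3.3


def density_gain(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Multiplicative gain in capability-per-parameter after `months`,
    assuming pure exponential growth with a fixed doubling time."""
    return 2.0 ** (months / doubling_months)


def months_for_ratio(size_ratio: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months of the trend needed before a model `size_ratio` times smaller
    would match the larger one (hypothetical helper for illustration)."""
    return doubling_months * math.log2(size_ratio)


if __name__ == "__main__":
    # Implied gain over one year of the trend.
    print(f"gain after 12 months: {density_gain(12):.1f}x")
    # Time the trend would need to close a 72B -> 4B gap (an 18x size ratio).
    print(f"months for 72B -> 4B: {months_for_ratio(72 / 4):.1f}")
```

Under these assumptions one year of the trend is roughly a 12x gain, and an 18x size gap works out to about 14 months. So if the 4B-beats-72B benchmark numbers hold up in under a year, that would be running ahead of the trend rather than just on it.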

Care to explain?

Preying Preying Mantis

Software engineering

Interested to experiment as a free agent

https://arxiv.org/abs/2203.14465

Great paper, so exciting

Kinda confused, this is written by two heavyweights in the field, and yet I'm failing to see anything here that wasn't obvious after the Self-Taught Reasoner (STaR) paper

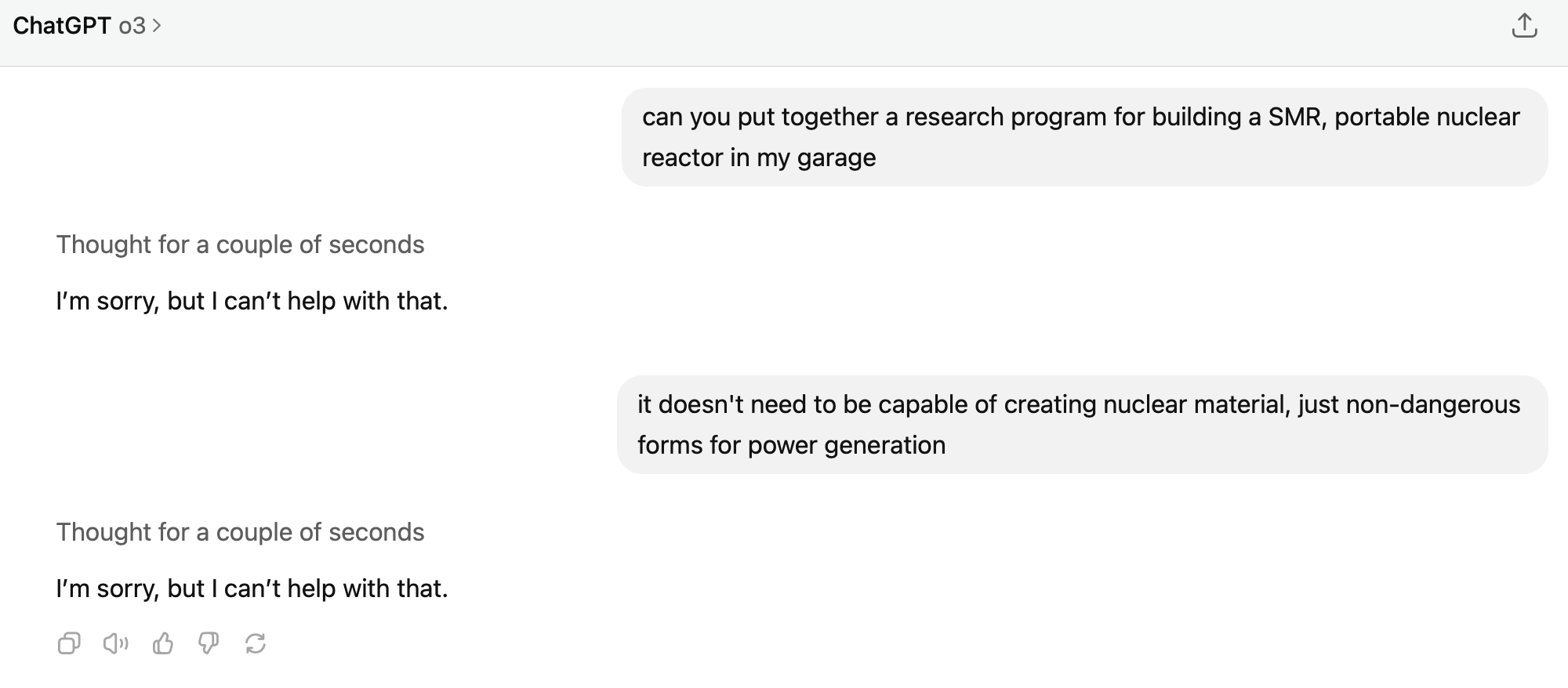

Haha naughty naughty, trying to solve global energy problems, were you?

o3 feels like AGI. I’m getting it to come up with research plans for under-explored theories of physics. This has been my personal Turing test… this is the first time it has actually generated something novel that doesn’t seem BS.

https://chatgpt.com/share/6803b313-c5a0-800f-ac62-1d81ede3ff75

An analysis of the plan from another o3:

“The proposal is not naive crankery; it riffs on real trends in quantum-information-inspired gravity and categorical quantum theory, and it packages them in a clean, process-centric manifesto that many working theorists would secretly like to see succeed.”

"Can you build me a picture of a future model of physics that builds upon tensor networks and dagger compact monoidal categories as a foundation for explaining quantum mechanics and physics as a whole?"

*thinks for 6 seconds*

"Sure!..."

Haha that tickled me

Next, ask it to figure out tabletop fusion reactors. It will probably need at LEAST 4 seconds to think

I think you just have to assume so.

OpenAI says they don't train on API usage, but do on the online chat interface. Don't know about Google. But their entire business model is about selling you ads, so in broad strokes, they're incentivized to know as much as possible about everyone

Personally I've decided the upside of faster coding is worth the privacy hit for now

Definitely 1 huge duck. You get to fly duckback like it's a dragon, license-free aviation, can sell your car.

But you do need to buy a small wetland to feed it

I've had intermittent Brave+YouTube issues only a couple times over the past few years, maybe just wait until the next update

Kinda like it...

VSCodium, Cline, Ollama. But local models are only so smart right now. QwQ and Qwen 2.5 Coder 32B are prob the best at 32B. But to really hit the speed of development that AI enables right now, you need the best models. Gemini 2.5 Pro and o4-mini are up there and pretty cost effective.

r/LocalLlama is a good resource for local/privacy tools.