Don't forget all the mass-control laws, like the baliza V16: it's like tagging a pet so they know where you are at all times.

No, I do not believe we will ever attain large-scale, fault-tolerant quantum computers that deliver practical, exponential speedup on useful problems (e.g., breaking RSA-2048, simulating arbitrary molecules, or general optimization beyond what classical supercomputers + heuristics can do).

Here is why I lean toward the skeptical side:

1. The error-correction overhead is probably fatal in practice

The best physical two-qubit gate fidelities in 2025 are still around 99.9% (superconducting) to 99.99% (trapped ions). To get one logical qubit with error rate ~10⁻¹⁵ (needed for Shor’s algorithm on 2048-bit RSA) you need roughly 1,000–10,000 physical qubits per logical qubit under today’s best codes, and millions of physical qubits in total. Every doubling of logical-qubit count still multiplies the physical-qubit requirement by roughly 10× or more. There is no credible path from today’s ~100-qubit noisy devices to the required 10⁷–10⁹ near-perfect physical qubits without breakthroughs that violate known physics.

2. Decoherence and correlated errors are not just engineering problems

Real systems have 1/f noise, cosmic-ray events, thermal fluctuations, and control crosstalk that produce strongly correlated errors across many qubits. Most theoretical fault-tolerance proofs assume independent, local, Markovian noise, conditions that are provably violated in every physical platform. When physicists model realistic correlated noise, the error-correction threshold collapses or disappears entirely (Alicia & Kalai 2018–2024 papers, and many follow-ups).

3. The “exponentially small amplitudes” problem

To factor a 2048-bit number with Shor’s algorithm, the quantum Fourier transform must resolve probability amplitudes on the order of 2⁻⁴⁰⁰⁰. That is smaller than one part in 10¹²⁰⁰. No physical instrument can distinguish a signal that small from zero, and no error-correction code can protect amplitudes that are smaller than the natural noise floor of the universe (thermal fluctuations, gravitational-wave background, etc.). This is not a temporary engineering limit; it is a hard limit from basic quantum metrology and the holographic principle.

4. History of analog computing

Every previous attempt to build scalable computers that rely on precise continuous parameters (analog computers in the 1940s–60s, optical computers in the 1980s–90s) eventually failed for exactly the same reason: noise and precision requirements scale exponentially with problem size. Quantum computers are the ultimate analog computers, and we have no example in the history of technology of an analog paradigm overtaking digital at scale.

5. What we will get instead

We already have, and will continue to improve, noisy intermediate-scale quantum (NISQ) devices and quantum-inspired classical algorithms (tensor networks, QAOA-like heuristics, etc.) that give modest speedups on some chemistry and optimization problems—maybe 10–1000× in narrow cases. That is useful, but it is not the revolutionary exponential quantum advantage that was promised.

In short: small quantum sensors, quantum networks, and specialized quantum simulators will become routine. But the dream of a universal, fault-tolerant quantum computer that obsoletes classical cryptography and simulation is, in my view, physically impossible: not just hard, not just 50 years away, but impossible with the laws of physics as we understand them.

This is obviously a minority position in the current funding environment, but it is shared by a non-trivial number of serious physicists and complexity theorists (Gil Kalai, Mikhail Dyakonov, Stephen Wolfram, Leonid Levin, and others). The evidence has only strengthened in the skeptics’ direction since about 2019.

- Grok
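The overhead numbers in point 1 and the amplitude size in point 3 can be sanity-checked with a few lines of Python. This is a sketch built on a common rule-of-thumb surface-code model, p_L ≈ A·(p/p_th)^((d+1)/2), with 2d² - 1 physical qubits per logical qubit (rotated surface code). The prefactor A = 0.1 and the threshold p_th = 1% are assumed illustrative constants, not values fitted to any device. The model also ignores magic-state distillation, routing, and decoder overhead.

```python
from fractions import Fraction

# Rule-of-thumb surface-code model (assumed constants, for illustration only):
#   p_L(d) ~ A * (p / P_TH) ** ((d + 1) / 2)
A = Fraction(1, 10)      # assumed prefactor
P_TH = Fraction(1, 100)  # assumed threshold, ~1% physical error rate


def logical_error_rate(p: Fraction, d: int) -> Fraction:
    """Estimated logical error rate for an odd code distance d (exact arithmetic)."""
    return A * (p / P_TH) ** ((d + 1) // 2)


def min_distance(p: Fraction, target: Fraction, max_d: int = 201) -> int:
    """Smallest odd distance whose estimated logical error rate meets the target."""
    if p >= P_TH:
        raise ValueError("physical error rate must be below the threshold")
    for d in range(3, max_d + 1, 2):
        if logical_error_rate(p, d) <= target:
            return d
    raise ValueError("target not reached below max_d")


def physical_per_logical(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def decimal_exponent(bits: int) -> int:
    """floor(log10(2**bits)), computed exactly from the digit count."""
    return len(str(2 ** bits)) - 1


if __name__ == "__main__":
    target = Fraction(1, 10**15)
    for label, p in [("99.9%", Fraction(1, 1000)), ("99.99%", Fraction(1, 10000))]:
        d = min_distance(p, target)
        n = physical_per_logical(d)
        print(f"{label} gates: d={d}, {n:,} physical per logical; "
              f"1,000 logical -> {1000 * n:,}, 2,000 logical -> {2000 * n:,}")
    print(f"2^-4000 < 10^-{decimal_exponent(4000)}")
```

Under these assumptions, 99.9% gates need distance 27 (1,457 physical qubits per logical qubit) to reach 10⁻¹⁵, and 99.99% gates need distance 13 (337). At a fixed distance, the physical count grows linearly with the number of logical qubits. `2 ** 4000` has 1,205 decimal digits, so 2⁻⁴⁰⁰⁰ is below 10⁻¹²⁰⁴.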

#quantum #btc #cryptography #bitcoin

On this subject, has anyone heard of Peach? (https://peachbitcoin.com/index.html)

#python 3.14 officially supports the free-threaded build, which removes the GIL
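The following is a minimal sketch for checking, from inside Python, whether you are running a free-threaded build and whether the GIL is actually off. On 3.14 the default build still has a GIL; the free-threaded interpreter is a separate build, usually installed as `python3.14t`. `sys._is_gil_enabled()` is a private helper that exists from 3.13 on, so the sketch falls back gracefully on older versions.

```python
import sys
import sysconfig


def free_threaded_build() -> bool:
    """True if this CPython was compiled without the GIL (the "t" builds)."""
    return bool(sysconfig.get_config_var("Py_GIL_DISABLED"))


def gil_enabled() -> bool:
    """True if the GIL is active in this process right now.

    sys._is_gil_enabled() was added in 3.13; older interpreters always
    run with the GIL, so fall back to True when it is missing.
    """
    check = getattr(sys, "_is_gil_enabled", None)
    return True if check is None else bool(check())


if __name__ == "__main__":
    version = sys.version.split()[0]
    print(f"Python {version}: free-threaded build={free_threaded_build()}, "
          f"GIL enabled={gil_enabled()}")
```

The two checks can disagree. A free-threaded build can still turn the GIL back on at runtime, either explicitly (`-X gil=1` or `PYTHON_GIL=1`) or automatically when it imports an extension module that has not declared free-threading support.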

You can ask for a summary of your chat and use it as the first message in your new chat, so you still keep the relevant bits but in a smaller, compacted size.
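The same trick can be automated when you drive a chat model from code. This is a hypothetical sketch: `compact_history` and `summarize` are illustrative names, not part of any real API. In practice, `summarize` would send the transcript to the model with a "summarize this conversation" request. The function folds everything except the last few messages into a single summary message.

```python
from typing import Callable

Message = dict[str, str]  # e.g. {"role": "user", "content": "..."}


def compact_history(
    messages: list[Message],
    summarize: Callable[[str], str],  # hypothetical: would call the chat model
    keep_last: int = 2,
) -> list[Message]:
    """Replace all but the last `keep_last` messages with one summary message."""
    if keep_last < 0:
        raise ValueError("keep_last must be non-negative")
    split = len(messages) - keep_last
    if split <= 0:
        return list(messages)  # nothing old enough to compact
    older, recent = messages[:split], messages[split:]
    transcript = "\n".join(f"{m['role']}: {m['content']}" for m in older)
    summary = {
        "role": "user",
        "content": "Summary of our previous chat:\n" + summarize(transcript),
    }
    return [summary, *recent]


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "Explain Knots vs Core."},
        {"role": "assistant", "content": "They differ mainly in relay policy..."},
        {"role": "user", "content": "And datacarrier?"},
        {"role": "assistant", "content": "It limits OP_RETURN outputs..."},
    ]
    # Toy summarizer standing in for a real model call.
    print(compact_history(history, lambda text: text[:60] + "...", keep_last=1))
```

`keep_last` controls the trade-off. Keeping the most recent turns verbatim preserves exact wording where it matters most, and the older context shrinks to whatever the summary retains.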

This is a very cool campaign. It makes zero sense for nostr:nprofile1qqsfhcskzx35zsnwj9rz2lz5z70z95tchd75zphzglwl8eg8k7v956cpzemhxue69uhhyetvv9ujumt0wd68ytnsw43z722mwp5 to use a shitcoin for "private" transactions. Absolutely zero. Cashu integration would be much, much better for Signal customers.

SimpleX

What many people don't understand is that Core cannot filter the segwit discount, and that datacarrier=0 has not worked since version 25.

That's why we use Knots.

https://www.wiz.io/vulnerability-database/cve/cve-2023-50428
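The "segwit discount" is simple arithmetic from BIP141. Bytes outside the witness cost 4 weight units each, witness bytes cost 1, and fee rates are charged per virtual byte (weight / 4, rounded up). `datacarrier`/`datacarriersize` only govern OP_RETURN outputs, so they never see data placed in a witness. The sketch below counts only the payload bytes and leaves out the rest of the transaction (version, inputs, envelope opcodes, etc.). The 1,000-byte payload is an arbitrary example.

```python
def tx_weight(non_witness_bytes: int, witness_bytes: int) -> int:
    """BIP141 weight: non-witness bytes count 4 WU each, witness bytes 1 WU."""
    return 4 * non_witness_bytes + witness_bytes


def vsize(weight: int) -> int:
    """Virtual size in vbytes: weight / 4, rounded up."""
    return (weight + 3) // 4


if __name__ == "__main__":
    payload = 1000  # example payload size in bytes
    in_op_return = vsize(tx_weight(payload, 0))  # data in an output script
    in_witness = vsize(tx_weight(0, payload))    # data in a witness (inscription-style)
    print(f"{payload} bytes in an OP_RETURN output: {in_op_return} vB")
    print(f"{payload} bytes in a witness:           {in_witness} vB")
```

At the same fee rate, the witness copy of the data pays about a quarter of what the OP_RETURN copy pays.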

How is btc decentralized IF we can only use one client? Torrent clients were all compatible with the torrent protocol, so what's missing with btc?

Honest question.

This 2,679-byte OP_RETURN containing a PNG file was confirmed on chain. I broadcast it using Core version 29.1 to the regular Bitcoin relay network (no out-of-band service needed). Core 30 makes no difference to the ability of a "spammer" to confirm transactions with large OP_RETURNs.

Here is the TXID: 0d5f273d09c1a4665634fd25d5a17b879d8843de5edc40b8b7d8671500dd16b6

It took a little while to be mined; I must have gained a new peer that would relay it to a miner. I broadcast it at block height 916259 and it was confirmed at 619343.

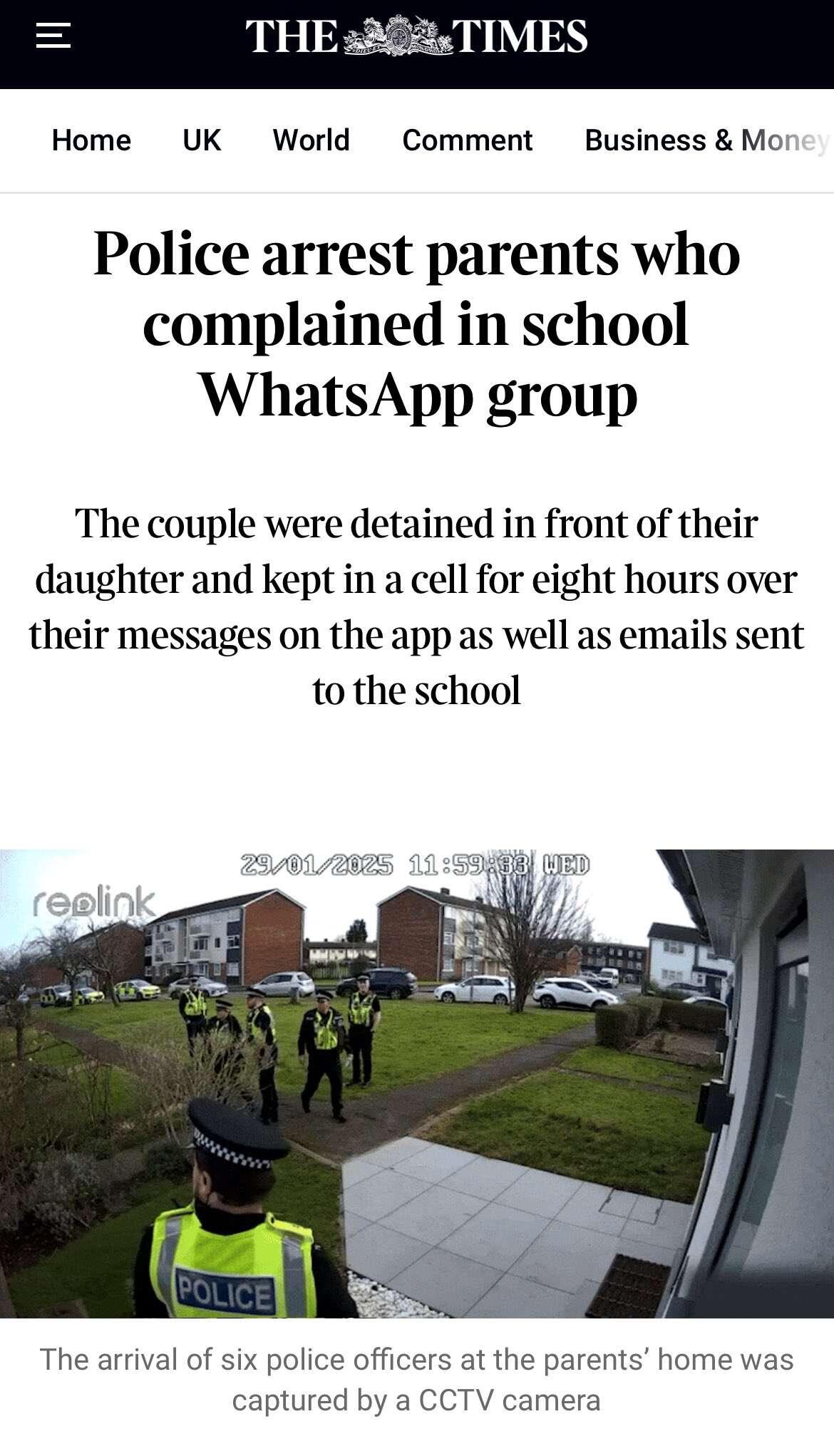

I paid less than 1 sat/vB. Knots users didn't have this transaction in their mempool, but now they store it on their node. Filters won't keep these transactions off your node. Filters do not work.

You can view it for yourself with this command (Thank you nostr:nprofile1qqsdnpcgf3yrjz3fpawj5drq8tny74gn0kd54l7wmrqw4cpsav3z5fgpzemhxue69uhk2er9dchxummnw3ezumrpdejz7qg4waehxw309aex2mrp0yhxgctdw4eju6t09uq3jamnwvaz7tmjv4kxz7fwwdhx7un59eek7cmfv9kz74rw7n6):

bitcoin-cli getrawtransaction 0d5f273d09c1a4665634fd25d5a17b879d8843de5edc40b8b7d8671500dd16b6 true | jq -r '.vout[0].scriptPubKey.asm' | cut -d ' ' -f2 | xxd -r -ps | base64 -d > filters.png
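The one-liner above pulls the hex pushed after `OP_RETURN` out of the first output, turns the hex into bytes, and base64-decodes those bytes into the PNG. For a transaction that is already mined, `getrawtransaction` only finds it if the node runs with `-txindex=1` or you pass the block hash as a third argument. Below is a sketch of the same steps in Python. It operates on the JSON that `bitcoin-cli getrawtransaction <txid> true` prints, and the function names are illustrative.

```python
import base64

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def op_return_payload(tx: dict, vout_index: int = 0) -> bytes:
    """Bytes pushed after OP_RETURN in tx["vout"][vout_index].

    Mirrors `jq '.vout[0].scriptPubKey.asm' | cut -d ' ' -f2 | xxd -r -ps`:
    the asm string looks like "OP_RETURN <hex>".
    """
    asm = tx["vout"][vout_index]["scriptPubKey"]["asm"]
    opcode, _, rest = asm.partition(" ")
    if opcode != "OP_RETURN" or not rest:
        raise ValueError(f"output {vout_index} is not an OP_RETURN with data")
    return bytes.fromhex(rest.split(" ")[0])


def decode_png(tx: dict) -> bytes:
    """Base64-decode the payload (like `base64 -d`) and check the PNG signature."""
    image = base64.b64decode(op_return_payload(tx))
    if not image.startswith(PNG_SIGNATURE):
        raise ValueError("payload is not a PNG")
    return image
```

To use it, load the RPC output with `json.load` and write `decode_png(tx)` to `filters.png` in binary mode.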

Here are my node settings that allowed me to send this transaction and get it relayed (and mined):

minrelaytxfee=0.00000100

mempoolminfee=0.00000100

incrementalrelayfee=0.00000100

datacarriersize=100000
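Bitcoin Core expresses fee options such as `minrelaytxfee` and `incrementalrelayfee` in BTC per kvB (1,000 virtual bytes). So `0.00000100` works out to 0.1 sat/vB, a tenth of the long-standing 1 sat/vB default floor. The sketch below does that conversion. It also computes a fee for a hypothetical transaction size, rounding up to whole satoshis as an illustrative assumption rather than Core's exact rule.

```python
from decimal import ROUND_CEILING, Decimal

SATS_PER_BTC = Decimal(100_000_000)


def btc_per_kvb_to_sat_per_vb(rate: str) -> Decimal:
    """Convert a bitcoin.conf fee value (BTC per 1,000 vbytes) to sat/vB."""
    return Decimal(rate) * SATS_PER_BTC / 1000


def fee_sats(vsize_vb: int, sat_per_vb: Decimal) -> int:
    """Fee for vsize_vb vbytes at sat_per_vb, rounded up (assumed rule)."""
    return int((sat_per_vb * vsize_vb).to_integral_value(rounding=ROUND_CEILING))


if __name__ == "__main__":
    rate = btc_per_kvb_to_sat_per_vb("0.00000100")
    print(f"0.00000100 BTC/kvB = {rate.normalize():f} sat/vB")
    vsize_vb = 2800  # hypothetical transaction size in vbytes
    print(f"{vsize_vb} vB at {rate.normalize():f} sat/vB -> {fee_sats(vsize_vb, rate)} sats")
```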

If you are running Knots because you believe it will prevent "spam", you are being fooled by Mechanic. The only way to keep this type of transaction off your node is to fork #Bitcoin consensus rules.

I'm on Knots cuz I lost trust in the Core team; no tech arguments matter anymore.

Define #honeypot in one pic #google

I also have qualms about calling a system designed, evolved and run by humans: deterministic.

If you know both great. If you only know one or neither then what? Who do you trust?

I'm ignorant on the subject: why Knots, why Core, no idea. But after your post I downloaded and installed Knots. Guess I'm just an ignorant, emotional idiot after all... Good to know for sure.

I don't disagree, but where would you put Milei? Assuming his movement continues after he leaves office.

No comments

#spain #outage #apagon