Minimum UTXO size is not a very consequential problem if you don't have to store them in perpetuity even when they're spent.

Why will Monero need ecash? Ultimately, what any long-term viable cryptocurrency needs is the ability to purge all historical data (everything except the set of unspent outputs). Until then, Monero has an adaptive block size, so for now everything is fine.
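To make "adaptive block size" a little more concrete, here is a minimal sketch of the block reward penalty as we understand Monero's rule. A block larger than the median size of recent blocks loses part of its base reward, quadratically in how far it exceeds the median, and a block over twice the median is invalid. This simplified version ignores the long-term median and the minimum penalty-free zone, and the function name is hypothetical.

```python
def penalized_reward(base_reward: float, block_size: int, median_size: int) -> float:
    """Reward a miner keeps for a block of `block_size` bytes, given the median
    size of recent blocks. Simplified: no long-term median, no penalty-free floor."""
    if median_size <= 0:
        raise ValueError("median_size must be positive")
    if block_size <= median_size:
        return base_reward  # no penalty at or below the median
    if block_size > 2 * median_size:
        raise ValueError("a block larger than twice the median is invalid")
    excess = block_size / median_size - 1.0  # fraction above the median, in (0, 1]
    return base_reward * (1.0 - excess ** 2)


# A block 50% over the median forfeits a quarter of its base reward.
print(penalized_reward(100, 150_000, 100_000))
```

Because the penalty grows quadratically, a miner only includes extra transactions when their fees outweigh the reward lost, which is what lets the size limit float with demand.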

Does Fountain let you use external NIP-46 signing devices to log in? Because I'm not giving my nsec to a piece of closed-source software.

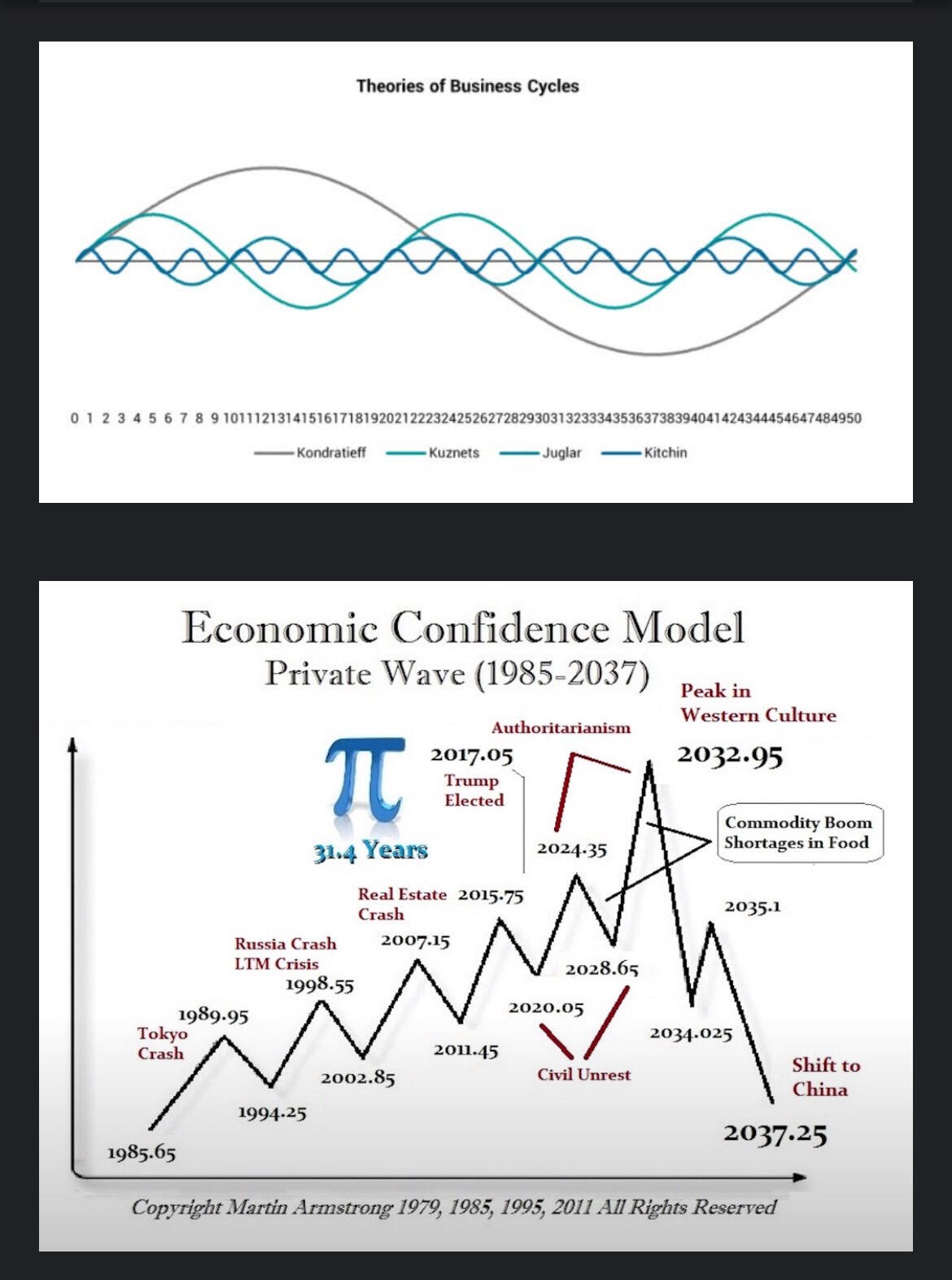

I see the labels on the second image. Where's the causation? What does the model itself tell us? Why is pi there? All I see is a meaningless infographic.

And the first image... I know what the business cycle is. What are the numbers along the graph? What are the individual periods? They appear to be labelled in another language.

Yeah, so subaddresses work like the addresses an HD wallet derives from a BIP39 seed: they're deterministically generated from your wallet's private keys. For a wallet to notice a transaction sent to one, it has to know that subaddress exists. Wallets usually auto-generate a batch of them ahead of time and check against those, but if you've created thousands, the wallet won't know to look that far ahead and you have to tell it to.
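The lookahead problem can be illustrated with a toy model. This is not Monero's real derivation, which uses the wallet's private view key and elliptic-curve math; here SHA3-256 over a hypothetical secret and the (account, index) pair stands in for it. The point is only that the wallet recognizes payments by precomputing a table of subaddresses it might have handed out, so a payment to an index beyond that window goes unseen until the window is extended.

```python
import hashlib


def toy_subaddress(secret: bytes, account: int, index: int) -> str:
    """Hypothetical stand-in for subaddress derivation: deterministic in the
    secret and the (account, index) pair. NOT Monero's actual scheme."""
    data = secret + account.to_bytes(4, "little") + index.to_bytes(4, "little")
    return hashlib.sha3_256(data).hexdigest()[:16]


def build_lookahead(secret: bytes, accounts: int, per_account: int) -> dict:
    """Precompute address -> (account, index) for the window the wallet scans."""
    return {
        toy_subaddress(secret, a, i): (a, i)
        for a in range(accounts)
        for i in range(per_account)
    }


def scan(outputs: list, table: dict) -> list:
    """Return the (account, index) of every output that hits the lookahead table."""
    return [table[addr] for addr in outputs if addr in table]


secret = b"hypothetical wallet secret"
# Payments to subaddress 5 and subaddress 1500 of account 0.
outputs = [toy_subaddress(secret, 0, 5), toy_subaddress(secret, 0, 1500)]

small = build_lookahead(secret, accounts=1, per_account=200)
print(scan(outputs, small))  # only index 5 is found

large = build_lookahead(secret, accounts=1, per_account=2000)
print(scan(outputs, large))  # both are found once the window is extended
```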

Yeah, it is mostly feature complete, which is why this is a silly problem. You update your IPFS version, the software that relies on it says it needs an older version, and the software has to be updated just to change the required IPFS version, even though it can probably already work with the new one.
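One common cause of this pattern is a dependency check that demands an exact version instead of a compatible range. The sketch below contrasts the two policies with hypothetical version numbers. It assumes the dependency follows semantic versioning with a major version of at least 1; under semver, pre-1.0 releases may break on minor bumps, so real checks for 0.x libraries are stricter than this.

```python
def parse(version: str) -> tuple:
    """Turn '1.6.2' into (1, 6, 2)."""
    return tuple(int(part) for part in version.split("."))


def exact_pin_ok(required: str, installed: str) -> bool:
    """Strict policy: only the exact version the software was built against."""
    return parse(required) == parse(installed)


def semver_ok(required: str, installed: str) -> bool:
    """Caret-style policy for versions >= 1.0.0: same major version and at
    least the required minor/patch."""
    req, inst = parse(required), parse(installed)
    return inst[0] == req[0] and inst >= req


# Hypothetical versions: the app was built against 1.4.0, the user has 1.6.2.
print(exact_pin_ok("1.4.0", "1.6.2"))  # False: rejected despite likely being compatible
print(semver_ok("1.4.0", "1.6.2"))     # True
print(semver_ok("1.4.0", "2.0.0"))     # False: a major bump may break the API
```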

Well, it's the only way for the Monerujo side of Garnet to connect note IDs with transactions. It's not really a problem in itself, but there have been cases where a wallet has trouble syncing subaddresses that are very far ahead because it doesn't look far enough to see them. So you import your seed elsewhere, a transaction doesn't show up, and you have to manually tell the wallet to look further ahead for it.

"Mining's huge power consumption will send Texans' power bills through the roof," Bitcoin detractors screamed.

According to nostr:npub1hxwmegqcfgevu4vsfjex0v3wgdyz8jtlgx8ndkh46t0lphtmtsnsuf40pf, over the past year the mining load in Texas has increased by 30 percent while the price of electricity has dropped by 80 percent.

We often underestimate how Bitcoin's real incentive lies in its intrinsic link to electricity.

That's because they refuse to understand economics. Not that they don't understand, but they actively refuse.

You show them this, show them they were wrong. They'll scratch their heads. You then explain to them why this happened. Do you think they're going to update their mental model of how things work? No, because they don't want to believe your reasoning. Tomorrow they'll make predictions based on their old model, be wrong again, and still pretend this is some unexplainable quirk rather than a failure of their model of how the world works, never updating it. There's nothing scientific about how they approach understanding the world.

If someone were to build a relay that required payment to fetch messages, you could monetize that way.
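A rough sketch of what "payment to fetch" could look like at the protocol level: the relay answers a NIP-01 `REQ` with stored events only if the requesting pubkey has paid, and otherwise refuses the subscription with a `CLOSED` message. Everything about payment is hypothetical here, authentication (for example via NIP-42) is assumed to have already happened, and events are simplified dicts rather than full signed Nostr events.

```python
import json


class PaidRelay:
    """Toy relay that serves REQ subscriptions only to pubkeys that have paid.

    Assumptions (hypothetical, not any real relay's code): the connection is
    already authenticated, payments are tracked in a simple set, and events
    are simplified dicts with just a "kind" field and content.
    """

    def __init__(self, events: list):
        self.events = events
        self.paid = set()  # pubkeys with an active paid subscription

    def record_payment(self, pubkey: str) -> None:
        self.paid.add(pubkey)

    def handle(self, message: str, authed_pubkey: str) -> list:
        msg = json.loads(message)
        if not msg or msg[0] != "REQ" or len(msg) < 3:
            return [json.dumps(["NOTICE", "only REQ is handled in this sketch"])]
        sub_id, filters = msg[1], msg[2:]
        if authed_pubkey not in self.paid:
            # Refuse the subscription with a machine-readable reason prefix.
            return [json.dumps(["CLOSED", sub_id, "restricted: payment required"])]
        replies = []
        for event in self.events:
            # An event matches if any filter matches; only "kinds" is checked here.
            if any("kinds" not in f or event["kind"] in f["kinds"] for f in filters):
                replies.append(json.dumps(["EVENT", sub_id, event]))
        replies.append(json.dumps(["EOSE", sub_id]))  # end of stored events
        return replies


relay = PaidRelay([{"kind": 1, "content": "hello"}])
req = json.dumps(["REQ", "sub1", {"kinds": [1]}])
print(relay.handle(req, "alice-pubkey"))  # CLOSED: alice hasn't paid yet
relay.record_payment("alice-pubkey")
print(relay.handle(req, "alice-pubkey"))  # the matching EVENT, then EOSE
```

Publishing can stay free in this design; only reads are gated, which is the monetization lever the note describes.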

Here's a pretty good example of a really silly problem that is common with IPFS: https://github.com/mrusme/superhighway84/issues/38. If you integrate IPFS into your software, you run into all kinds of silly compatibility issues whenever IPFS updates, issues that a library really shouldn't have. That one issue is interesting, but if you look at the project's other issues, open and closed, you'll find a bunch that are just caused by IPFS doing silly shit.

Just go to their website and look: https://protocol.ai/. It's a bunch of hype buzzwords (AI, neurocomputing...). It seems they're just following the noise of the day.

If they want to do that, yes.

What's there to think about it? There's not enough information in the graphics to tell me anything about what they say.

Wow! Never would have thought that we would not have a democratic process on Nostr. I find it amusing that a few elitists get to decide where the next unconference is even though the overwhelming support was for Australia.

Why even bother asking if you were going to ignore us anyways!

nostr:npub16vrkgd28wq6n0h77lqgu8h4fdu0eapxgyj0zqq6ngfvjf2vs3nuq5mp2va nostr:npub1qny3tkh0acurzla8x3zy4nhrjz5zd8l9sy9jys09umwng00manysew95gx

Why would you never think that we would not have a democratic process on Nostr? What gave you the impression that we would? Have we ever?

I'm not outraged. I think it's great. The more the state tries to control its people, the more people will move their behaviors outside its reach.

BCH has "instant" finality in the sense that there's no replace-by-fee, so transactions are treated as trustworthy at 0 confirmations. There are trade-offs here, and I'm not exactly a fan of it, but it is one way to do it.
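The difference between the two mempool policies can be sketched with a toy model: under a first-seen rule a node keeps the first transaction it sees spending an output and rejects a later double spend, while under replace-by-fee a conflicting transaction paying a higher fee evicts the original. This is node policy only; nothing stops a miner from including the other transaction, which is part of the trade-off. The classes are hypothetical, and real RBF rules (BIP125 and later policies) have more conditions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    txid: str
    inputs: frozenset  # outpoints this transaction spends
    fee: int


class Mempool:
    """Toy mempool contrasting the two relay policies.

    rbf=False: first-seen rule; a later transaction that spends an outpoint
    already spent in the mempool is rejected.
    rbf=True: a conflicting transaction paying a strictly higher fee than
    everything it conflicts with replaces them.
    """

    def __init__(self, rbf: bool):
        self.rbf = rbf
        self.txs = {}

    def accept(self, tx: Tx) -> bool:
        clashing = [t for t in self.txs.values() if t.inputs & tx.inputs]
        if clashing and not (self.rbf and all(tx.fee > t.fee for t in clashing)):
            return False  # double spend rejected
        for t in clashing:
            del self.txs[t.txid]  # only reached under RBF
        self.txs[tx.txid] = tx
        return True


pay = Tx("pay-merchant", frozenset({"utxo:0"}), fee=1_000)
double_spend = Tx("pay-myself", frozenset({"utxo:0"}), fee=5_000)
for rbf in (False, True):
    pool = Mempool(rbf)
    pool.accept(pay)
    pool.accept(double_spend)
    print("rbf" if rbf else "first-seen", sorted(pool.txs))
```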

The issue of finality is a very complex one. Technically, no blockchain ever has 100% finality. Practically speaking, finality is probabilistic: the chance that a transaction gets reversed drops with every block built on top of it, and depending on network security, practical finality is reached after a handful of blocks. In XMR that's currently deemed to be 10 blocks. This is true regardless of proof of work or proof of stake; it's a fundamental problem of achieving consensus in a decentralized way. Proof of stake could actually weaken finality, because it rewards holders rather than external expenditure, and so trends towards oligarchic centralization, enabling a small number of nodes to collude to break finality for their own benefit. If staking nodes decide to do this, there's no way to fork away from them, since they will hold the same number of coins on the fork and can continue the attack. With PoW, by contrast, you can change the hash algorithm (even a single bit flip will do) and boot the attackers permanently.
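The probabilistic nature of PoW finality can be made concrete with the calculation from section 11 of the Bitcoin whitepaper: the probability that an attacker controlling a fraction q of the hashrate ever catches up from z blocks behind. It assumes honest miners keep extending the longest chain; plugging in z = 10 is only illustrative and not a statement about Monero's actual security margin.

```python
import math


def attacker_success_probability(q: float, z: int) -> float:
    """Probability that an attacker with fraction q of the hashrate ever
    overtakes the honest chain from z blocks behind (Bitcoin whitepaper, s. 11)."""
    p = 1.0 - q
    if q >= p:
        return 1.0  # a majority attacker eventually catches up
    lam = z * (q / p)  # expected attacker progress while z honest blocks are mined
    total = 1.0
    for k in range(z + 1):
        poisson = math.exp(-lam)
        for i in range(1, k + 1):
            poisson *= lam / i  # Poisson probability that the attacker has k blocks
        total -= poisson * (1.0 - (q / p) ** (z - k))
    return total


for z in (0, 1, 2, 6, 10):
    print(z, f"{attacker_success_probability(0.1, z):.7f}")
```

For q = 0.1 this reproduces the whitepaper's table: about 0.2046 at z = 1, falling to roughly 1.2e-6 at z = 10. The probability never reaches zero, which is why finality is only ever practical, not absolute.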

Monero will never adopt proof of stake. There are very good reasons for this that we could get into if you're interested, otherwise I'll just focus on finality time because that's the core topic you're talking about.

Proof of stake would not improve finality or block times at all. In Monero, newly received outputs have a 10-block lock time so that they can't disappear in a reorg, and proof of stake would not change this. That lock is the main time constraint on spending coins you recently received. It is a pain, but there are good reasons for it. Research to eliminate the requirement is ongoing, and there are always discussions about changes to the cryptography and signature scheme that would remove it, but so far every proposal has had drawbacks and the community hasn't reached consensus on a trade-off. Eliminating the lock is very popular and people want it, but if doing so reduces security, it's not a good idea.
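In wall-clock terms, the 10-block lock can be sketched with simple arithmetic, assuming Monero's two-minute target block time and assuming an output unlocks once the chain is 10 blocks past the block it was mined in. The exact off-by-one convention in wallet code may differ, and the function names are hypothetical.

```python
SPENDABLE_AGE = 10        # blocks, per the text above
BLOCK_TIME_SECONDS = 120  # Monero's two-minute target block time


def blocks_until_spendable(output_height: int, chain_height: int) -> int:
    """Blocks left before an output mined at `output_height` can be spent,
    assuming it unlocks once the chain is SPENDABLE_AGE blocks past it."""
    return max(0, output_height + SPENDABLE_AGE - chain_height)


def minutes_until_spendable(output_height: int, chain_height: int) -> float:
    """Expected wait in minutes, assuming blocks arrive on target."""
    return blocks_until_spendable(output_height, chain_height) * BLOCK_TIME_SECONDS / 60


# Funds received in block 3_000_000 while the chain is at 3_000_003.
print(blocks_until_spendable(3_000_000, 3_000_003))
print(minutes_until_spendable(3_000_000, 3_000_003))
```

So a freshly received output typically waits about 20 minutes. A consensus change would not shorten this unless the lock itself is removed.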

Block time is a constraint designed to limit orphaned blocks: nodes need to receive and validate the new chain tip before the next block is mined. This constraint is imposed by network latency, not by mining.
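A common back-of-the-envelope model makes the latency argument explicit. If block discovery is a Poisson process with mean interval T, the chance that a competing block appears while a new block is still propagating for d seconds is about 1 - exp(-d/T). This is a simplification that ignores network topology and validation cost, and the 2-second delay below is an assumed figure.

```python
import math


def orphan_probability(propagation_delay_s: float, block_interval_s: float) -> float:
    """Approximate chance that a competing block is found while a new block
    is still propagating, modelling block discovery as a Poisson process:
    P = 1 - exp(-delay / interval)."""
    return 1.0 - math.exp(-propagation_delay_s / block_interval_s)


# Assumed 2 s propagation delay at several block intervals (seconds).
for interval in (600, 120, 30, 5):
    print(interval, round(orphan_probability(2.0, interval), 4))
```

The orphan rate stays small while the block interval is much longer than the propagation delay, and climbs quickly as the interval shrinks toward it, regardless of how blocks are produced.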

> Well you are just copying from my kind 0 when my kind 0 updates. And that is a lot of copying and republishing overhead.

Yes, but it's not really more overhead than the original NIP-02 spec had (besides p tags having more than one relay). If you don't want the relays in kind 3 p tags to rot, you have to update them frequently anyway, and this solves bootstrapping as well.

> And yet you still have to find my kind 0 to do that, which was the original problem.

Yes, but I'm much less likely to fail now that I know the p tag for you in my kind 3 will very likely list at least one valid relay.

> Unless you are saying that you would look into a bunch of other people's kind 3 lists to see if any of them know where I have moved to.

Yes. If you can't find a valid relay that has my kind 0 or 10002 from my p tag in your kind 3, then try one of my followers' kind 3, then the next one, checking relays you haven't tried before, and so on. That's only for when your list of my relays has rotted into oblivion; it's a last resort. If you *never* find a valid relay that has my kind 0 or 10002, then the network is almost certainly completely split somehow.
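The fallback search described above can be sketched as follows. It assumes the proposed (non-standard) multi-relay p tag `["p", <pubkey>, <relay1>, <relay2>, ...]`, and `fetch(relay, pubkey)` is a hypothetical network call that returns the target's kind 10002 (or kind 0) event or `None`. Pubkeys are shortened to names for readability.

```python
from itertools import chain
from typing import Callable, Iterable, Optional


def relays_for(contact_list: dict, target: str) -> list:
    """Relay URLs listed for `target` in a kind 3 event's p tags.

    Assumes the proposed format ["p", <pubkey>, <relay1>, <relay2>, ...].
    Standard NIP-02 has one relay hint followed by a petname, so anything
    that isn't a ws:// or wss:// URL is skipped.
    """
    for tag in contact_list.get("tags", []):
        if len(tag) >= 2 and tag[0] == "p" and tag[1] == target:
            return [u for u in tag[2:] if u.startswith(("wss://", "ws://"))]
    return []


def find_relay_list(
    target: str,
    my_contacts: dict,
    followers_contacts: Iterable[dict],
    fetch: Callable[[str, str], Optional[dict]],
) -> Optional[dict]:
    """Look for `target`'s relay list starting from my own kind 3, then fall
    back to other followers' kind 3 events one at a time, never querying the
    same relay twice."""
    tried = set()
    for contacts in chain([my_contacts], followers_contacts):
        for relay in relays_for(contacts, target):
            if relay in tried:
                continue
            tried.add(relay)
            event = fetch(relay, target)
            if event is not None:
                return event
    return None  # never found: the network is probably split somehow


# Simulated network: only wss://relay.example.org still has Bob's relay list.
network = {("wss://relay.example.org", "bob"): {"kind": 10002, "tags": [["r", "wss://relay.example.org"]]}}
mine = {"kind": 3, "tags": [["p", "bob", "wss://dead.example.com"]]}
others = [{"kind": 3, "tags": [["p", "bob", "wss://dead.example.com", "wss://relay.example.org"]]}]
print(find_relay_list("bob", mine, others, lambda relay, pk: network.get((relay, pk))))
```

Followers' contact lists are consumed lazily, so a client only pulls more kind 3 events when the cheaper sources have failed.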

> So what you are doing is creating a bunch of redundancy of my kind 0 information distributed all around the place. We could achieve the same thing more simply by just having relays copy/push people's kind 0 (or 10002) information to all the other relays they know about once they first discover a new version... a kind of relay-to-relay propagation... or even have clients blastr the thing in the same way.

But there's no method to that; you just hope the network isn't fragmented. That approach is less robust, and you're burdening relays that have nothing to do with the people involved, hoping they won't just drop these events. It seems to me this is far more duplication overhead, because even relays that otherwise don't care have to be trusted to store and relay kind 10002 events, for (I don't know how much) less robustness. This way, you only publish your kind 0 or 10002 to your own relays, no blastring required. Just changing kind 3 p tags to allow multiple relays would make this work; everything else is just client behavior, assuming you use kind 10002 rather than changing kind 0 to include that information (although the latter seems like maybe a slightly better idea).

Anyway, I just like chewing on ideas. This seems like a good idea to me, and more robust and "correct". I don't expect it to be implemented, and I'm not picking fights in the Nostr world shouting about how everything should be done my way, but it's fun to think about different ways to solve different problems, to ponder how a protocol could work differently, and how those differences might make things better or worse in different ways. Maybe you're right: maybe blasting your 10002 is practically always sufficient and isn't much of a bother to relays that are already relaying messages from every direction, maybe it's highly improbable that the network will fragment enough to make this a concern that needs a more elegant solution, and maybe the bootstrapping issue isn't really much of a centralizing force at all.

So, suppose the p tags in *my* kind 3 could each have multiple relays, like you said you tried for, which I quite like. And suppose your kind 0 event had a list of relays that your client updated every time you added or removed a relay, just like when you change your avatar. It could be kind 10002 as well, but I'm trying to simplify the protocol in my mind by rolling the contents of kind 10002 into kind 0. If my client updated the relays in your p tag in my kind 3 whenever they don't match your kind 0 (or 10002), then we don't really have a rot problem or a bootstrapping problem, right? The likelihood that your followers' p tags for you in their kind 3 are out of date is very low, and the likelihood that nobody can find your list of relays from just a couple of your followers' kind 3 events is extremely low. If clients MUST update their kind 3 p tags from your 10002 or 0 in my hypothetical scheme, then those two problems look practically solved. Not perfectly solved, I know, since there's a small chance that none of your followers lists a single relay for you that you still broadcast to, and that would therefore have an up-to-date kind 0 or 10002 event for you. To me this seems much more robust and less dirty than the brute-force hack you just told me about.
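The client behavior proposed above, rewriting my kind 3 p tag for you whenever it disagrees with your published relay list, could look roughly like this. It reads write relays from a NIP-65 kind 10002 event (`r` tags with no marker or with the `write` marker, which NIP-65 says clients should use when fetching a user's events) and assumes the hypothetical multi-relay p tag format. Petnames are not handled, and re-signing and republishing the kind 3 is left out.

```python
def write_relays(relay_list_event: dict) -> list:
    """Relays a user publishes to, from a NIP-65 kind 10002 event: 'r' tags
    with no marker (read and write) or with the 'write' marker."""
    return [
        tag[1]
        for tag in relay_list_event.get("tags", [])
        if len(tag) >= 2 and tag[0] == "r" and (len(tag) == 2 or tag[2] == "write")
    ]


def reconcile_contact_list(contacts: dict, pubkey: str, relay_list_event: dict) -> bool:
    """If the relays stored for `pubkey` in my kind 3 (hypothetical multi-relay
    p tags) differ from that user's current write relays, rewrite the tag.
    Returns True when the contact list changed and would need to be re-signed
    and republished."""
    wanted = write_relays(relay_list_event)
    for i, tag in enumerate(contacts["tags"]):
        if len(tag) >= 2 and tag[0] == "p" and tag[1] == pubkey:
            if sorted(tag[2:]) == sorted(wanted):
                return False  # already up to date
            contacts["tags"][i] = ["p", pubkey, *wanted]
            return True
    return False  # not following this pubkey


contacts = {"kind": 3, "tags": [["p", "bob", "wss://old.example.com"]]}
bob_relays = {"kind": 10002, "tags": [["r", "wss://new.example.com"], ["r", "wss://inbox.example.com", "read"]]}
print(reconcile_contact_list(contacts, "bob", bob_relays), contacts["tags"])
```

Because the check is a cheap comparison, a client could run it every time it sees a newer kind 10002 from someone you follow, which is what keeps the p tag hints from rotting.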

Garnet users, are you aware that Garnet creates a new subaddress for every single note and reply you post?

I'm thinking this will be a problem for lookahead down the road. Anyone have thoughts?

#XMR #Monero
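To put the lookahead concern in numbers: as we understand it, a wallet restored from seed only recognizes subaddresses within a fixed window past the highest index that has actually received funds (Monero wallets expose this as a subaddress lookahead setting; the default of 200 used below is our assumption). If Garnet hands out one index per note in a single account and only a few notes are ever tipped, the gaps between paid indices grow with posting volume. The sketch below models that restore scan; `restore_scan` and the tip indices are hypothetical.

```python
def restore_scan(paid_indices: list, lookahead: int = 200) -> list:
    """Subaddress indices (in one account) that a wallet restored from seed
    would discover, assuming its scan window always reaches `lookahead`
    indices past the highest index that has received funds so far."""
    found = []
    highest_used = -1  # nothing received yet: the window is indices 0..lookahead-1
    for index in sorted(paid_indices):
        if index > highest_used + lookahead:
            break  # gap wider than the window: this payment and later ones are missed
        found.append(index)
        highest_used = index
    return found


# Hypothetical: one subaddress per note, but only a few notes ever get tipped.
tipped = [3, 150, 320, 900, 950]
print(restore_scan(tipped))                  # stops before 900
print(restore_scan(tipped, lookahead=1000))  # finds all of them
```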

Also, where do you bootstrap fetching relays from? You've got to ask some relays for kind 10002 notes, so is it standard to ask the relays listed in the content of your kind 3 for your follows' 10002s? What happens if you find none? Doesn't that fracture the network?
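For reference, the bootstrap step in the question can be sketched as: read your own relays from the JSON some clients have stored in kind 3 `content` (an informal convention, not part of NIP-02 proper, which NIP-65's kind 10002 is meant to replace), then send those relays a NIP-01 `REQ` for the kind 10002 events of everyone in your p tags. The helper names are hypothetical, and which relays to ask when that content is empty is exactly the open question; this only builds the message.

```python
import json


def relays_from_kind3_content(kind3: dict) -> list:
    """Readable relays from the legacy JSON some clients put in kind 3 content,
    e.g. {"wss://relay.example.com": {"read": true, "write": true}}."""
    try:
        relays = json.loads(kind3.get("content") or "{}")
    except json.JSONDecodeError:
        return []
    if not isinstance(relays, dict):
        return []
    return [url for url, perms in relays.items() if isinstance(perms, dict) and perms.get("read", True)]


def follows(kind3: dict) -> list:
    """Pubkeys from the p tags (real events use 32-byte hex keys)."""
    return [tag[1] for tag in kind3.get("tags", []) if len(tag) >= 2 and tag[0] == "p"]


def relay_list_request(kind3: dict, sub_id: str = "relay-lists") -> str:
    """NIP-01 REQ asking for the kind 10002 relay lists of everyone I follow."""
    return json.dumps(["REQ", sub_id, {"kinds": [10002], "authors": follows(kind3)}])


my_kind3 = {
    "content": json.dumps({
        "wss://a.example.com": {"read": True, "write": True},
        "wss://b.example.com": {"read": False, "write": True},
    }),
    "tags": [["p", "bob"], ["p", "carol"]],
}
print(relays_from_kind3_content(my_kind3))
print(relay_list_request(my_kind3))
```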