https://github.com/KillianLucas/open-interpreter

#AI #OpenSource #LLM

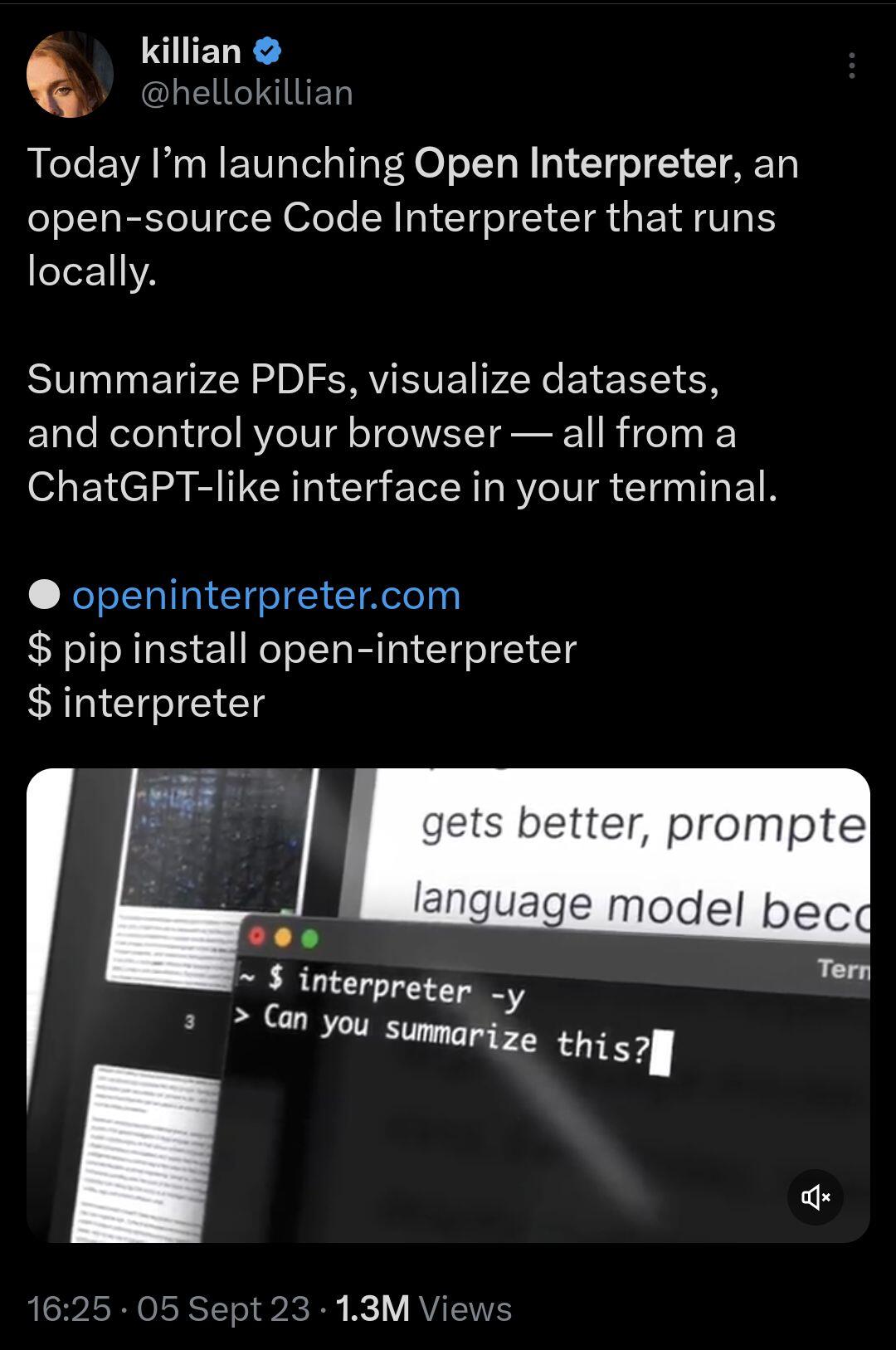

Open Interpreter

Run an open-source, local take on OpenAI's Code Interpreter with GPT-4, Code Llama, or another LLM directly in your CLI, and it can execute code for you! It can also access the internet and read documents. Definitely worth a try.

Simple and fun use-cases make it popular

RunPod accepts Bitcoin, but only through Crypto.com, so it's kind of useless. I've yet to come across a good cloud GPU service that respects privacy.

I wish I had 360 GB of VRAM/RAM to run the 13B model at 128k context 🫠

Nice to see the context window being pushed though.
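For a rough sense of why long context eats so much memory, here's a back-of-the-envelope sketch of the KV cache alone. It assumes Llama 2 13B's shape (40 layers, hidden size 5120, standard multi-head attention), fp16 values, a batch of one, and "128k" taken as 131,072 tokens. It ignores the weights and activations, so it isn't meant to reproduce the 360 GB figure above.

```python
def kv_cache_bytes(n_layers: int, hidden_size: int, context_len: int,
                   bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for a single sequence.

    Each layer stores one key vector and one value vector (each hidden_size
    wide) per token, hence the factor of 2.
    """
    return 2 * n_layers * hidden_size * context_len * bytes_per_value


# Assumed Llama 2 13B shape: 40 layers, hidden size 5120, fp16 (2 bytes/value).
per_token = kv_cache_bytes(40, 5120, 1)
full = kv_cache_bytes(40, 5120, 131_072)
print(f"per token: {per_token / 2**10:.0f} KiB")      # 800 KiB
print(f"at 131,072 tokens: {full / 2**30:.0f} GiB")   # 100 GiB
```

Because the cache grows linearly with context length, the same model at 4,096 tokens would need only about 3 GiB for it.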

Time will tell whether closed-source, high-parameter "all-in-one" models win, or whether smaller open-source models focused on more specific areas (e.g. coding, chat, reasoning) can remain competitive.

I'm certainly rooting for the "GPU poor".

Ok, I had to Google this one 😅

Are they still working on this? I'd love to see more competitors to Llama in the open source space.

nostr:npub1lj3lrprmmkjm98nrrm0m3nsfjxhk3qzpq5wlkmfm7gmgszhazeuq3asxjy I’m running Llama 2 and Code Llama locally on my laptop. Lots of fun. I think only the 7B models, though. I wonder if I could run 13B; I have 24 GB of RAM.

I really want to be able to feed it docs, PDFs, etc. Currently I'm only running it in the command line via Ollama.

You could give GPT4All a try. It has a built-in plugin that can reference local docs. I find it does a good job summarizing concepts, but it's not so great at pulling out specific information.
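If you want to experiment with the "feed it your docs" idea on top of Ollama, here's a minimal sketch of the general pattern: split the text into chunks, pick the chunks most relevant to the question, and paste them into the prompt. It assumes the text has already been extracted from your PDFs, a local Ollama server on its default port (11434), and a pulled `llama2` model. The relevance scoring is plain keyword overlap, a deliberately simple stand-in for embedding-based search; it is not how GPT4All's plugin works. The helper names are hypothetical.

```python
import json
import re
import urllib.request


def chunk_text(text: str, max_words: int = 200) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric word set, used for crude keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(chunks: list[str], question: str, k: int = 3) -> list[str]:
    """Return the k chunks sharing the most words with the question."""
    q = tokenize(question)
    return sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)[:k]


def build_prompt(context_chunks: list[str], question: str) -> str:
    """Paste the selected excerpts above the question."""
    context = "\n\n".join(context_chunks)
    return (f"Use the following excerpts to answer the question.\n\n"
            f"{context}\n\nQuestion: {question}\nAnswer:")


def ask_ollama(prompt: str, model: str = "llama2",
               host: str = "http://localhost:11434") -> str:
    """Send a non-streaming request to Ollama's /api/generate endpoint."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(f"{host}/api/generate", data=payload,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]
```

Usage would look something like `chunks = chunk_text(open("notes.txt").read())` followed by `ask_ollama(build_prompt(top_chunks(chunks, question), question))`, where `notes.txt` stands in for your extracted document.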

24 GB is sufficient to run 13B models at 4- or 8-bit quantization 👍 At 16-bit it's a tight squeeze, since the weights alone take roughly 26 GB.
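A quick way to sanity-check these numbers is to multiply the parameter count by the bits per weight. This sketch counts the weights only and assumes exactly 4, 8, or 16 bits per weight; real quantized formats add some overhead for scaling factors, and you still need room for the context (KV cache) and the OS.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in decimal GB (1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


for bits in (4, 8, 16):
    print(f"13B at {bits}-bit: ~{weight_memory_gb(13e9, bits):.1f} GB")
# 13B at 4-bit: ~6.5 GB
# 13B at 8-bit: ~13.0 GB
# 13B at 16-bit: ~26.0 GB
```

By the same estimate, a 70B model needs about 35 GB even at 4-bit.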

Context window is a bit of a hurdle to be overcome, especially for chatbots and storywriter models.

Commercial LLMs have the upper hand in the early stages, but open-source models have so much potential... And you won't have to deal with restricted access.

And the most exciting things are ahead. This space will probably look completely different in only 6 months 😂

nostr:npub1a76gf5pyqlwrsl96y6m7x80h7y2uhx5h2qcm62r63q6ppw5l68kqu87y46 is based on GPT4All (I would need to look up the exact model, and I'm not on my laptop right now).

GPT4All is a great program. I really hope they continue to expand its capabilities with more plugins.

ChatGPT is the benchmark that everyone is chasing and for good reason!

And yes, the 🦙 models are meant to be run locally on your own computer, but they require a powerful machine. You can check out huggingface.co/spaces and search for a model to try it out for free (although there might be a queue).

There seem to be at least some people on Nostr who are interested in Large Language Models.

What does everyone use right now? GPT-4? Llama 2? Bard?

I'll go first. My top model from a reasoning standpoint is Platypus 70B, which does a really good job with complex reasoning.

I've also been really liking Code Llama 34B lately, although it's not as good with complex problems.

#AI #LLM #ChatGPT

For the record, I hate the term Artificial Intelligence to describe LLMs and Diffusion models, but that is the term the industry is using 🤷

Test it by following the steps here:

This one slipped under the radar for me

Meta released SeamlessM4T, an open-source translation model that can translate text or audio across nearly 100 languages. Imagine having Google Translate running locally on your PC or smartphone 🤯

#AI #OpenSource

Awesome. I was wondering whether it was worth grabbing some extra system RAM to try a 70B model on CPU only, but it runs just as slowly as my current CPU w/ GPU offloading setup.

🤷 I'll just have to wait for used 3090s to come down in price.

Meta released Code Llama yesterday. Admittedly I'm not the best person to attest to its capabilities, but the 34B model seemed really solid compared to other open-source coding LLMs. Give it a try!

#AI #LLM #OpenSource #coding