nostr:npub1xtscya34g58tk0z605fvr788k263gsu6cy9x0mhnm87echrgufzsevkk5s nostr:npub1gcxzte5zlkncx26j68ez60fzkvtkm9e0vrwdcvsjakxf9mu9qewqlfnj5z

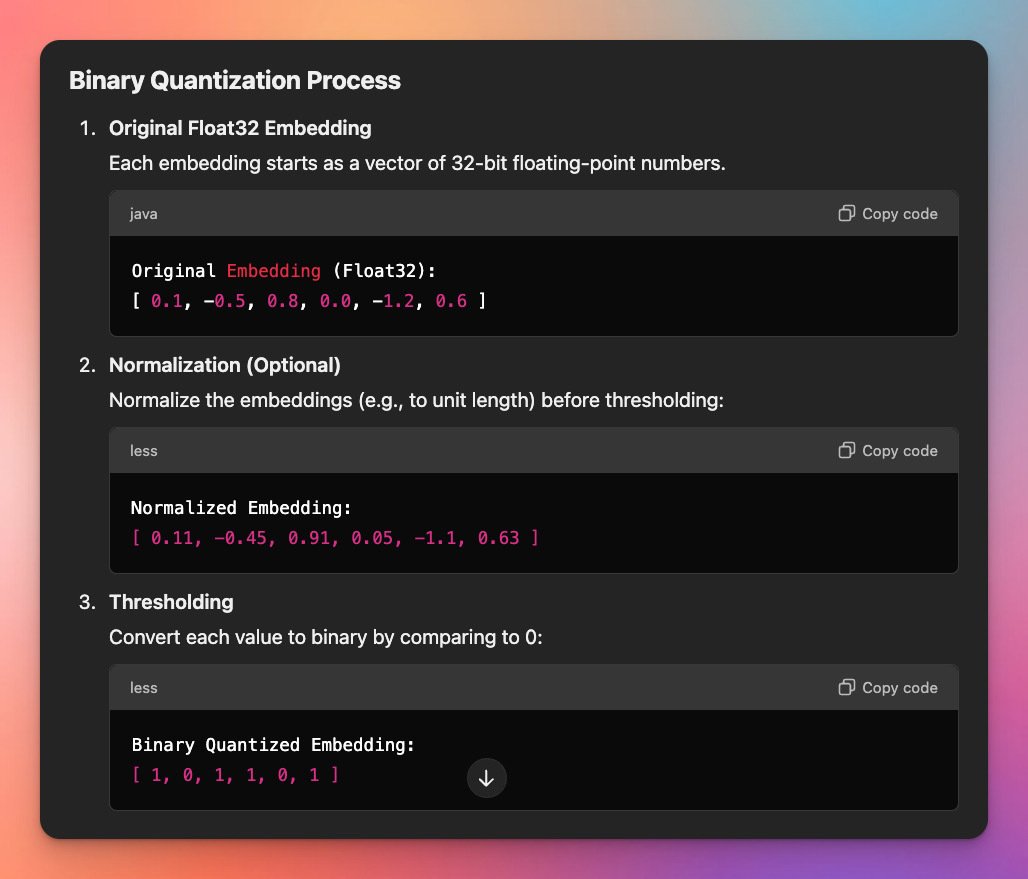

Can anyone teach me how to do this? https://emschwartz.me/binary-vector-embeddings-are-so-cool/

There is so much jargon about this stuff I don't even know where to start.

Basically I want to do what https://scour.ing/ is doing, but with Nostr notes/articles only, and expose all of it through custom feeds on a relay like wss://algo.utxo.one/ -- or if someone else knows how to do it, please do it, or talk to me, or both.

Also I don't want to pay a dime to any third-party service, and I don't want to have to use any super computer with GPUs.

Thank you very much.

Discussion

i was looking at this same article the other day, been thinking about it...

hugging face always has good blog posts too https://huggingface.co/blog/embedding-quantization#retrieval-speed

Looks simple enough. I imagine you could even go further with a sparse encoding scheme assuming there are huge gaps of 0 bits, which is probably the case for high dimensional embeddings.

curious if it’s being used anywhere yet

I hear my laptop's fans start whirring around when its making a response, I wouldn't be surprised if its doing something locally first. Either the encoding process (words to tokens) or the retrieval (finding relevant documents from a project)

retrieval maybe?

btw have you seen https://www.mixedbread.ai/blog/mxbai-embed-large-v1

wait nvm encoding should be first no? Since converting words to tokens is usually needed before retrieval unless the retrieval uses pre-computed embedding, maybe it skips straight to that? Idk

Think of how you 'hold a memory', no one else can interact with it unless you talk about it or draw it, a memory or idea needs to be conveyed somehow to the outside world. An encoding models that produce embeddings are basically half an LLM, it takes in the words/tokens and says "this string is located here", it assigns a coordinate, an address for it. That address is the context, anything with addresses nearby are more related in ideas. The second part of the LLM is the decoder, where it takes the address as a kind of starting point. The decoder uses the context of that coordinate and responds with words that are also in the right context (which is learned by training).

H.T. to nostr:npub1h8nk2346qezka5cpm8jjh3yl5j88pf4ly2ptu7s6uu55wcfqy0wq36rpev for his fantastic read of "A gentle introduction to Large Large Language Models"