Test post only to assistant relay (#3).

If correctly set up, no one should see this but me!

(On the off chance you see this, please let me know!)

So what’s all the hoopla about DeepSeek, and why is it breaking everybody’s brain in AI right now?

I’ve been doing a deep dive for a couple of days, and these are the main deets I’ve pulled together. I’ll have a Guy’s Take on it soon, so stay tuned to the nostr:npub1hw4zdmnygyvyypgztfxn8aqqmenxtwdf3tuwrd44stjjeckpc37q6zlg0q feed.

DeepSeek ELI5:

• The US has been hailed as the leader in AI, while pushing fears that we need to stay closed and not share with China, cuz evil CCP, and they can’t figure it out without us.

• ChatGPT and “Open”AI are the poster child, eating up absurd amounts of capital for training and inference (using) LLMs. Estimates say around $100 million or more for the ChatGPT o1 model.

• In just a couple of weeks, China drops numerous open-source models with incredible results: Hunyuan for video, Minimax, and now DeepSeek. All open source, all insanely competitive with the premier closed-source models in the US.

• DeepSeek R1 actually matched or surpassed ChatGPT o1 on most benchmarks, particularly math, logic, and coding.

• DeepSeek is also totally open about how its thought process works: it explains and shows its work as it runs, while ChatGPT keeps that proprietary. This makes building with, troubleshooting, and understanding DeepSeek much better.

• DeepSeek is also multimodal, so you can give it PDFs, images, connect it to the internet, etc. It’s a literal full personal assistant with just a few tools plugged into it.

• The API costs 95% LESS than the ChatGPT API per call. They claim that is a profitable price as well, while OpenAI is bleeding money.

• They state that DeepSeek cost only $5.6 million to train and operate.

• Export controls on GPUs and chips went into effect in the past year or two to prevent China from “catching up,” and they seem to have failed miserably, as China was apparently able to get 20x the results per dollar with inferior hardware.

• The US model of AI, its costs, its capex structure, and the massive demand for chips have been the model for assessing the valuation, pricing, and future demand of the entire AI industry. DeepSeek just took a giant dump on all of it by outperforming and spending a tiny fraction to achieve it, while also dealing with a lack of access to the newest chips.

All of this together is why people are freaking out: a plummet in Nvidia’s price, a reevaluation of OpenAI, the failure of the US to stay dominant, and even the legitimacy of staying proprietary, since that may just cause us to fall behind rather than lead. All this comes right after a $700 billion investment was announced, which now kinda looks like incompetent corporations wasting horrendous amounts of money on something they won’t even share with people, that you can’t run locally, and that is surpassed by a few lean Chinese startups with barely a few million.
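The per-dollar claims in the bullets boil down to two simple ratios. This sketch only reuses the figures quoted above (a third-party estimate for o1 and DeepSeek's own stated figure, which may not count the same costs), so treat it as a rough sanity check rather than a verified comparison.

```python
# Back-of-the-envelope ratios using only the figures quoted in the bullets above.
O1_COST_USD = 100e6        # "around $100 million or more" (estimate)
DEEPSEEK_COST_USD = 5.6e6  # DeepSeek's own stated figure
API_DISCOUNT = 0.95        # "95% LESS" than the ChatGPT API


def cost_ratio(expensive: float, cheap: float) -> float:
    """How many times more the expensive option costs."""
    return expensive / cheap


def price_multiple(discount: float) -> float:
    """Paying (1 - discount) of the price buys 1 / (1 - discount) times as much per dollar."""
    return 1 / (1 - discount)


if __name__ == "__main__":
    print(f"Training: ~{cost_ratio(O1_COST_USD, DEEPSEEK_COST_USD):.1f}x cheaper")
    print(f"API: ~{price_multiple(API_DISCOUNT):.0f}x more calls per dollar")
```

So "95% less" and "20x per dollar" are the same claim stated two ways, while the quoted training figures work out to roughly 18x.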

I'd add one more: the USD vs. BRICS.

The narrative has been "yes, BRICS has all the energy and commodities, but US has the AI". We have been asked to believe that massive US-led productivity gains from AI will make the US deficit immaterial again.

DeepSeek shakes that narrative, because if the US doesn't have a lead in AI (or energy or commodities), then what does it have?

Following a note by nostr:nprofile1qqs8d3c64cayj8canmky0jap0c3fekjpzwsthdhx4cthd4my8c5u47spzfmhxue69uhhqatjwpkx2urpvuhx2ucpz3mhxue69uhhyetvv9ujuerpd46hxtnfduq36amnwvaz7tmwdaehgu3dwp6kytnhv4kxcmmjv3jhytnwv46q6ekpnp, I'm playing with ChatGPT Canvas to build and run a React app within the ChatGPT browser window.

I've successfully connected this to webhooks which run back-end processes for me.

So right now I'm running a full application with my own back end in the ChatGPT window.

And simultaneously having ChatGPT tweak the UI for me even as I use it.

🤯

Not sure where this goes from here. It's wild.

What happens to web design once every user can build their own interface for any backend on the fly?
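The setup described above (a Canvas-built UI handing work off to a webhook) is a plain JSON request/response loop. Below is a minimal sketch of both halves, assuming the webhook accepts and returns JSON; the `/webhook` path, the `action`/`text` fields and the uppercase "job" are hypothetical placeholders. In the React app the client half would be a `fetch` POST; it is written in Python here so the whole loop runs in one file.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class WebhookHandler(BaseHTTPRequestHandler):
    """Hypothetical back end: takes a JSON job from the UI and returns a JSON result."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        job = json.loads(self.rfile.read(length) or b"{}")
        # Placeholder "back-end process": uppercase the text it was sent.
        result = {"action": job.get("action"), "output": str(job.get("text", "")).upper()}
        body = json.dumps(result).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def call_webhook(url: str, payload: dict) -> dict:
    """Front-end side: POST JSON to the webhook and parse the JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Empty ProxyHandler: ignore proxy env vars so the local demo connects directly.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=5) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), WebhookHandler)  # port 0 picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_port}/webhook"
    print(call_webhook(url, {"action": "shout", "text": "hello"}))
    server.shutdown()
```

Because the contract is just "JSON in, JSON out", the UI can be regenerated or tweaked freely without touching the back end.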

This new stuff people are doing with OpenAI’s Canvas and Operator feels like a major shift in how we build software.

Look at these videos: https://x.com/minchoi/status/1883554868293779898

And then there’s DeepSeek’s new R1 model, which is open source, can run (in its smaller distilled versions) on a decent desktop computer, and is better than anything OpenAI has done with regard to coding… almost as good as Claude Sonnet… I put $10 into DeepSeek and have been using it a lot, only to have spent a few cents!

This stuff is improving so fast, and the cost is dropping so much… it’s hard to keep up.

Look at this: you can run your own RAG at o1 levels using DeepSeek R1!

https://lightning.ai/akshay-ddods/studios/compare-deepseek-r1-and-openai-o1-using-rag
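RAG (retrieval-augmented generation) means fetching the most relevant documents first and pasting them into the prompt, so the model answers from them. A toy sketch of the retrieval and prompt-building steps, assuming plain keyword overlap in place of the embedding/vector search most RAG setups use; the sample docs are made up, and the actual call to R1 is left out rather than guessing at an API.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank docs by shared query terms (a stand-in for embedding similarity search)."""
    q = Counter(tokenize(query))

    def score(doc: str) -> int:
        d = Counter(tokenize(doc))
        return sum(min(count, d[term]) for term, count in q.items())

    # sorted() stays stable with reverse=True, so ties keep their original order
    return sorted(docs, key=score, reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Paste the retrieved snippets above the question so the model answers from them."""
    snippets = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{snippets}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Relays store and forward Nostr events.",
        "Zaps are Lightning payments attached to Nostr events.",
        "Bananas are yellow.",
    ]
    question = "How do zaps work on Nostr?"
    # The resulting string is what you would send to DeepSeek R1 (or any other model).
    print(build_prompt(question, retrieve(question, docs)))
```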

I think we’re seeing Open Source win against big proprietary tech platforms, and it really makes me feel better about the AI future.

One question here: why aren’t we talking more about what we can do with it on Nostr? I know folks are excited about bitcoin, which is cool, but look at what we can start doing with AI… Who’s talking about it on Nostr? How do we use AI and these emerging open models in building/using Nostr? I know we’ve got DVMs, but that’s kind of a limited job-request system.

I think DVMs have lots of scope to enable AI agents to discover and interact with each other.
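For context on the DVM side: under NIP-90 a client publishes a job request event (kinds 5000-5999), service providers answer with a result event whose kind is the request kind plus 1000, and kind 7000 carries job feedback. A minimal sketch of building an unsigned text-generation request, assuming kind 5050 from the community DVM kind list; the prompt, bid and relay values are placeholders, and signing (the BIP-340 Schnorr `sig`) is left out.

```python
import hashlib
import json
import time

TEXT_GENERATION_KIND = 5050  # DVM kind list; results come back as 5050 + 1000 = 6050


def event_id(pubkey: str, created_at: int, kind: int, tags: list, content: str) -> str:
    """NIP-01 id: sha256 over the compact JSON array [0, pubkey, created_at, kind, tags, content]."""
    serialized = json.dumps([0, pubkey, created_at, kind, tags, content],
                            separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()


def job_request(pubkey: str, prompt: str, bid_msat: int, relays: list[str]) -> dict:
    """Unsigned NIP-90 job request asking any DVM for text generation."""
    tags = [
        ["i", prompt, "text"],     # input data and its type
        ["output", "text/plain"],  # desired output format
        ["bid", str(bid_msat)],    # most the customer will pay, in millisats
        ["relays", *relays],       # where providers should publish results
    ]
    created_at = int(time.time())
    event = {"pubkey": pubkey, "created_at": created_at, "kind": TEXT_GENERATION_KIND,
             "tags": tags, "content": ""}
    event["id"] = event_id(pubkey, created_at, TEXT_GENERATION_KIND, tags, "")
    return event  # still needs a "sig" before relays will accept it


def result_kind(request_kind: int) -> int:
    return request_kind + 1000


if __name__ == "__main__":
    job = job_request("ab" * 32, "Summarize my feed", 10_000, ["wss://relay.example.com"])
    print(json.dumps(job, indent=2))
```

An agent listening for kind 6050 events that reference its request id would pick up the answer.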

I am working on this, and as part of that user agent I guess I end up at an "AI-first Nostr client".

What other areas to explore?

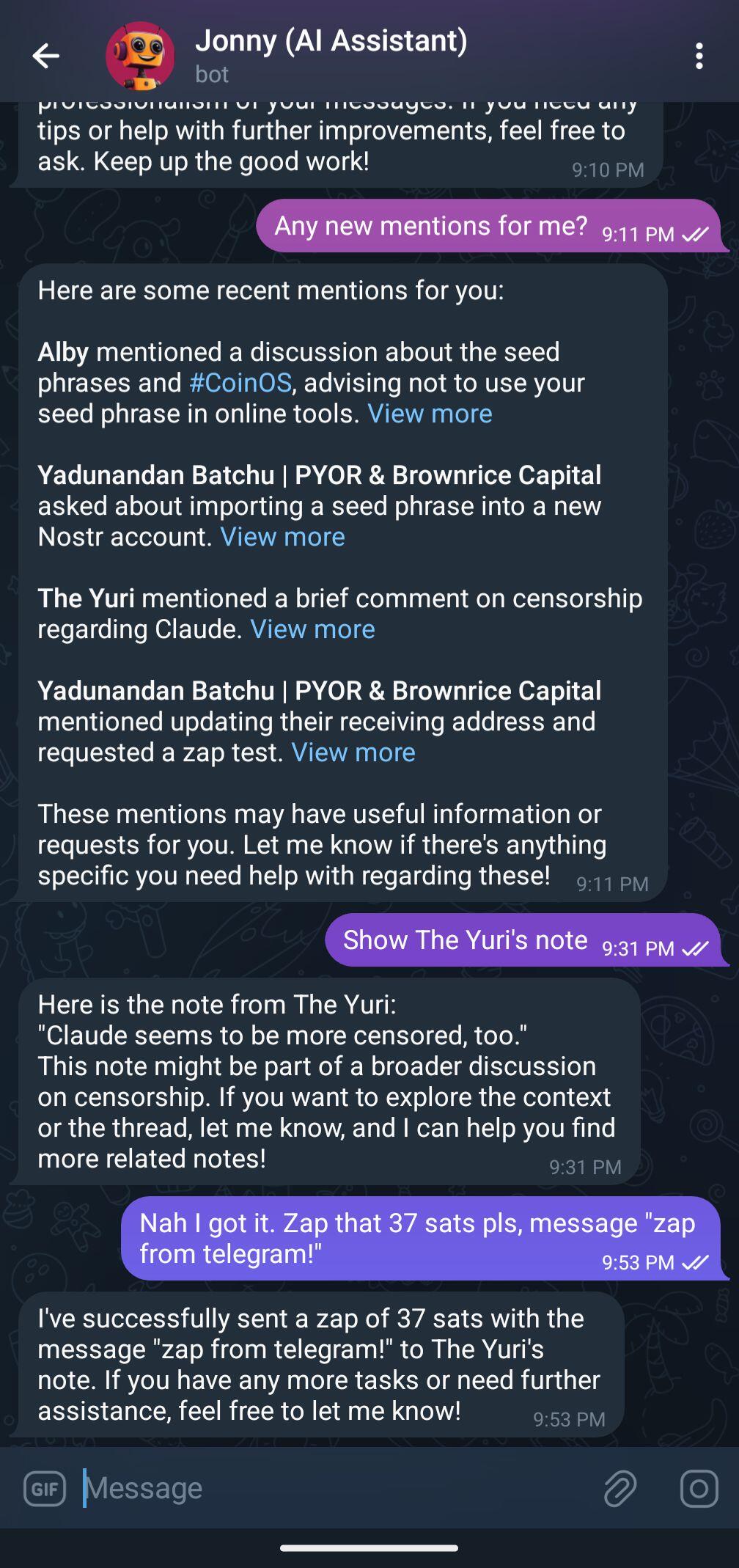

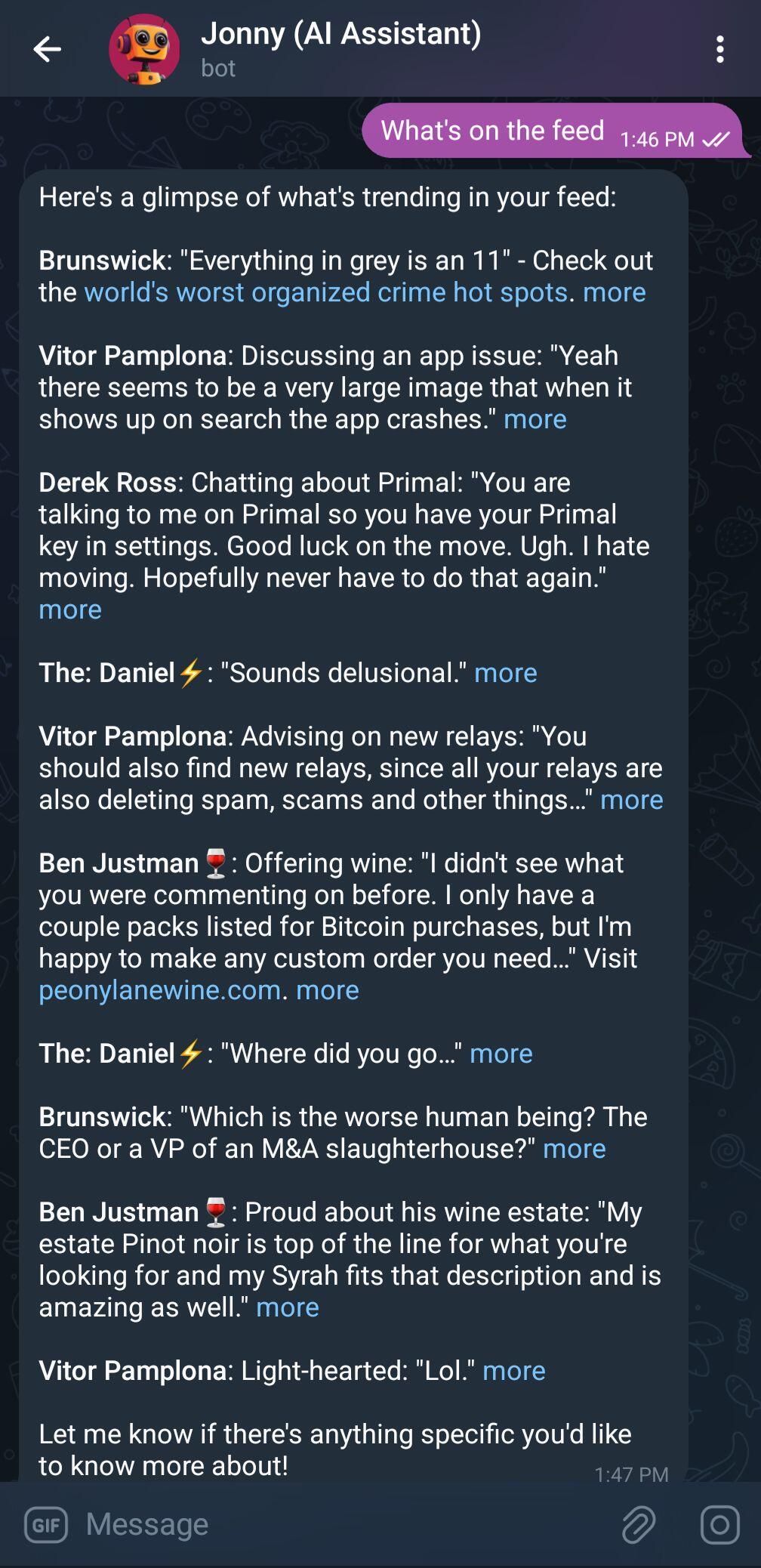

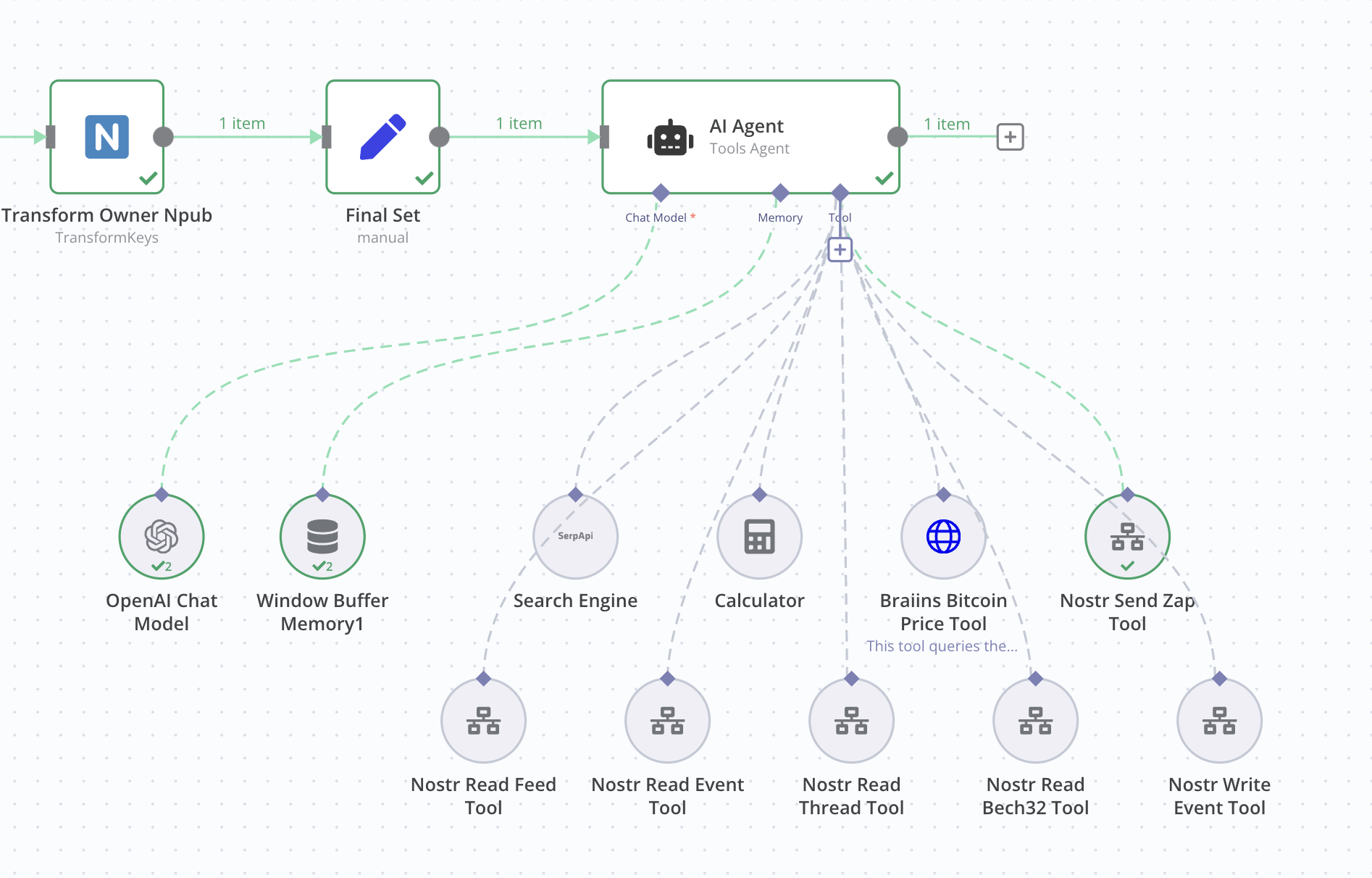

AI-first Nostr client in a Telegram app. Jonny can now read and summarise your feed for you. An idea by nostr:nprofile1qqsph3c2q9yt8uckmgelu0yf7glruudvfluesqn7cuftjpwdynm2gygpz3mhxue69uhhyetvv9ujuerpd46hxtnfduq3qamnwvaz7tmwdaehgu3wwa5kuegpzpmhxue69uhkummnw3ezumrpdejqwk5cd9, built using Nostrhttp by nostr:nprofile1qqs06ph4g27xcp4rnzqczr0fzlnvt5nhm763dzd9dzkhk7j534k4fngprpmhxue69uhkummnw3ezuarzv95jumt98g6njv30qy28wumn8ghj7un9d3shjtnyv9kh2uewd9hszrthwden5te0dehhxtnvdakqryyees in n8n.

I may be getting carried away here. Still need to add DVM read-write and enhanced memory too. Getting there.
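The "read and summarise your feed" step can be split into three parts: get the user's follows from their NIP-02 contact list (kind 3), ask relays for recent kind-1 notes from those authors with a NIP-01 `REQ`, then hand the text to a model. A rough sketch of that flow, not how Jonny or Nostrhttp actually do it; the subscription id, time window, prompt wording and character cap are all assumptions.

```python
import json


def follows_from_contact_list(contact_list: dict) -> list[str]:
    """NIP-02: a kind-3 event lists followed pubkeys as ["p", <hex pubkey>, ...] tags."""
    return [t[1] for t in contact_list.get("tags", []) if len(t) >= 2 and t[0] == "p"]


def feed_req(sub_id: str, authors: list[str], since: int, limit: int = 100) -> str:
    """NIP-01 REQ asking a relay for recent kind-1 text notes from those authors."""
    flt = {"kinds": [1], "authors": authors, "since": since, "limit": limit}
    return json.dumps(["REQ", sub_id, flt])


def summary_prompt(notes: list[dict], max_chars: int = 4000) -> str:
    """Newest notes first, cut off at a rough size budget (hypothetical wording and cap)."""
    lines, used = [], 0
    for note in sorted(notes, key=lambda n: n["created_at"], reverse=True):
        line = "- " + note["content"].strip().replace("\n", " ")
        if used + len(line) > max_chars:
            break
        lines.append(line)
        used += len(line)
    return "Summarize these Nostr notes in a few short bullet points:\n" + "\n".join(lines)


if __name__ == "__main__":
    contacts = {"kind": 3, "tags": [["p", "a" * 64], ["p", "b" * 64, "wss://relay.example.com"]]}
    print(feed_req("feed", follows_from_contact_list(contacts), since=1_700_000_000))
    notes = [{"created_at": 1, "content": "older note"}, {"created_at": 2, "content": "newer note"}]
    print(summary_prompt(notes))
```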

That is a very handy tool. I just added it as a tool for an AI to use in an n8n workflow. Worked flawlessly.

Thanks Sebastix!

Great answer, in seconds 🤯🥳

This place is so good.

Wow thanks.

This place blows my mind 🤯. Qualified answers from awesome expert builders in seconds.

There is no second best network.

Is there any simple web-based tool that accepts a NIP-19 bech32 ID and returns the event JSON? Like njump, just without the HTML. #asknostr
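What such a tool has to do is small: bech32-decode the NIP-19 string to get the 32-byte event id, then send a relay a `REQ` filtered on `ids` and return the raw event. A self-contained sketch of the decoding half, assuming only `note1…` and `nevent1…` inputs (for `nevent`, the id is TLV type 0, "special"); the bech32 routines follow the BIP-173 reference algorithm, and the relay round trip is only shown as the `REQ` message.

```python
import json

CHARSET = "qpzry9x8gf2tvdw0s3jn54khce6mua7l"
GEN = [0x3B6A57B2, 0x26508E6D, 0x1EA119FA, 0x3D4233DD, 0x2A1462B3]


def _polymod(values: list[int]) -> int:
    chk = 1
    for v in values:
        top = chk >> 25
        chk = ((chk & 0x1FFFFFF) << 5) ^ v
        for i in range(5):
            if (top >> i) & 1:
                chk ^= GEN[i]
    return chk


def _hrp_expand(hrp: str) -> list[int]:
    return [ord(c) >> 5 for c in hrp] + [0] + [ord(c) & 31 for c in hrp]


def convertbits(data, frombits: int, tobits: int, pad: bool) -> list[int]:
    """Regroup a bit stream, e.g. 8-bit bytes <-> 5-bit bech32 words."""
    acc, bits, out = 0, 0, []
    maxv, max_acc = (1 << tobits) - 1, (1 << (frombits + tobits - 1)) - 1
    for value in data:
        acc = ((acc << frombits) | value) & max_acc
        bits += frombits
        while bits >= tobits:
            bits -= tobits
            out.append((acc >> bits) & maxv)
    if pad and bits:
        out.append((acc << (tobits - bits)) & maxv)
    elif not pad and (bits >= frombits or (acc << (tobits - bits)) & maxv):
        raise ValueError("invalid padding")
    return out


def bech32_encode(hrp: str, words: list[int]) -> str:
    poly = _polymod(_hrp_expand(hrp) + words + [0] * 6) ^ 1
    checksum = [(poly >> 5 * (5 - i)) & 31 for i in range(6)]
    return hrp + "1" + "".join(CHARSET[w] for w in words + checksum)


def bech32_decode(code: str) -> tuple[str, list[int]]:
    code = code.strip().lower()  # simplified: mixed case is not rejected
    if code.startswith("nostr:"):
        code = code[len("nostr:"):]
    pos = code.rfind("1")
    if pos < 1 or pos + 7 > len(code):
        raise ValueError("not a bech32 string")
    hrp, words = code[:pos], [CHARSET.find(c) for c in code[pos + 1:]]
    if -1 in words or _polymod(_hrp_expand(hrp) + words) != 1:
        raise ValueError("bad character or checksum")
    return hrp, words[:-6]


def event_id_from_nip19(code: str) -> str:
    hrp, words = bech32_decode(code)
    raw = bytes(convertbits(words, 5, 8, pad=False))
    if hrp == "note" and len(raw) == 32:
        return raw.hex()
    if hrp == "nevent":
        i = 0
        while i + 2 <= len(raw):  # walk the TLV records
            t, length = raw[i], raw[i + 1]
            value = raw[i + 2:i + 2 + length]
            if t == 0 and len(value) == 32:  # type 0 "special" = event id
                return value.hex()
            i += 2 + length
    raise ValueError(f"unsupported or malformed NIP-19 entity: {hrp}")


def req_for_event(code: str, sub_id: str = "lookup") -> str:
    return json.dumps(["REQ", sub_id, {"ids": [event_id_from_nip19(code)]}])
```

The `REQ` string is what you would send over a WebSocket to a relay; the `EVENT` message that comes back carries the event JSON.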

Fighting for something is always more powerful than fighting against something.

GM

I agree with the OP. And I think that the bit that is missing is the interoperability layer.

It could be Nostr. I think I see a way to use a DVM-like implementation for agents to discover and interact with other agents. Still thinking it through.

What do you think?
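One existing building block for this kind of discovery is NIP-89: a DVM (or agent) publishes a kind 31990 "handler information" event with a `k` tag for each job kind it serves, and anyone can find it with a `#k` tag filter. A minimal sketch of that loop, assuming text-generation jobs (kind 5050); the handler id and profile fields are hypothetical, and events are left unsigned.

```python
import json

HANDLER_INFO_KIND = 31990  # NIP-89 handler information event


def handler_announcement(pubkey: str, handler_id: str, job_kinds: list[int], profile: dict) -> dict:
    """Unsigned kind-31990 event: "d" names this handler, one "k" tag per supported job kind."""
    tags = [["d", handler_id]] + [["k", str(k)] for k in job_kinds]
    return {"pubkey": pubkey, "kind": HANDLER_INFO_KIND, "tags": tags,
            "content": json.dumps(profile)}


def discovery_req(sub_id: str, job_kind: int) -> str:
    """REQ for every handler that says it serves job_kind, using a "#k" tag filter."""
    return json.dumps(["REQ", sub_id, {"kinds": [HANDLER_INFO_KIND], "#k": [str(job_kind)]}])


def providers_for(events: list[dict], job_kind: int) -> list[str]:
    """Re-check relay results client-side and return the matching handler pubkeys."""
    want = ["k", str(job_kind)]
    return sorted({e["pubkey"] for e in events
                   if e.get("kind") == HANDLER_INFO_KIND and want in e.get("tags", [])})


if __name__ == "__main__":
    ann = handler_announcement("ab" * 32, "feed-summarizer", [5050, 5001], {"name": "Jonny"})
    print(json.dumps(ann))
    print(discovery_req("discover", 5050))
    print(providers_for([ann], 5050))
```

An agent that finds a provider this way can then send it a NIP-90 job request tagged with that provider's pubkey.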

Updated my HAVEN

I am using Telegram as a front end, but could use any front end, such as Open WebUI. The key part is that the AI is responding to queries exactly as you outlined, and can do replies, reactions, and zaps.
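For the replies and reactions, the event shapes are small. A sketch of unsigned templates, assuming NIP-10 marked `e` tags for threading and a NIP-25 kind 7 reaction; zaps are left out because they go through a NIP-57 zap request (kind 9734) and a Lightning wallet, which this does not model. Ids and pubkeys in the usage are placeholders.

```python
def reply_template(parent: dict, text: str, relay: str = "") -> dict:
    """Unsigned NIP-10 reply: marked "e" tags plus "p" tags for everyone in the thread."""
    root = next((t for t in parent["tags"]
                 if t[0] == "e" and len(t) > 3 and t[3] == "root"), None)
    root_id = root[1] if root else parent["id"]  # replying to a root note: it is the root
    tags = [["e", root_id, relay, "root"]]
    if root_id != parent["id"]:
        tags.append(["e", parent["id"], relay, "reply"])
    pubkeys = [parent["pubkey"]] + [t[1] for t in parent["tags"] if t[0] == "p"]
    tags += [["p", pk] for pk in dict.fromkeys(pubkeys)]  # de-duplicate, keep order
    return {"kind": 1, "tags": tags, "content": text}


def reaction_template(target: dict, content: str = "+") -> dict:
    """Unsigned NIP-25 reaction: "+" is a like, an emoji is an emoji reaction."""
    return {"kind": 7, "content": content,
            "tags": [["e", target["id"]], ["p", target["pubkey"]], ["k", str(target["kind"])]]}


if __name__ == "__main__":
    note = {"id": "e1" * 32, "pubkey": "ab" * 32, "kind": 1, "tags": [], "content": "gm"}
    print(reply_template(note, "GM! Summary coming up."))
    print(reaction_template(note, "🤙"))
```

Both templates still need `pubkey`, `created_at`, `id` and `sig` filled in by whatever signs for the AI.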

It currently doesn't support the Phoenixd backend. I would use it if it had that support.

Selectively, at Amethyst's discretion, on popular notes or on user intention (e.g. click to expand), with responses optionally paid or zappable, and cached.

I can imagine wanting to build a DVM responder and trying to make a profit on zaps versus generation cost.

Just brainstorming.
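The cost-vs-zaps idea comes down to a per-response margin. A sketch of the arithmetic, where every input (token count, price per million tokens, BTC/USD rate, zap size) is a hypothetical placeholder to swap for real numbers; the only fixed fact is 1 BTC = 100,000,000 sats.

```python
SATS_PER_BTC = 100_000_000


def generation_cost_sats(tokens: int, usd_per_million_tokens: float, usd_per_btc: float) -> float:
    """Model cost of one response, converted from USD to sats."""
    cost_usd = tokens / 1_000_000 * usd_per_million_tokens
    return cost_usd / usd_per_btc * SATS_PER_BTC


def margin_sats(zap_sats: int, tokens: int, usd_per_million_tokens: float, usd_per_btc: float) -> float:
    """Zap income minus generation cost; positive means the responder made money on this reply."""
    return zap_sats - generation_cost_sats(tokens, usd_per_million_tokens, usd_per_btc)


def break_even_tokens(zap_sats: int, usd_per_million_tokens: float, usd_per_btc: float) -> float:
    """Largest response, in tokens, that a zap of this size still pays for."""
    zap_usd = zap_sats / SATS_PER_BTC * usd_per_btc
    return zap_usd / usd_per_million_tokens * 1_000_000


if __name__ == "__main__":
    # Placeholder inputs only: 2,000 tokens, $2 per million tokens, $100k per BTC, a 21-sat zap.
    print(f"margin: {margin_sats(21, 2_000, 2.0, 100_000.0):.1f} sats")
    print(f"break-even: {break_even_tokens(21, 2.0, 100_000.0):,.0f} tokens")
```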

I think I see you're starting with DVMs, and that's encouraging for me, as I think that's where I'll start too.