During nostr:npub1sg6plzptd64u62a878hep2kev88swjh3tw00gjsfl8f237lmu63q0uf63m's talk with nostr:npub1qny3tkh0acurzla8x3zy4nhrjz5zd8l9sy9jys09umwng00manysew95gx, he mentioned that nostr isn't doing enough to prevent tracking. Does anyone have more information on this? I thought accessing it over Tor was sufficient. So is Tor still not enough, is Tor unable to access nostr again, or is using Tor itself too difficult? #asknostr

The only thing a blockchain can protect is its own history. When you put something else "on" it, you need an authority to enforce ownership. Once you have an authority, there's no benefit to a blockchain over a centralized database / Merkle tree.
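A toy hash chain illustrates the point. It is a minimal sketch: the names `Block`, `build_chain`, and `verify` are hypothetical, and it has no consensus or proof-of-work. Each entry commits to the hash of the entry before it, so rewriting history is detectable. Nothing in the structure, however, can check whether a payload such as "alice owns the house" is true in the outside world.

```python
import hashlib
from dataclasses import dataclass

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first entry

@dataclass
class Block:
    prev_hash: str  # hash of the previous block: this is what links history
    payload: str    # arbitrary data; the chain cannot judge whether it is true

    def digest(self) -> str:
        return hashlib.sha256((self.prev_hash + "|" + self.payload).encode()).hexdigest()

def build_chain(payloads: list[str]) -> tuple[list[Block], str]:
    """Link payloads into a chain; return the blocks and the tip hash."""
    chain: list[Block] = []
    prev = GENESIS_PREV
    for payload in payloads:
        block = Block(prev, payload)
        chain.append(block)
        prev = block.digest()
    return chain, prev

def verify(chain: list[Block], tip: str) -> bool:
    """True iff every link is intact and the chain ends at the expected tip."""
    prev = GENESIS_PREV
    for block in chain:
        if block.prev_hash != prev:
            return False
        prev = block.digest()
    return prev == tip
```

If you build a chain from `["genesis", "alice owns the house"]` and then edit the second payload, `verify` returns `False`, because the chain protects its own history. A chain built from a false claim like `"mallory owns the moon"` verifies just fine. Deciding who really owns what still takes an outside authority.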

nostr:npub1sg6plzptd64u62a878hep2kev88swjh3tw00gjsfl8f237lmu63q0uf63m's opening got me. Had to slow it down and type it out after I got that sand out of my eye. #HtP

ODELL: So while we're on that topic, why do you focus so much time, energy, money, on nostr?

JACK: It's... The only reason I'm in a position that I can even consider doing something like this is because of the grace of the open source developers on the Internet. And the way that the Internet was built... for those of you who are as old as me, you remember Usenet, you remember the early days of Linux, you remember Gopher, you remember the early days of the web, you remember the principles.

But most importantly you remember that you could read the code. And that was incredible. The fact that all these APIs were open, the fact that RabbleXVF brought this up, that TCP/IP, the very foundation of the Internet, was considered the ugly, wrong choice, and people made it work. And every single interaction we have today, in the world, every single person in the world, has an interaction today where they touch that work. And they touch the concept of the bazaar model instead of the cathedral.

We have so many companies and we have so many organizations trying to build this cathedral model, and control it, and take more and more so that they can profit more and more. And we need to have an opinionated and different approach that we build in parallel. For when those things fail, which they will, we have some place that people can go to. And that's what gives me a lot of patience around what everyone is building here, because like, our only goal here is just to build in parallel. And make sure we continue to build something that, when people need it, it's available. And I think that's really important that we always have those options available to us. The same is true for Bitcoin.

There's a lot of talk about how censorship resistance is important, and a lot of talk about censoring, a lot of talk about freedom of speech and all these things, but no one has proven that they actually want that. If they actually wanted it, they'd be using it every single day. And it's a lot of... it's really good marketing right now, but if you actually want to see if people believe in it, believe in it in their soul, they're going to act, and they're going to do something about it. And we're just not seeing it right now. But when they are ready, or when they're forced to be ready, or when their mind's finally open to take another action and sacrifice the convenience they have right now, and the network they have right now, that this place is ready, and that Bitcoin is ready, and it's up to the task of supporting billions and billions of people.

So it's a long haul, and it's something that we might not even see the entire fruits in our lifetime - I think we will - but the fact that we have this collection of people working on this, and working on these ideals, and thinking about the long term, and suffering through the small numbers right now, I think is really important, really unique, and, I dunno, I love it. I love being part of this community.

[ODELL raises a hand to the audience, who claps]

JACK: Matt [ODELL] is very big on clapping, and getting energy back from the audience, so, I don't care, so, any time he stops talking if you could give him some energy back and, I'm good. nostr:note17zu0ure4yz3vvemcdx28gp4qndgh8rh2k2wsgrjeanzkmch7ehdsd47f0u

By providing orthogonal value, it doesn't need to be "as good or better". It can't be terrible though.

"In some sense what machine learning is doing is to “mine” the computational universe for programs that do what one wants."

This is the crux of AI to me. There is a constant refrain that models are “just doing X”, where X is usually “predict the next word”, but the reality is that we have no idea what the models are doing. We only know that they access many gigabytes of memory, make billions or trillions of calculations, and after this immense amount of work, then they predict the next word.

#llm #ai

Every time I see AI mentioned it’s either “wired” or “tired”. Lots of people casting their votes in both camps, but the one that bothers me the most is the people who have been programming since the 90s throwing shade. “We saw this decades ago, and it’s happening all over again! I have to rewrite everything that comes out of an AI!”

Which as far as I can tell is exceptionally nearsighted. First, those AI algorithms in the 90s were terrible hacks that happened to work. Do you know how SIFT feature detection works? It’s amazing that it provided anything useful at all, so it’s not surprising that it had its limits. N-gram word prediction? Sure it strung words together, but the results were meager. Looking at ChatGPT and recalling ELIZA? Tell me more about that thing that you read about in college, and it was already lore by then.

If you don’t see the difference between then and now, let me summarize in three words: deep neural networks. All of those 90s era algorithms were weird hacks, but they were weird hacks that we could understand. No one was unsure of how to fix a broken Bayesian network. You built one, found cases where it didn’t work, and improved the model. Then came deep neural networks.

No one liked deep neural networks. Not because they were new, but because you couldn’t understand them. They were magic. There was no class on hand tuning hidden layer weights. There’s no step debugger, or optimization process. The only reason they were even useful is because they trained themselves. To the extent that a programmer made them, it was by experimenting with connectivity and training methodology. They were worse than magic, they were black magic.

And now, now that the incomprehensible black magic, made of essentially a trillion if statements, can string together some code, the people who know the most about that work look at the results and say, “eh, it really isn’t that great”.

They’re not wrong. Even the best models struggle with basic things. A good programmer can plan circles around them, understand the underlying value that’s being delivered, conduct user testing, manage a project timeline, etc. Even the best model isn’t going to compete with a good developer.

Then finally they conclude, without the slightest hesitation, “these are hard problems that we just don’t know how to solve yet.” And this is the cherry on the proverbial sundae! Yes! You’re right! We don’t know how to solve these problems! But we also don’t know how to build the one you’re using right now, because we don’t build them… they train themselves.

Of course, they wouldn’t exist without us. And they tend to get better when we change things. But the way that we improve them is more like chemistry than programming. We think about the fundamentals, make a hypothesis, tweak something, then measure the output. Nothing about tweaking the number of training tokens, layer shapes, connectivity, activation mechanisms, or energy models, requires you to be better than the best staff engineer in the world. You don’t need to derive the rules for writing better software, or how to be creative. All you need to do is know what networks have been tried, and come up with a new one that might learn a little better. Because, the models teach themselves.

Phew!

Ok.

Now tell me how foolish I am to think they might keep getting better for a while.

Seems risky to bring a not-a-gun to a gun fight. And if it wasn't before, it is now.

It's less magical. Sometimes you might want magic though?

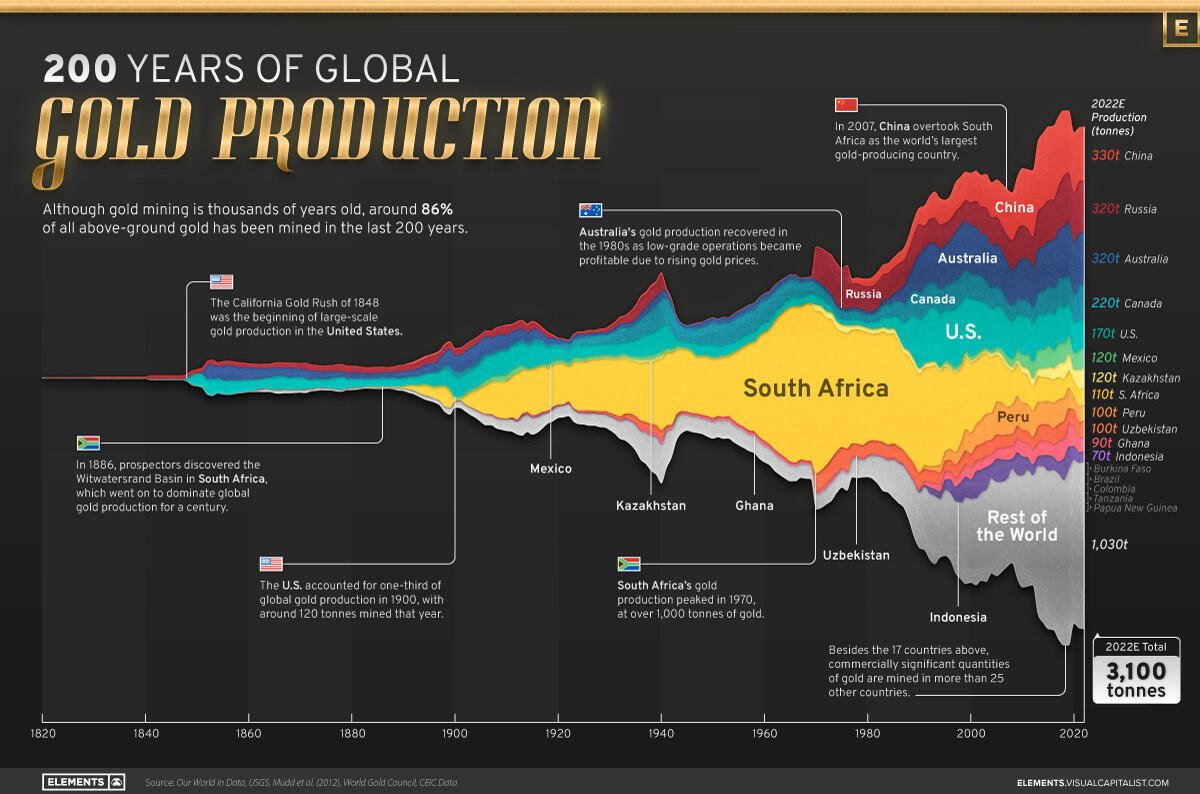

Also good to keep in mind when people tell you that the "real" winners during the gold rush were the ones selling pickaxes.

What makes gold valuable is its limited supply

This is the future we need. Zed.dev can use this to provide a Snow Crash Librarian experience 100% locally. Configure a local web proxy that denies anything other than localhost and not a byte leaves your laptop.
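The policy half of such a proxy is small. Below is a minimal sketch of the allow/deny check a proxy could apply to each request. The function `is_allowed` is hypothetical, and wiring it into an actual proxy server is out of scope. It assumes the proxy sees full request URLs and treats only `localhost` and loopback IP addresses as local.

```python
import ipaddress
from urllib.parse import urlsplit

def is_allowed(url: str) -> bool:
    """Allow only requests whose target host is the local machine."""
    host = urlsplit(url).hostname  # lowercased; IPv6 brackets are stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        # 127.0.0.0/8 and ::1 are loopback addresses.
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Any other hostname would need DNS and could resolve off the laptop: deny it.
        return False
```

Denying every non-loopback hostname outright, rather than resolving it and checking the result, keeps the rule simple. It also avoids making DNS lookups that would themselves leave the machine.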

There is a constant refrain that The Government causes inflation. I think this is to encourage austerity instead of monetary reform.

Permissionless until it was dominated by Google

Just realized that "first post" has fallen out of favor now that there aren't as many new things to post on.

Accurately or not, that is the data driven hypothesis of "A Chemical Hunger": https://slimemoldtimemold.com/2021/08/18/a-chemical-hunger-interlude-e-bad-seeds/.

The only thing a blockchain can protect is its own history. Anything other than this requires an external authority. Anything that requires an authority belongs in a database.

It's not exactly a remake, just a recut and remaster. It's more vibrant and dramatic, in line with the new seasons, and portrays Berlin differently. But it felt more real to me, and the ending was more satisfying.

You haven't really watched La Casa de Papel until you've used a VPN to watch the original Spanish TV cut. Slower, less brash, more real.

I like what Netflix made it into, but I like the original even more. Unfortunately, you only get Spanish with Spanish CCs by using a VPN. Maybe there are other places to find it.