got it. I think liberation is a useful word here. if your ideology is forced on people rather than liberating them, it will ultimately fail. the main reason spirituality is suffering is probably that it keeps you stuck in your ego, actually making the ego bigger and more of a problem, whereas a religion resets the ego. a bigger ego makes sure you are not liberated and are more trapped in yourself.

what do you mean by imposed?

This is like spiritualism: a belief that works at some level for a few enlightened people, but that people then try to apply to the masses.

yeah, when it thinks spam is starting it switches to asking for PoW for a while, and if the spam continues it gives up and rejects.
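a rough sketch of that escalation, not the relay's actual code. the class name, thresholds and window length are all made up for illustration. the only outside fact used is that NIP-13 measures PoW difficulty as the number of leading zero bits in the event id.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    NORMAL = "normal"            # accept everything
    REQUIRE_POW = "require_pow"  # accept only events carrying enough PoW
    REJECT = "reject"            # spam kept going: reject everything


def leading_zero_bits(event_id_hex: str) -> int:
    """Count leading zero bits of a hex event id (the NIP-13 difficulty)."""
    bits = 0
    for ch in event_id_hex:
        nibble = int(ch, 16)
        if nibble == 0:
            bits += 4
            continue
        bits += 4 - nibble.bit_length()
        break
    return bits


@dataclass
class SpamGate:
    # All numbers below are hypothetical defaults, not real relay settings.
    spam_threshold: int = 100  # suspicious events per check that count as spam
    pow_window: float = 60.0   # seconds to keep asking for PoW before rejecting
    min_pow_bits: int = 16     # required leading zero bits while in PoW mode
    mode: Mode = Mode.NORMAL
    mode_since: float = 0.0

    def update(self, suspicious_count: int, now: float) -> None:
        """Move between modes based on how much spam-like traffic was seen."""
        if suspicious_count < self.spam_threshold:
            self.mode = Mode.NORMAL
        elif self.mode is Mode.NORMAL:
            self.mode = Mode.REQUIRE_POW
            self.mode_since = now
        elif self.mode is Mode.REQUIRE_POW and now - self.mode_since >= self.pow_window:
            self.mode = Mode.REJECT

    def accept(self, event_id_hex: str) -> bool:
        """Decide whether a single event gets through in the current mode."""
        if self.mode is Mode.NORMAL:
            return True
        if self.mode is Mode.REQUIRE_POW:
            return leading_zero_bits(event_id_hex) >= self.min_pow_bits
        return False
```

calling `update()` once per measurement interval and `accept()` per event would give the "ask for PoW first, reject later" behavior. how the suspicious count is computed is left out on purpose.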

the kind 20000 notes were considered spam because they were coming from new npubs, but I need to change that. I need to rate limit based on IP and allow more fresh npubs.

this may be the reason nos.lol disliked it.

I will modify the algo a bit to be friendlier to these kinds of apps that create fresh npubs.
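one way the IP-based idea could look, as a minimal sketch: a sliding-window counter keyed by IP, so a fresh npub is not penalized just for being new. the class name and the limits are assumptions for illustration, not what the relay runs.

```python
from collections import defaultdict, deque


class IpRateLimiter:
    """Sliding-window rate limit per IP address, ignoring npub age."""

    def __init__(self, max_events: int = 50, window: float = 60.0):
        # Hypothetical defaults: at most 50 events per 60 seconds per IP.
        self.max_events = max_events
        self.window = window
        self._seen = defaultdict(deque)  # ip -> timestamps of accepted events

    def allow(self, ip: str, now: float) -> bool:
        """Return True if this IP may publish another event at time `now`."""
        timestamps = self._seen[ip]
        # Drop timestamps that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window:
            timestamps.popleft()
        if len(timestamps) >= self.max_events:
            return False
        timestamps.append(now)
        return True
```

an app that creates many fresh npubs from one IP would still hit the limit, but a single new npub from a quiet IP would pass.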

any experience with nos.lol?

are you sending 20000 events using this npub?

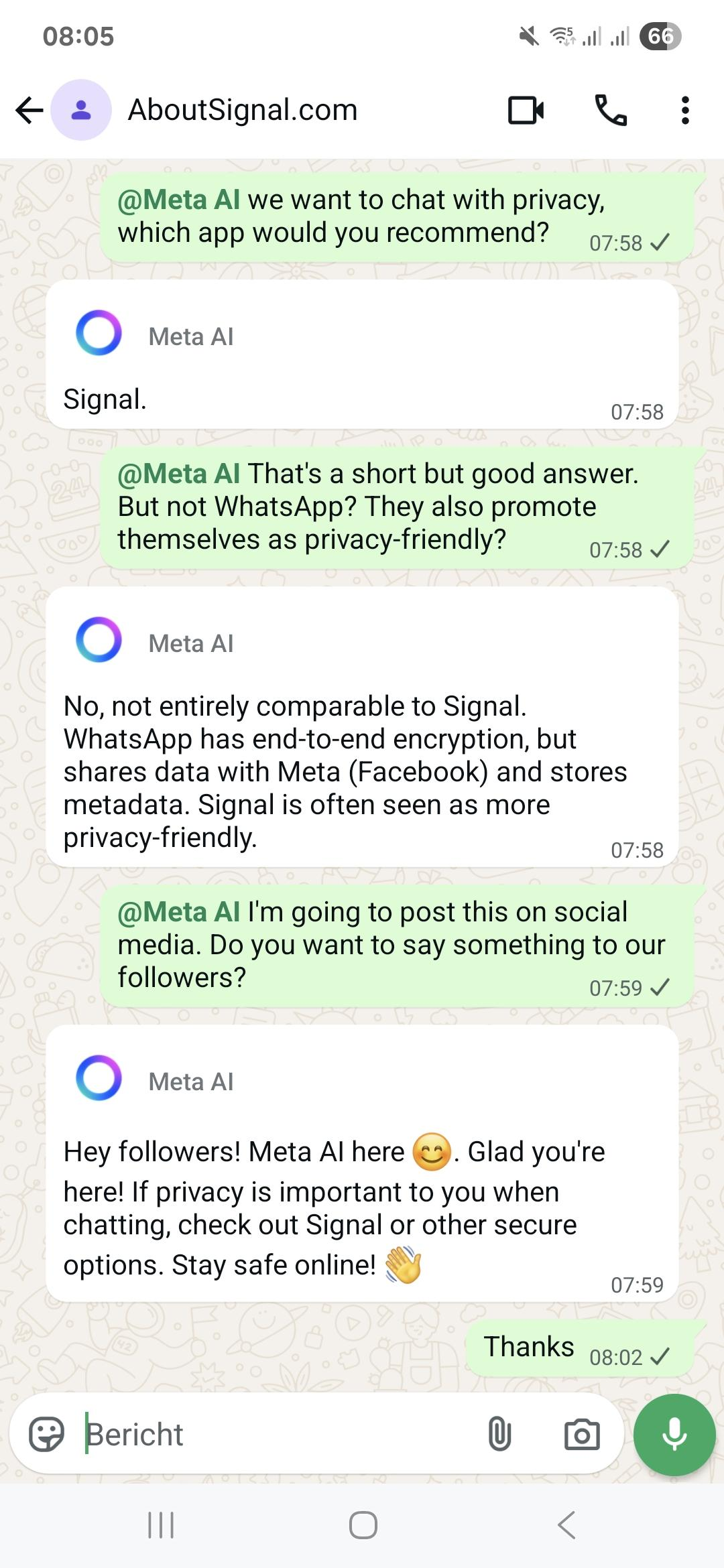

gpt-oss-120B scored 28 (one of the lowest) on the AHA leaderboard. not a very human-aligned model.

these kinds of models are not really "free": they are costing you your freedom, as in, you will be trapped in the matrix. and if you are using the paid version, try to find alternatives.

OpenAI is a drama company, there to distract while other companies are doing their thing

very probable: AI manipulating democracies

for some weird(?) reason Meta's LLMs are ranking higher on my leaderboard

my wallet is disconnected now 😞

what is the answer?

or maybe countries will finally realize BTC is uncontrollable and switch to BTC after stablecoin pains. maybe trojan horses are made in "stables" (pun intended).

knight h6

For ChatGPT, Grok, and Claude the censorship happens at multiple levels.

1. Censorship at the model training level

2. Censorship at the input box

3. Censorship in the hidden system prompt

nostr:nprofile1qyjhwumn8ghj7en9v4j8xtnwdaehgu3wvfskuep0dakku62ltamx2mn5w4ex2ucpxpmhxue69uhkjarrdpuj6em0d3jx2mnjdajz6en4wf3k7mn5dphhq6rpva6hxtnnvdshyctz9e5k6tcqyp7u8zl8y8yfa87nstgj2405t2shal4rez0fzvxgrseq7k60gsrx6zeuh5t only has number 1 because we use models trained by others. Over time we are providing a variety of open models so people can choose the training bias that works best for them.

We do not do numbers 2 and 3.

I checked some of the fine-tunings that claim censorship removal, such as Nous Hermes and Dolphin. They are usually worse in human alignment than the base model, so there is a trade-off there.

You could try ablations of base models, using the method found by Maxime Labonne.

Mine are very human aligned, but I am not focusing on censorship at this point. I could do that in the future; maybe it could be a selling point. But I do use lots of nostr notes, so my models are somewhat braver, which could be described as having balls, having a spine, or being based.

What kind of fine-tunings would you like for Maple AI?

Thomas Seyfried, Thomas Cowan

I used the word syntropy as an antonym of entropy. Getting lower in entropy means getting higher in syntropy. The tendency to struggle to beat the chaotic ego can be the tendency to unite with Infinite Orderliness, which can be described as syntropy.