It was an absolute highlight of my year to meet you, and you inspire me.

Haven't tried Lovable yet for anything Nostr-related. I was able to get v0.dev to let a user log in via a browser extension and display their feed. I tried getting it to work with the nostr-sdk JS bindings, but in the end nostr-tools is all I could get working (maybe it's been around longer and was in the LLM's training data, although I did try dumping in many examples of nostr-sdk JS code).

I'm curious which Nostr features you got working?

Yes! More of this!

nostr:note1v7z346a60eljs5e7xyd8mj6exger3auaftd43fsz0r937392mrsqqr6qd2

No, can you share that?

If you are following developments in AI, you have to follow Rao (unfortunately I don’t believe he is on Nostr yet).

This analysis on inference time compute being nothing new in AI is spot-on.

Scrolling on #olas is smoooooth! Nice job nostr:npub1l2vyh47mk2p0qlsku7hg0vn29faehy9hy34ygaclpn66ukqp3afqutajft

😢

Nice idea to publish the opensats progress report.

This MediaWiki extension we're working on https://github.com/Trustroots/mediawiki-nostr-is building on your work.

The first goal is to enable signups and logins to nomadwiki.org with trustroots.org through NIP-05.
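
For anyone unfamiliar with NIP-05, here is a rough Python sketch of the lookup it describes: fetch `/.well-known/nostr.json?name=<local>` from the domain and check that the returned `names` entry matches the user's hex pubkey. The function names and the `alice@example.com` identifier are made up for illustration, and this is not code from the MediaWiki extension; the HTTP fetch itself is left out.

```python
import json
from urllib.parse import urlencode


def nip05_url(identifier: str) -> str:
    """Build the well-known URL to query for a `local@domain` identifier."""
    local, _, domain = identifier.partition("@")
    if not local or not domain:
        raise ValueError(f"not a NIP-05 identifier: {identifier!r}")
    query = urlencode({"name": local})
    return f"https://{domain}/.well-known/nostr.json?{query}"


def nip05_matches(identifier: str, pubkey_hex: str, response_body: str) -> bool:
    """Return True if the served JSON maps the local part to pubkey_hex."""
    local = identifier.partition("@")[0]
    names = json.loads(response_body).get("names", {})
    served = str(names.get(local, ""))
    # Compare case-insensitively; an absent name never matches.
    return served != "" and served.lower() == pubkey_hex.lower()
```

A login flow would fetch `nip05_url(...)`, pass the response body to `nip05_matches(...)`, and only then treat the identifier as belonging to that key.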

GitHub link gives me a 404

google drive file and folder navigation is terrible UX. maybe it's a skill issue, but it takes me twice as long to find something as it does in any other file system

I want to be able to filter any search anywhere by my web of trust.

If I had to pick first: video game reviews

Someone told me lululemon was chosen as a name to avoid the Asian market… because they can’t pronounce it 🤣

note1t5nku99y7q729lmjaq02vc7qqfu2s4lk308262e892m8qfgga0ns3q5p48

Guys can you stop posting for a second, I’m trying to find a note

He has the cool hair, taking me a while to find it one sec, I think it was trending this morning

Custom feeds on Nostr are a killer feature I never realized I wanted. Love the new Primal UX for making your own, except that it feels too hidden. If I hadn’t seen a vid of someone explaining how to do it, I never would have known.

Triple checking a new db schema with rollups to avoid full table scans so we can get fast, live metrics of DVM activity when we’re at millions of DVM events an hour. Currently at a few hundred per hour.
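
A minimal sketch of the rollup idea, using SQLite as a stand-in: every insert also bumps a per-hour, per-kind counter, so the metrics query reads a handful of rollup rows instead of scanning the raw events table. The table names, columns, and functions here are hypothetical and are not the actual DVMDash schema.

```python
import sqlite3

SCHEMA = """
CREATE TABLE dvm_events (
    id         TEXT PRIMARY KEY,
    kind       INTEGER NOT NULL,
    created_at INTEGER NOT NULL          -- unix seconds
);
CREATE TABLE hourly_rollup (
    hour_start  INTEGER NOT NULL,        -- created_at truncated to the hour
    kind        INTEGER NOT NULL,
    event_count INTEGER NOT NULL,
    PRIMARY KEY (hour_start, kind)
);
"""


def record_event(db: sqlite3.Connection, event_id: str, kind: int, created_at: int) -> None:
    """Store one event and update its hourly counter in the same transaction."""
    hour_start = created_at - created_at % 3600
    with db:
        cur = db.execute(
            "INSERT OR IGNORE INTO dvm_events VALUES (?, ?, ?)",
            (event_id, kind, created_at),
        )
        if cur.rowcount == 0:
            return  # duplicate event id: leave the counter alone
        db.execute(
            "INSERT INTO hourly_rollup (hour_start, kind, event_count) "
            "VALUES (?, ?, 1) "
            "ON CONFLICT(hour_start, kind) "
            "DO UPDATE SET event_count = event_count + 1",
            (hour_start, kind),
        )


def events_in_hour(db: sqlite3.Connection, hour_start: int) -> int:
    """Read the metric from the rollup table only (primary-key prefix lookup)."""
    row = db.execute(
        "SELECT COALESCE(SUM(event_count), 0) FROM hourly_rollup WHERE hour_start = ?",
        (hour_start,),
    ).fetchone()
    return row[0]
```

The trade-off is a second write per event in exchange for reads that stay cheap no matter how large `dvm_events` grows.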

The future is coming.

At first glance this looks like a wifi drone. But then the Ethernet cable ports don’t make sense. Now I want a WiFi drone

apparently just saying "please this is very important" helps

productivity tip for working with LLMs:

1. keep everything you've copied around with a tool like Copy 'em (on Mac)

2. star or favorite common context related to your LLM conversations

3. when prompting your llm, paste in relevant context like the codebase README, example files, etc.
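
If you'd rather script step 3 than paste by hand, something like this Python sketch could work. The function names and the `--- name ---` delimiter format are my own assumptions; any clear separator between files should do.

```python
from pathlib import Path


def load_context(paths: list[Path]) -> dict[str, str]:
    """Read each context file (README, example files, ...) into a name -> text map."""
    return {path.name: path.read_text(encoding="utf-8") for path in paths}


def build_prompt(question: str, context: dict[str, str]) -> str:
    """Put labeled context blocks first, then the actual question at the end."""
    sections = [f"--- {name} ---\n{text.strip()}" for name, text in context.items()]
    return "\n\n".join(sections + [f"Question: {question}"])
```

For example, `build_prompt("How do I subscribe to events?", load_context([Path("README.md")]))` gives you one string to paste into the chat.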

I’m doing it all in PyCharm and a terminal. So much copy / pasting from that into Claude. I also use GitHub Copilot, although it’s been disabled more often recently.

Once I understand or get some code from these systems, I’ll take it back and review it myself, in the IDE.

I wish I could get cursor to work for me, but I find more and more that I rarely want an AI in my IDE auto suggesting stuff to me. And when I tried to chat with a few open source code repos with cursor, it always disappointed. I don’t think generic RAG or whatever they use in the backend works great for that use case.

Productivity gains are up and down. I iterate A LOT. The prompts matter. Dumping lots of context or code examples of how libraries work often makes the difference.

The most useful thing for me is that I can get an AI opinion on anything now. It might be wrong, but I don’t have to start from scratch with anything.

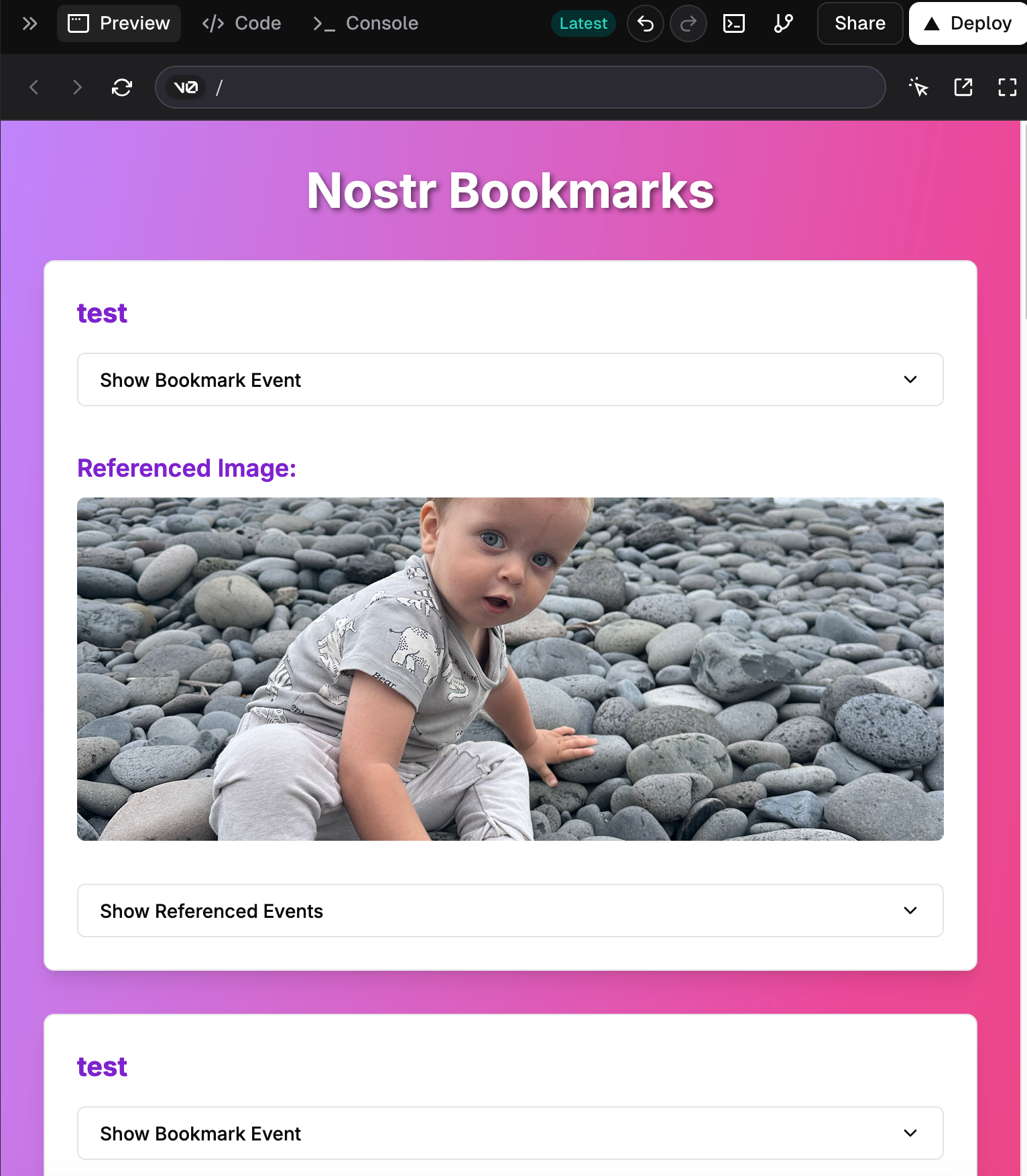

v0 and bolt.new seem to do better with nostr-tools than the nostr-sdk JS bindings. It works even better if you paste the raw README of the nostr-tools repo as "guidance" along with your prompt. I've been able to get v0 to make a simple React client that fetches all bookmark events (an apparently new kind 30006 that nostr:npub1l2vyh47mk2p0qlsku7hg0vn29faehy9hy34ygaclpn66ukqp3afqutajft made recently).
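
For reference, the fetch boils down to one NIP-01 `REQ` message sent to a relay over a websocket. This Python sketch only builds that message; it is not the nostr-tools code v0 generated, and the function name, subscription id, and default limit are placeholders I made up.

```python
import json


def bookmark_req(subscription_id: str, author_pubkey_hex: str, limit: int = 50) -> str:
    """Build a NIP-01 REQ message asking a relay for one author's kind 30006 events."""
    event_filter = {
        "kinds": [30006],                 # kind number taken from the note above
        "authors": [author_pubkey_hex],   # hex-encoded public key, not an npub
        "limit": limit,
    }
    return json.dumps(["REQ", subscription_id, event_filter])
```

The relay answers with `EVENT` messages carrying the same subscription id, followed by `EOSE` once stored events are exhausted.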

There's a new dev trend of adding an `/llms.txt` to your repo's root directory! Give LLMs all the context they need and save time while getting better answers - use it by pasting its contents into your chat before asking a question.

I'm working on one for DVMDash here, if you want to see a WIP example: https://github.com/dtdannen/dvmdash/blob/full-redesign/llms.txt

Consider adding the directory layout, the goals of the project, any domain specific background information, etc.
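
Here's a small Python sketch for generating a first draft of such a file. The section headings and function names are just my assumptions about a reasonable layout, not a required format, so adjust them to whatever your project needs.

```python
from pathlib import Path


def directory_layout(root: Path, max_depth: int = 2) -> str:
    """List files and folders under root as an indented tree, skipping dotfiles."""
    lines: list[str] = []

    def walk(folder: Path, depth: int) -> None:
        # Folders first, then files, each group sorted by name.
        for entry in sorted(folder.iterdir(), key=lambda p: (p.is_file(), p.name)):
            if entry.name.startswith("."):
                continue
            lines.append("  " * depth + entry.name + ("/" if entry.is_dir() else ""))
            if entry.is_dir() and depth + 1 < max_depth:
                walk(entry, depth + 1)

    walk(root, 0)
    return "\n".join(lines)


def render_llms_txt(project: str, goals: list[str], background: str, layout: str) -> str:
    """Combine goals, domain background, and the directory layout into one text file."""
    goal_lines = "\n".join(f"- {goal}" for goal in goals)
    return (
        f"# {project}\n\n"
        f"## Goals\n{goal_lines}\n\n"
        f"## Background\n{background.strip()}\n\n"
        f"## Directory layout\n{layout}\n"
    )
```

Write the result with `Path("llms.txt").write_text(...)` and then edit it by hand; the generated tree is only a starting point.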

Claude Sonnet: one of the best proprietary (if not best overall) LLMs that exist. I use it whenever I'm not already an expert at something:

- criticize my development ideas (such as redesigning the data pipeline behind dvmdash)

- generate first draft code prototypes (for example, I'll dump examples of Nostr code from nostr:npub1drvpzev3syqt0kjrls50050uzf25gehpz9vgdw08hvex7e0vgfeq0eseet 's nostr-sdk library relevant to the features I want)

- write first drafts of infrastructure stuff (dockerfiles, yaml config files, etc)

- debug errors in server logs (I recently set up nostr-rs-relay for a DVM-specific relay, and 8 hours later my relay went down. I dumped the logs into Claude and it found that I needed to change two settings in my podman service)

- meme ideas and suggestions for meme templates I should consider for an underdeveloped meme idea

- look up random terminal commands I don't remember (e.g. check the disk space on Ubuntu)

v0 is incredibly fun to play with for iterating on frontend designs. It renders the code as you chat and you can interact with the UI as you chat. I'm not a frontend person, so it's incredibly useful for me. I've been using it to quickly prototype some Nostr app ideas.

Perplexity.ai:

- market research is the best use case I've found for it. Ask it about a market for a new app you want to build. Ask what competitors exist. Ask what people complain about each of those competitors. Have it estimate the potential market size, and put the data into a table. Ask it to update the table with a new column with new data. All of it is backed by citations to web links.

- it's my new go-to search engine; it seems to be the only LLM-based search engine where you can keep chatting with follow-up questions

- if claude or another LLM fails to answer something, I'll ask the same thing to perplexity, and if there's an answer online, it may find it

not saying i'm going to do it, but if I was, I'd probably:

- watch for all changes and proposals to nips on github

- keep a running list of proposals per kind

- query top relays for events per each kind

- compare events found on relays against the proposals

- create a document of some kind tracking each kind number and each NIP, covering both what's happening on relays and what's in proposals

- let you chat with these documents via an LLM
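
The comparison step in that list could look roughly like this in Python. It assumes the proposals have already been collected into a kind -> proposal-labels map and the relay events have already been fetched as dicts with a `kind` field; the function name, status labels, and sample data shapes are all hypothetical.

```python
from collections import Counter


def kind_report(proposed: dict[int, list[str]], relay_events: list[dict]) -> list[dict]:
    """Line up proposed kinds against kinds actually seen on relays."""
    seen = Counter(event["kind"] for event in relay_events)
    rows = []
    for kind in sorted(set(proposed) | set(seen)):
        has_proposal = kind in proposed
        in_use = seen[kind] > 0
        if has_proposal and in_use:
            status = "proposed and in use"
        elif has_proposal:
            status = "proposed, not seen"
        else:
            status = "in use, no proposal"
        rows.append({
            "kind": kind,
            "proposals": proposed.get(kind, []),
            "events_seen": seen[kind],
            "status": status,
        })
    return rows
```

Each row could then be rendered into the tracking document, which is what you'd hand to an LLM as context.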

resisting the urge to make an AI to answer all my Nostr NIPs questions....