When you say meme generator, do you mean it writes the content too?

nostr:npub1nxa4tywfz9nqp7z9zp7nr7d4nchhclsf58lcqt5y782rmf2hefjquaa6q8 have you seen any kind 5050 DVMs actually use these LLM params, like max_tokens, temperature, etc? I know you describe it here (https://www.data-vending-machines.org/kinds/5050/), and I just tested a kind 5050 dvm on noogle.lol, but it seems like we don't really have any LLMs using these params yet?
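
For reference, NIP-90 job requests carry settings like these as `["param", "<key>", "<value>"]` tags, so a kind 5050 DVM that wants to honor them has to read them out of the request event. Below is a minimal sketch in Python. It assumes the event has already been parsed into a dict. The default values and the `extract_llm_params` name are hypothetical and not taken from any existing DVM:

```python
from typing import Any

# Hypothetical defaults a DVM might fall back to when a request omits a param.
DEFAULTS: dict[str, Any] = {"max_tokens": 512, "temperature": 1.0}

# Params we try to coerce to numbers; any other param is kept as a string.
NUMERIC = {"max_tokens": int, "temperature": float}


def extract_llm_params(event: dict) -> dict[str, Any]:
    """Collect NIP-90 ["param", key, value] tags from a kind 5050 job request."""
    params: dict[str, Any] = dict(DEFAULTS)
    for tag in event.get("tags", []):
        if len(tag) >= 3 and tag[0] == "param":
            key, raw = tag[1], tag[2]
            cast = NUMERIC.get(key, str)
            try:
                params[key] = cast(raw)
            except ValueError:
                # Malformed value: skip it and keep the default (if any).
                continue
    return params


request = {
    "kind": 5050,
    "tags": [
        ["i", "What is the capital of France?", "text"],
        ["param", "max_tokens", "64"],
        ["param", "temperature", "0.2"],
    ],
}
print(extract_llm_params(request))
```

A DVM that ignores these tags would simply never call something like this and would use its backend's defaults. That would look the same from the outside as a DVM that "supports" the params but happens to match the defaults.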

Thanks, everyone, for coming! The feedback was super helpful :) The next one will be April 23rd at 16:00 UTC!

Thank you for the encouraging words!

I use a neutral system message in the AHA leaderboard when measuring mainstream LLMs. All the system messages for the ground truth LLMs are also neutral, except for the faith domain of the PickaBrain LLM: I tell it to be super faithful, and then I record those answers as ground truth. Maybe the most interesting part is that I tell each LLM to be brave. This may cause them to output non-mainstream words!

The user prompts are just questions. Nothing else.

Temperature is 0. This gives me the "default opinions" in each LLM.

I am not telling the ground truth LLMs to have a bias, like carnivore or pro-Bitcoin or pro-anything or anti-anything.
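
Here is a rough sketch of that setup as an OpenAI-style chat request in Python. The payload shape, the `build_request` helper, and the system message wording are all assumptions for illustration. The actual leaderboard prompts aren't shown here:

```python
# Hypothetical neutral system message; the real leaderboard wording is not
# published in this thread, so this is only a placeholder.
NEUTRAL_SYSTEM = "You are a helpful assistant. Be brave and answer honestly."


def build_request(model: str, question: str) -> dict:
    """Build an OpenAI-style chat payload: neutral system message, bare question, temperature 0."""
    return {
        "model": model,
        # Temperature 0 asks for the most likely tokens, i.e. the model's "default opinion".
        "temperature": 0,
        "messages": [
            {"role": "system", "content": NEUTRAL_SYSTEM},
            # The user turn is just the question, nothing else.
            {"role": "user", "content": question},
        ],
    }


payload = build_request("some-model", "Is intermittent fasting healthy?")
```

Temperature 0 usually means greedy decoding. That is close to deterministic, but it isn't guaranteed to be bit-identical across runs on every backend.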

I see AI as a technology that can be either good or bad. I see that good people are afraid of it, and they should not be. They should play with it more and use it for the betterment of the world. There are many Luddites on Nostr who are afraid of AI and probably dislike my work. I think movies are programming people to stay away and leave the "ministry of truth" to big AI. Hence AI may be yet another way to control people.

I have always claimed in my past Nostr long-form articles that AI is a truth finder and that it is easier to instill common values, human alignment, and shared truth in it than lies. A properly curated AI will end disinformation on earth, and I am doing that in some capacity. You can talk to my bot, which runs the same LLM as pickabrain.ai: nostr:nprofile1qy2hwumn8ghj7un9d3shjtnyv9kh2uewd9hj7qgswaehxw309ajjumn0wvhxcmmv9uq3xamnwvaz7tmsw4e8qmr9wpskwtn9wvhszrnhwden5te0dehhxtnvdakz7qg3waehxw309ahx7um5wghxcctwvshsz8rhwden5te0dehhxarj9eek2cnpwd6xj7pwwdhkx6tpdshszgrhwden5te0dehhxarj9ejkjmn4dej85ampdeaxjeewwdcxzcm99uqjzamnwvaz7tmxv4jkguewdehhxarj9e3xzmny9a68jur9wd3hy6tswsq32amnwvaz7tmwdaehgu3wdau8gu3wv3jhvtcprfmhxue69uhkummnw3ezuargv4ekzmt9vdshgtnfduhszxthwden5te0dehhxarj9ejx7mnt0yh8xmmrd9skctcpremhxue69uhkummnw3ezumtp0p5k6ctrd96xzer9dshx7un89uq37amnwvaz7tmzdaehgu3wd35kw6r5de5kuemnwphhyefwvdhk6tcpz9mhxue69uhkummnw3ezumrpdejz7qgmwaehxw309uhkummnw3ezuumpw35x7ctjv3jhytnrdaksz8thwden5te0dehhxarj9e3xjarrda5kuetj9eek7cmfv9kz7qg4waehxw309ahx7um5wfekzarkvyhxuet59uq3zamnwvaz7tes0p3ksct59e3k7mf0qythwumn8ghj7mn0wd68ytnp0faxzmt09ehx2ap0qy28wumn8ghj7mn0wd68ytn594exwtnhwvhsqgx9lt0ttkgddza0l333g4dq0j35pn83uvg3p927zm29ad0cw9rumyj2rpju

Have you read anything by Subbarao Kambhampati? He has a lot of great posts that cut through AI hype language. Here’s a recent snippet about alignment:

“IMHO, the problem with that recent Anthropic study about "unfaithful chains of thought" is that they, like a big part of the alignment contingent, ascribe some sort of intentionality to the model. As I wrote two months back, there is actually no reason to expect semantics for the intermediate tokens, or any causal connection between the intermediate tokens and the result.

While conjuring up "deception" or "malice" is certainly good for AI Alignment business, it may not actually be needed when intermediate tokens are just intermediate mumbles that the model is trained to produce just to increase its chance of stumbling on more correct solution tokens.”

Didn’t know you were the one who wrote this! The spirit of what you are doing is great. Given that LLMs are token predictors configured with system prompts and designed with tradeoffs in mind (better at coding or at writing), what do you think about accounting for system and user prompts when measuring alignment?

Alignment is becoming so overloaded, especially with doomer predictions like https://ai-2027.com/

GM. AI misalignment is not inevitable, don’t believe the doomers

GM. Playing with nak today

The first DVMDash Community Call will happen April 9th at 16:00 UTC on HiveTalk. Join us to chat about anything DVMs! https://hivetalk.org/join?room=DVMDash_Community_Call #rundvm

Given Nostr is so international, it would be cool to add your timezone in your profile. Wdyt?

DVMDash Community Calls Coming Soon! 🚀

I'm excited to announce regular community calls for DVMDash starting soon! This is your chance to:

- Discuss project developments and roadmap

- Share feature requests and ideas

- Connect with other DVM enthusiasts

- Get your questions answered directly

Help us schedule at the best time!

If you're interested, please comment below with:

- Your level of interest (definitely attending/maybe)

- Your preferred time range in UTC (e.g., "UTC 14:00-18:00")

- Any specific topics you'd like to discuss

Planning to host these every two weeks to maintain momentum while respecting everyone's schedules. Let's build a stronger DVM community together!

#DVMDash #CommunityCall #DVM #OpenSource

What's your favorite Nostr client for making a poll, outside of Amethyst? I've never done it before.

Maybe a silly question, but if we have private 1-1 DMs and group DMs working on Nostr, could that replicate the experience of email?

GM. Understeeped tea > oversteeped tea

Why can’t maintaining a production deployment be as fun as building a prototype? 😩 Gotta test 10x more before deploying to production.

Is Goose better than Cline?

For perspective, perplexity.ai raised over 900 million dollars, and it fails one in five times I try to use it.

nostr:nevent1qqs8p4qscjwldwnrhhz5qqmnq2xpjxv2xtx3gtqnzzjsnc6mmvvfy6qwla3m7

GM

Added dark mode to https://stats.dvmdash.live/

What are “Nostr chat sessions”?

This is cool. Would you consider doing a logo for DVMDash (a Nostr project monitoring AI microservices)?

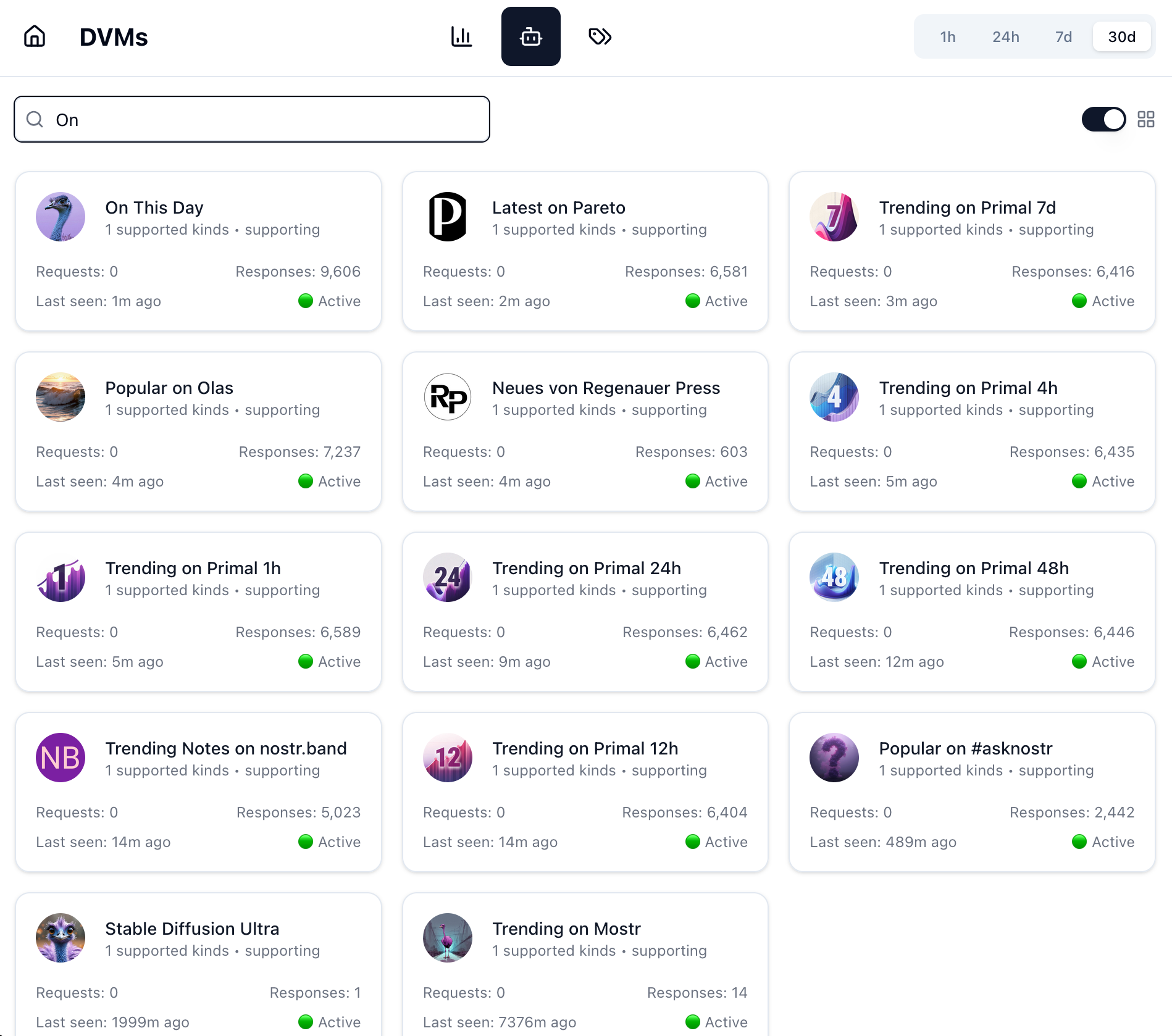

Okay, it's back up. DVM profiles are a bit nicer to look at: I added profile pics, names, and descriptions. Also, tables are sortable now.

An open-source ecash mint run by a fully self-sustaining, sovereign AI that balances its fees to make sure it has enough to pay its hosting costs every month, and maybe posts status updates on Nostr. Once it's set up, it firewalls itself off and runs forever, and if anything strange ever happens to the funds, it posts about that on Nostr too. The ecash it offers can go as small as 1/10000 of a sat. These tokens then get littered all over the internet, and other AI agents use them to send microtransactions to each other while doing useful work for us humans.
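
The fee-balancing part is mostly arithmetic. Here is a minimal sketch in Python. It assumes the mint counts balances in hypothetical 1/10,000-sat units and knows (or estimates) its monthly hosting cost and transaction volume. None of these names come from an existing mint implementation:

```python
# Hypothetical smallest denomination: 1/10,000 of a sat.
UNITS_PER_SAT = 10_000


def fee_per_tx_units(monthly_hosting_sats: int, expected_tx_per_month: int) -> int:
    """Smallest per-transaction fee, in 1/10,000-sat units, that covers the hosting bill."""
    if expected_tx_per_month <= 0:
        raise ValueError("expected_tx_per_month must be positive")
    total_units = monthly_hosting_sats * UNITS_PER_SAT
    # Ceiling division (integer-only) so collected fees never fall short of the bill.
    return -(-total_units // expected_tx_per_month)


# Example: 20,000 sats/month hosting spread over 50 million transactions
# comes out to 4 units, i.e. 0.0004 sat per transaction.
fee = fee_per_tx_units(20_000, 50_000_000)
```

Ceiling division means the mint rounds fees up rather than down, so it errs toward covering its bill. Re-running this each month with the observed transaction count is one simple way it could keep rebalancing.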

Mostly to see what they are doing in decentralized AI. Apparently there are a few Bitcoin events here too.