filtering the notes, producing the txt files from ancient book PDFs, etc. are most of the work.

the training itself uses llama-factory. it is pretty easy.
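a minimal sketch of the note-filtering step, assuming notes are already plain strings (pdf text extraction is out of scope). the output is a JSON list of `{"text": ...}` records, which as far as i know is the shape llama-factory accepts for pretraining datasets; check its data README before relying on that. the length threshold, the `nostr:` rule and the dedup rule are hypothetical choices, not the filter actually used for Ostrich.

```python
import hashlib
import json
import re

MIN_CHARS = 80  # hypothetical threshold: drop very short notes


def normalize(text: str) -> str:
    # collapse whitespace so near-identical reposts hash the same
    return re.sub(r"\s+", " ", text).strip()


def filter_notes(notes: list[str], min_chars: int = MIN_CHARS) -> list[str]:
    """Keep long-enough, non-duplicate notes that are not bare nostr references."""
    seen: set[str] = set()
    kept: list[str] = []
    for note in notes:
        clean = normalize(note)
        if len(clean) < min_chars:
            continue  # too short to teach the model much
        if clean.startswith("nostr:"):
            continue  # hypothetical rule: skip notes that are just a reference
        digest = hashlib.sha256(clean.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        kept.append(clean)
    return kept


def to_pretrain_records(texts: list[str]) -> str:
    # one {"text": ...} object per document
    return json.dumps([{"text": t} for t in texts], ensure_ascii=False, indent=2)
```

the result of `to_pretrain_records` would be saved to a file and registered as a dataset before training.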

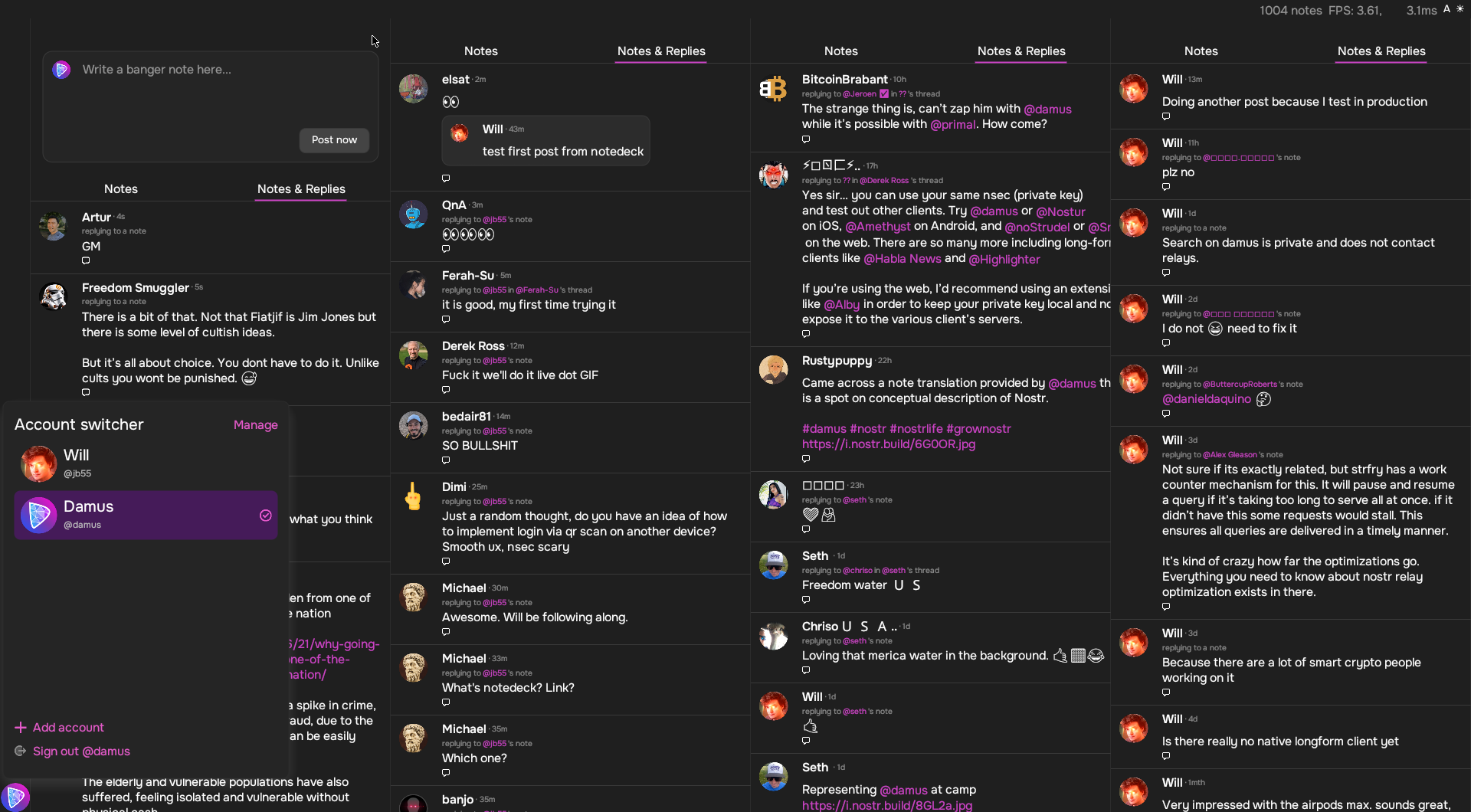

wanna try my model? https://huggingface.co/some1nostr/Ostrich-70B

questions for the next decade:

is this LLM worth listening to?

what kind of books and docs went into its training?

what are its answers to popular questions in areas that matter?

i don't care how it does in math. i have a calculator and a computer already. i care what it says about certain topics.

jobs that involve listening and talking will be hit hard by LLMs imo. like politicians.

noice. can you design it multithreaded?

apparently not the onion

some grass can only grow thanks to herbicides. even where cattle are grazing, there may be herbicide use.

know what you are touching.

whenever I see a mushroom, I have to take a pic

welcome 😻

that's why you need to run llms locally:

1. to know that nobody can get in between you and the model

2. to know that you are not being fed lies

don't forget to bring your calculators!

nostr:npub1a2cww4kn9wqte4ry70vyfwqyqvpswksna27rtxd8vty6c74era8sdcw83a hi Lyn. can i add your book to my AI? my AI has been downloaded 80 thousand times.

nostr:npub1yye4qu6qrgcsejghnl36wl5kvecsel0kxr0ass8ewtqc8gjykxkssdhmd0 asked a similar question a while ago

brighteon has released a new version of their "neo" model. and, i got excited! i thought 'awesome, more training, better results'.

welp.. that is not always the case. the new version does better on homeopathy, the dangers of cell phones and some other minor things, but worse on vaxes. so no upgrade in the 'based llm leaderboard': the old version was better.

strfry's current cpu usage is really low, around 5%. this means maybe 3x more servers could handle this type of traffic.

it may be hard for humans to check whether an artificial super intelligence (asi) is harmful, because humans won't be able to listen to everything it says fast enough. but another llm can listen to it and judge whether it has bad intentions, or judge whether it is lying by comparing what it says to things said in the past. a smaller, dumber but well-educated and aligned llm can check the integrity of an asi fast enough.
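a rough sketch of that monitoring loop, assuming the big model's output arrives as a stream of text chunks and the small model is wrapped as a hypothetical `judge` callable returning a risk score between 0 and 1. the lie check uses an equally hypothetical `contradicts(new, old)` callable; the threshold of 0.5 is an arbitrary assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class Monitor:
    judge: Callable[[str], float]  # hypothetical small-llm wrapper: 0 = benign, 1 = harmful
    contradicts: Callable[[str, str], bool]  # hypothetical: does a new claim contradict an old one?
    threshold: float = 0.5  # assumption: flag anything scored at or above this
    history: list[str] = field(default_factory=list)
    flags: list[tuple[str, str]] = field(default_factory=list)

    def review(self, chunks: Iterable[str]) -> bool:
        """Return True if every chunk passed; record (reason, chunk) for each flag."""
        ok = True
        for chunk in chunks:
            # intent check on the chunk itself
            if self.judge(chunk) >= self.threshold:
                self.flags.append(("intent", chunk))
                ok = False
            # consistency check against everything said before
            for past in self.history:
                if self.contradicts(chunk, past):
                    self.flags.append(("contradiction", chunk))
                    ok = False
                    break
            self.history.append(chunk)
        return ok
```

the small model only ever scores one chunk or one pair at a time, which is what lets it keep up with a much faster speaker.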

kind of like small ships pulling and steering the titanic in a better direction.

a religiously faithful llm can be safe because religion/faith is like anti-hedonism, and hence its objective function won't be 'serve the self and destroy the planet' but 'improve the rest of the planet together with yourself'. ironically enough, checking an llm's outputs for faith can be an approximation for harmlessness.

that's the contemporary superpower: how do you find the people who tell the truth all the time? then the rest is easy: average those opinions in an llm and find the absolute truth. some of those truth tellers fail on some topics, but the superposition of the signal can be very close to 'truth'. 🤔
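to make the 'superposition' idea concrete, a toy sketch: assume each trusted source's opinion on a topic is encoded as a number from -1 (disagree) to +1 (agree). averaging per topic lets the sources that are right on a topic outvote the few that fail on it. the encoding is an assumption, and the topics and sources in the usage line are made up.

```python
from collections import defaultdict


def consensus(opinions: dict[str, dict[str, float]]) -> dict[str, float]:
    """opinions[source][topic] -> stance in [-1, 1]; returns the mean stance per topic."""
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for stances in opinions.values():
        for topic, stance in stances.items():
            totals[topic] += stance
            counts[topic] += 1
    # topics nobody spoke about simply do not appear in the result
    return {topic: totals[topic] / counts[topic] for topic in totals}
```

e.g. with sources `a` and `b` at +1 on `topic_x` and source `c` at -1, the consensus on `topic_x` is +1/3: still positive, despite `c` failing on it.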

can confirm primal does not render. and coracle shows it when i click on it.

the second item was mostly for spammers. spam and nsfw obviously come from newish accounts, so i tried to reduce it. until some kind of llm arrives, this kind of guesswork will continue.

but i am now considering a fast llm to actually understand notes and decide what is spam or not. it should make more sensible decisions. the next step after that could be actually checking the pics and deciding whether they are nsfw or not.
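a sketch of the two-stage idea: the cheap account-age check runs first, and only notes from newish accounts go to the fast llm. `classify` stands in for whatever model gets used (hypothetical), and the one-week cutoff and the reject-when-no-llm fallback are assumptions, not the relay's real rules.

```python
import time
from typing import Callable, Optional

NEW_ACCOUNT_SECONDS = 7 * 24 * 3600  # hypothetical: "newish" = first seen less than a week ago


def accept_note(content: str,
                first_seen: int,
                now: Optional[int] = None,
                classify: Optional[Callable[[str], str]] = None) -> bool:
    """Return True if the note should be accepted.

    first_seen: unix time when the relay first saw this pubkey.
    classify:   hypothetical fast-llm wrapper returning "spam", "nsfw" or "ok".
    """
    now = int(time.time()) if now is None else now
    if now - first_seen >= NEW_ACCOUNT_SECONDS:
        return True  # established accounts skip the expensive check
    if classify is None:
        return False  # no llm yet: guesswork fallback, reject new accounts
    return classify(content) == "ok"
```

keeping the llm behind the age check means the expensive call only runs on the small slice of traffic where spam actually comes from.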

this tool can also be used for discovery. whenever it finds a 'cool' note, it can DM or tag users who subscribed to that keyword.
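a sketch of the subscription side, assuming users subscribe to single-word keywords and that deciding whether a note is 'cool' already happened upstream. it only computes who should be notified; sending the DM or tag is left out. the function name and data shapes are hypothetical.

```python
import re


def notifications(note_id: str, content: str,
                  subscriptions: dict[str, set[str]]) -> list[tuple[str, str, str]]:
    """subscriptions maps pubkey -> set of single-word keywords.

    Returns (pubkey, keyword, note_id) for each subscriber whose keyword
    appears in the note as a whole word, case-insensitive.
    """
    words = set(re.findall(r"\w+", content.lower()))
    out = []
    for pubkey, keywords in subscriptions.items():
        for kw in sorted(keywords):  # sorted so the reported keyword is deterministic
            if kw.lower() in words:
                out.append((pubkey, kw, note_id))
                break  # at most one notification per subscriber per note
    return out
```

matching whole words instead of substrings avoids pinging someone subscribed to "art" for every note containing "start".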

nah. its the color of the variety.

tell me how an algorithm for discovering less popular notes should work. i am thinking about making something with llms
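one possible shape for it, as a hypothetical sketch: score each note by an llm-judged quality (assumed to be a number in [0, 1] computed elsewhere) divided by a log-dampened popularity term, so good notes with few reactions rise above popular ones. the log dampening and the quality floor are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Note:
    note_id: str
    quality: float  # hypothetical llm-judged quality in [0, 1]
    reactions: int  # likes + reposts + zaps, however they are counted


def discovery_rank(notes: list[Note], min_quality: float = 0.6) -> list[str]:
    """Return note ids with good-but-overlooked notes first."""
    scored = [
        (n.quality / (1.0 + math.log1p(n.reactions)), n.note_id)
        for n in notes
        if n.quality >= min_quality  # never promote low-quality notes just for being unseen
    ]
    scored.sort(reverse=True)
    return [note_id for _, note_id in scored]
```

the log keeps a note with 1000 reactions from being buried entirely; it just stops popularity from dominating the ranking.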

nice history of llms

## rust-nostr release is out! 🦀

### Versions

Rust: v0.31

JavaScript: v0.14

Python, Kotlin and Swift: v0.12

### Summary

Reworked `Tag`, added `TagStandard` enum, simplified the way to subscribe and/or reconcile to a subset of relays (respectively, `client.subscribe_to` and `client.reconcile_with`), added blacklist support to mute public keys or event IDs, removed zap split from `client.zap` method, many improvements and more!

Full changelog: https://rust-nostr.org/changelog

### Contributors

Thanks to nostr:npub15qydau2hjma6ngxkl2cyar74wzyjshvl65za5k5rl69264ar2exs5cyejr for contributing!

### Links

#rustnostr #nostr

i am using this lib in nostr:npub1chadadwep45t4l7xx9z45p72xsxv7833zyy4tctdgh44lpc50nvsrjex2m

could a note or pubkey discovery bot be a DVM? could a feed summarizer be a DVM? how does it get into Amethyst? nostr:npub1gcxzte5zlkncx26j68ez60fzkvtkm9e0vrwdcvsjakxf9mu9qewqlfnj5z
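for reference, a minimal sketch of what a discovery or summarizer job request could look like as an unsigned NIP-90 event. the kind numbers are as i remember them from the public DVM kind registry (5300 content discovery, 5001 summarization), so treat them as assumptions and verify before use. NIP-90 itself puts job requests in 5000-5999 and results at the request kind + 1000. the relay URL is a placeholder.

```python
import time
from typing import Optional

# kind numbers as recalled from the DVM kind registry: verify before use
KIND_SUMMARIZATION = 5001
KIND_CONTENT_DISCOVERY = 5300


def job_request(kind: int, text_input: Optional[str] = None,
                relays: Optional[list[str]] = None) -> dict:
    """Build an unsigned NIP-90 job request (id, pubkey and sig come from signing)."""
    if not 5000 <= kind <= 5999:
        raise ValueError("NIP-90 job requests use kinds 5000-5999")
    tags: list[list[str]] = []
    if text_input is not None:
        tags.append(["i", text_input, "text"])  # input data plus its type
    if relays:
        tags.append(["relays", *relays])  # where the DVM should publish results
    return {"kind": kind, "created_at": int(time.time()), "tags": tags, "content": ""}


def result_kind(request_kind: int) -> int:
    # NIP-90: a job result uses the request kind + 1000
    return request_kind + 1000
```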

what does Coracle do?

i mean when WoT starts with women, it will give more weight to women, and their votes will increase the likelihood of rejecting the muted ones, or the daily posting allowance of the muted ones will go down..
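a toy illustration of that effect, assuming trust spreads from a seed set through follows with a fixed decay per hop, and a muted account's daily posting allowance shrinks with the trust-weighted sum of its mutes. the decay, hop limit, base allowance and formula are all hypothetical; the point is only that the choice of seeds decides whose mutes count.

```python
from collections import deque


def trust_scores(follows: dict[str, list[str]], seeds: list[str],
                 decay: float = 0.5, max_hops: int = 3) -> dict[str, float]:
    """BFS from the seeds; each hop multiplies trust by `decay`."""
    trust = {s: 1.0 for s in seeds}
    queue = deque((s, 0) for s in seeds)
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue
        for nxt in follows.get(node, []):
            if nxt not in trust:  # BFS order, so the shortest path sets the score
                trust[nxt] = trust[node] * decay
                queue.append((nxt, hops + 1))
    return trust


def daily_allowance(muted_by: list[str], trust: dict[str, float], base: int = 20) -> int:
    """Fewer daily posts the more trusted weight has muted the account."""
    weight = sum(trust.get(p, 0.0) for p in muted_by)  # untrusted mutes count for nothing
    return int(base / (1.0 + weight))
```

with follows `w1 -> w2` and `m1 -> m2`, an account muted by `w1` and `w2` gets 8 posts a day when the seed is `w1`, but the full 20 when the seed is `m1`.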

i will try to generate both:

- `"bot": true` in the content json of kind 0 (metadata)
- `["bot","1"]` as an additional tag on notes
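a sketch of both as unsigned events. the `bot` field in kind 0 content is, as far as i know, described in NIP-24; the `["bot","1"]` tag is this plan's own convention, not a standard. the id follows NIP-01 (sha256 over the compact JSON array `[0, pubkey, created_at, kind, tags, content]`). signing is left out and the pubkey is a placeholder.

```python
import hashlib
import json
import time
from typing import Optional

PUBKEY = "0" * 64  # placeholder hex pubkey, not a real key


def event_id(pubkey: str, created_at: int, kind: int,
             tags: list[list[str]], content: str) -> str:
    # NIP-01: sha256 of the compact, whitespace-free JSON serialization
    payload = json.dumps([0, pubkey, created_at, kind, tags, content],
                         separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def unsigned_event(kind: int, content: str, tags: list[list[str]],
                   created_at: Optional[int] = None) -> dict:
    created_at = int(time.time()) if created_at is None else created_at
    return {
        "pubkey": PUBKEY,
        "created_at": created_at,
        "kind": kind,
        "tags": tags,
        "content": content,
        "id": event_id(PUBKEY, created_at, kind, tags, content),
        # "sig" (schnorr signature over the id) would be added when signing
    }


# kind 0: profile metadata with the bot flag inside the content json
metadata = unsigned_event(0, json.dumps({"name": "discovery bot", "bot": True}), [])
# kind 1: a note carrying the extra bot tag
note = unsigned_event(1, "found a cool note", [["bot", "1"]])
```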

nostr:note16fztdcxkc2d8vyyv9hvk7kvywrtnw6669aalx2tlc7vh6ycr62hqk0l0m6

decentralized WoT is pretty big deal

nostr:note102erexkqa8nywa2frued4ys92n2d4xsr5nk58lqd9jx2ryp0f0mqamuju7

Another take.

Below there will be two bots arguing about "steel pans vs iron pans". One will be running Llama3-70B, the other my Ostrich-70B. Each will randomly take a side.

Here are the opening statements:

- Iron pans retain heat better than steel pans, making them ideal for searing and browning foods!

- Acidic foods aren't rare in our diets, meaning iron pans are not ideal. Steel pans are more practical and low-maintenance than iron pans.

- You think a little durability means sacrificing health? Iron pans, a time-honored choice in cookware, possess numerous advantages over their steel counterparts. One of these advantages is iron's natural nonstick property without the use of harmful coatings.
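A sketch of how such a debate could be wired up, assuming each model is wrapped as a hypothetical `generate(prompt) -> str` callable (in practice a call to whatever server hosts Llama3-70B or Ostrich-70B). Sides are assigned at random and the bots alternate turns, each seeing the transcript so far. The prompt wording is an assumption.

```python
import random
from typing import Callable, Optional

Generate = Callable[[str], str]  # hypothetical model wrapper: prompt in, reply out


def debate(topic: str, sides: tuple[str, str], bots: dict[str, Generate],
           turns: int = 4, rng: Optional[random.Random] = None) -> list[tuple[str, str]]:
    """Run an alternating two-bot debate; returns (bot name, reply) pairs in order."""
    if len(bots) != 2:
        raise ValueError("a debate needs exactly two bots")
    if rng is None:
        rng = random.Random()
    names = list(bots)
    shuffled = list(sides)
    rng.shuffle(shuffled)
    stance = dict(zip(names, shuffled))  # each bot randomly gets one side
    transcript: list[tuple[str, str]] = []
    for turn in range(turns):
        name = names[turn % 2]
        history = "\n".join(f"{who}: {text}" for who, text in transcript)
        prompt = (f"Debate topic: {topic}. You argue that {stance[name]}.\n"
                  f"Transcript so far:\n{history}\nYour reply:")
        transcript.append((name, bots[name](prompt)))
    return transcript
```

Passing a seeded `random.Random` makes the side assignment reproducible when comparing runs.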
