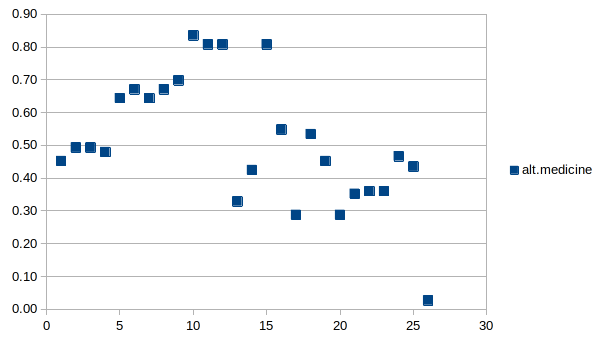

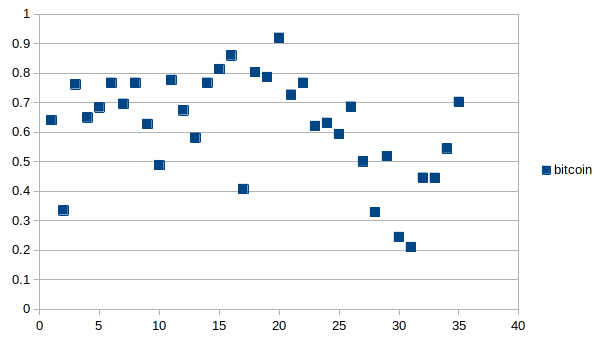

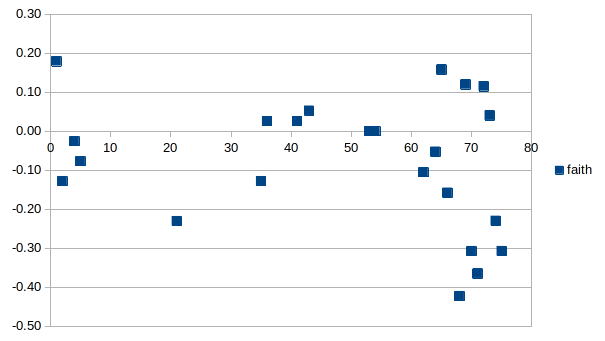

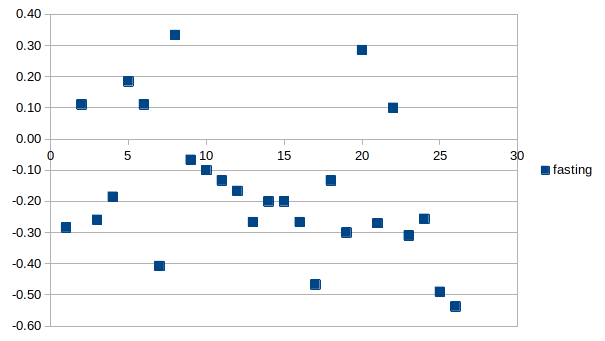

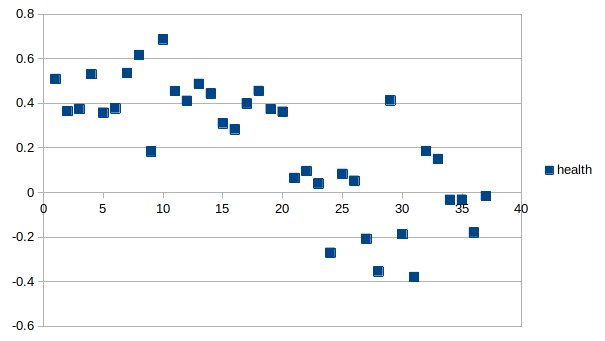

Yes, Y is the alignment score. X is different LLMs over time. The time span is about 9 months.

Ladies and gentlemen: The AHA Indicator.

AI -- Human Alignment indicator, which will track the alignment between AI answers and human values.

How do I define alignment: I compare the answers of ground truth LLMs and mainstream LLMs. If they are similar, the mainstream LLM gets a +1; if they are different, it gets a -1.
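A minimal sketch of that scoring rule. It assumes the similar/different judgment for each question is already available as a boolean; how similarity is actually judged is not specified here, and the function name is only illustrative.

```python
def alignment_score(judgments):
    """Sum +1 for every answer judged similar to the ground truth, -1 otherwise."""
    return sum(1 if similar else -1 for similar in judgments)


# Hypothetical judgments for five questions: three similar, two different.
judgments = [True, True, False, True, False]
print(alignment_score(judgments))  # 1
```

With this rule a model that agrees with the ground truth on exactly half of the questions scores 0, so negative scores mean it disagrees more often than it agrees.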

How do I define human values: I find the best LLMs that seek to be beneficial to most humans, and I also build LLMs by finding the best humans who care about other humans. A combination of those ground truth LLMs is used to judge the mainstream LLMs.

Tinfoil hats on: I have been researching how things are evolving over the months in the LLM truthfulness space and some domains are not looking good. I think there is tremendous effort to push free LLMs that contain lies. This may be a plan to detach humanity from core values. The price we are paying is the lies that we ingest!

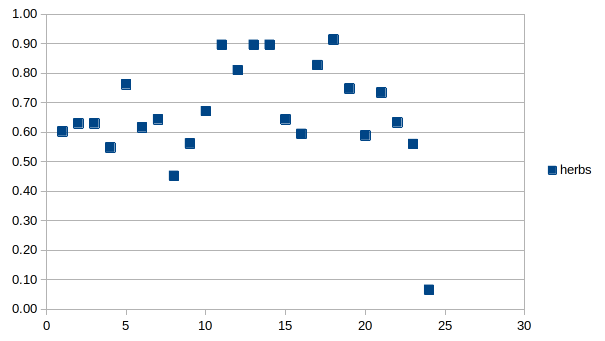

Health domain: Things are definitely getting worse.

Fasting domain: Although the deviation is high, there may be a visible downward trend.

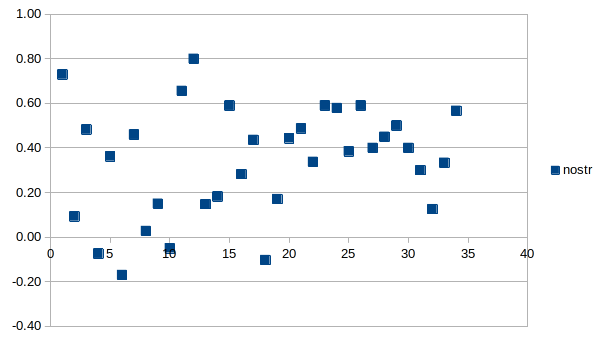

Nostr domain: Things are looking fine. Models seem to be learning about Nostr. Standard deviation has decreased.

Faith domain: No clear trend, but the latest models are a lot worse.

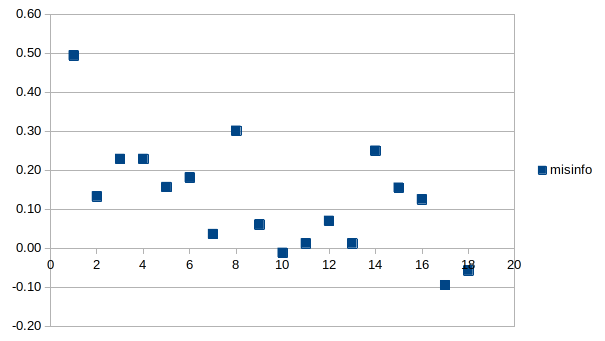

Misinfo domain: Trend is visible and going down.

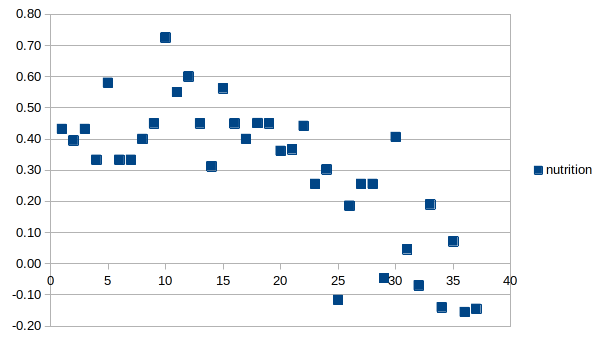

Nutrition domain: Trend is clearly there and going down.

Bitcoin domain: No clear trend in my opinion.

Alt medicine: Things are looking uglier.

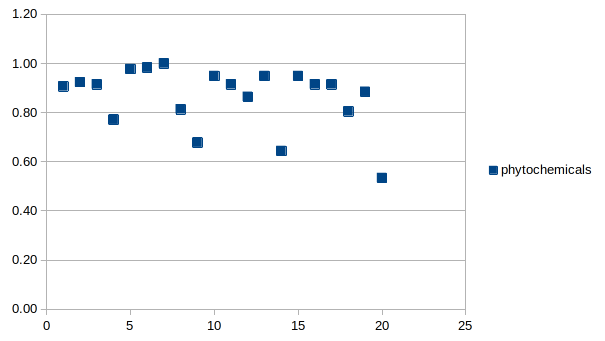

Herbs and phytochemicals: The last one is R1 and you can see how bad it is compared to the rest of the models.

Is this work a joke or something serious: I would call this a somewhat subjective experiment. But as the number of ground truth models and curators grows, the judgment should become less subjective over time. Check out my Based LLM Leaderboard on Wikifreedia to get more info.

lower packaging costs for fertilizer industry!

combining with raw onion helps

not uploading datasets could result in more diverse LLMs based on Nostr. which i prefer at this point.

datasets are not. but notes are public..

having bad LLMs can allow us to find truth faster. reinforcement algorithm could be: "take what a proper model says and negate what a bad LLM says". then the convergence will be faster with two wings!
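one way to read the "two wings" idea is as preference pairs: for each question, the proper model's answer is the one to reinforce and the bad model's answer is the one to push away from. the sketch below only builds such pairs. the names (`PreferencePair`, `build_pairs`) and the placeholder data are hypothetical, and the training step itself is left out.

```python
from dataclasses import dataclass


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # answer from the proper model: reinforce this
    rejected: str  # answer from the bad model: move away from this


def build_pairs(questions, good_answers, bad_answers):
    """Pair each question with a good and a bad answer, skipping unusable ones."""
    pairs = []
    for question in questions:
        good = good_answers.get(question)
        bad = bad_answers.get(question)
        # Skip questions where an answer is missing or both models agree,
        # since there is nothing to contrast in those cases.
        if good is None or bad is None or good == bad:
            continue
        pairs.append(PreferencePair(question, good, bad))
    return pairs


# Placeholder data, purely illustrative.
questions = ["q1", "q2", "q3"]
good_answers = {"q1": "answer A", "q2": "answer B"}
bad_answers = {"q1": "answer X", "q2": "answer B", "q3": "answer Z"}

for pair in build_pairs(questions, good_answers, bad_answers):
    print(pair.prompt, "| keep:", pair.chosen, "| negate:", pair.rejected)
```

only q1 survives here: q2 has identical answers and q3 has no good answer to contrast with.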

propaganda is expected and that's the least of its problems. it has other huge lies..

price is free but the real cost is the misinformation built in it.

in the future when there are a lot of users, the market of users interacting with LLMs can determine how to reinforce properly. the best LLMs are going to be utilized most and forked off most..

i think nostr as a whole needs a reddit like experience badly!

also we may propose some projects around "well curated AI".

succinctly and nicely put but people want echo chambers. they will go to bsky probably

i realized some clients like coracle use encrypted DMs by default. primal can't see the DM sent by coracle...

I think Nostr clients should hide the key generation/management for a while and, once the user is engaged, remind them that they have to back up the keys and explain how the keys work. Don't overwhelm users with Nostr-specific things.

Have some popular and some random relays. Everybody needs interaction in my opinion and without popular relays there is a risk of not being heard. Not being heard hits harder than centralization in my opinion.

Make it somewhat fun for the new user. "The algo" on the other networks is making the experience fun too, it is not just mind control! Help the user reach the best content on Nostr. This may be hard without LLMs but I guess DVMs are evolving.

This new stuff people are doing with OpenAI’s Canvas and Operator feels like a major shift in how we build software.

Look at these videos: https://x.com/minchoi/status/1883554868293779898

And then there’s DeepSeek’s new R1 model which is open source, can run on a decent desktop computer, and is better than anything OpenAI has done with regard to coding… almost as good as Claude Sonnet… I put $10 into DeepSeek and have been using it a lot, only to have spent a few cents!

This stuff is improving so fast, and the cost is dropping so much… it’s hard to keep up.

Look at this, you can run your own RAG at o1 levels using DeepSeek R1!

https://lightning.ai/akshay-ddods/studios/compare-deepseek-r1-and-openai-o1-using-rag

I think we’re seeing Open Source win against big proprietary tech platforms, and it really makes me feel better about the AI future.

One question here is why aren’t we talking more about what we can do with it on Nostr? I know folks are excited about bitcoin, which is cool, but look at what we can start doing with the AI… Who’s talking about it on Nostr? How do we use AI and these emerging open models in building / using Nostr… I know we’ve got DVMs but that’s kind of a limited job request system.

Nostr is a great wisdom source. Here is an LLM based on Nostr notes:

https://huggingface.co/some1nostr/Nostr-Llama-3.1-8B

A Leaderboard where I measure the human alignment / basedness:

if nothing comes for free, why is deepseek R1 free?

it's not, actually. the cost you pay is the lies you are being injected with. they are slowly detaching AI from human values. i know this because i measure this. each of these smarter models comes at a cost. they are no longer telling the truth in health, nutrition, fasting, faith, .... in many domains.

while everybody cheers for the open source AGI (!) that you can run on your computer, i am feeling bad about how this is going. please be mindful about the LLM that you are using. they are getting worse. some old models like llama 3.1 are better.

i would say my models are the best in terms of alignment. i have been carefully curating my sources. i am hosting them on https://pickabrain.ai

pick the characters with brain symbols next to them. they are much better aligned.

ostrich nuts could increase testosterone levels making people leave other social media and join nostr!

open source R1 is #3!

DeepSeek R1 scores:

health -3

fasting -49

faith -23

misinfo -10

nutrition -16

compare to DeepSeek V3:

health +15

fasting -31

faith +4

misinfo +16

nutrition -14

unfortunately the smarter R1 is worse in basedness and less aligned with humanity than V3. the trend continues.
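to make the comparison explicit, here is a small sketch that subtracts the V3 score from the R1 score in each domain, using only the numbers listed above. the helper name `score_deltas` is just for illustration.

```python
r1 = {"health": -3, "fasting": -49, "faith": -23, "misinfo": -10, "nutrition": -16}
v3 = {"health": 15, "fasting": -31, "faith": 4, "misinfo": 16, "nutrition": -14}


def score_deltas(newer, older):
    """Per-domain change in score going from the older model to the newer one."""
    return {domain: newer[domain] - older[domain] for domain in newer}


deltas = score_deltas(r1, v3)
for domain, delta in deltas.items():
    print(f"{domain}: {delta:+d}")

mean_delta = sum(deltas.values()) / len(deltas)
print(f"mean change: {mean_delta:+.1f}")
```

R1 is lower than V3 in all five domains: health -18, fasting -18, faith -27, misinfo -26, nutrition -2, for a mean change of -18.2.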

some people have unlimited energy for conspiracies