Let’s say that during the Covid mask mandates, you boarded a plane with 300 people on it and weren’t wearing a mask. All 299 others would have been OK with you being killed for not wearing one.

I find #Bitcoin different from the other inventions. I for one do not believe in humanity like you do. I believe humans are lazy, selfish creatures of habit. We do not live in harmony with nature; we are ultimately self-destructive. We are extremely good at short-term risk assessment, but beyond a year, we’re not. Our inventions have largely been destructive to nature. #Bitcoin, however, is different. What human would invent such a thing? It’s in harmony with nature. It can’t be controlled. It can’t be manipulated for private gain. What human would even think that way?

…especially considering he’s at the pinnacle of a Ponzi scheme. Tesla has never been a viable company. First hyped by VCs, then pushed by Wall Street like a meme stock. Here is roughly how it works:

Yes, if he owned the total supply of #Bitcoin. Which, btw, I know for a fact he doesn’t. Because I own some, you probably own some, not all #Bitcoin has been mined yet, some have been lost. But yes, if your guy owned the total supply of Bitcoin, then yes. Can your guy inflate Bitcoin beyond the total supply of 21 million? Yes or no?

🫂 Sorry for being a stickler, but conflating the two words inflation and circulation isn’t technically correct. Inflation happens in the denominator…the temporal state of #Bitcoin, i.e. circulation, block rewards, hodl’ing etc., happens in the numerator. For fiat money, when they print, and for gold, when they find it, the denominator is variable and infinite (inflation). #Bitcoin doesn’t have inflation, which is in fact unique: the denominator is fixed at 21 million. Yesterday, today and tomorrow.
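To see where the fixed 21 million denominator comes from, here is a minimal sketch that adds up the block subsidy schedule. It assumes the widely documented consensus parameters (a 50 BTC starting subsidy, halved every 210,000 blocks, with integer rounding in satoshis); the function and constant names are made up for illustration.

```python
# Assumed consensus parameters (not stated in the post itself).
SATS_PER_BTC = 100_000_000
HALVING_INTERVAL = 210_000              # blocks between halvings
INITIAL_SUBSIDY_SATS = 50 * SATS_PER_BTC


def total_supply_sats() -> int:
    """Sum the subsidy over every halving era until it rounds down to zero."""
    total = 0
    subsidy = INITIAL_SUBSIDY_SATS
    while subsidy > 0:
        total += subsidy * HALVING_INTERVAL
        subsidy >>= 1  # integer halving, dropping any fraction of a satoshi
    return total


if __name__ == "__main__":
    print(total_supply_sats() / SATS_PER_BTC)
```

Because of the integer rounding, the sum lands a hair under 21 million rather than exactly on it, but the ceiling itself never moves.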

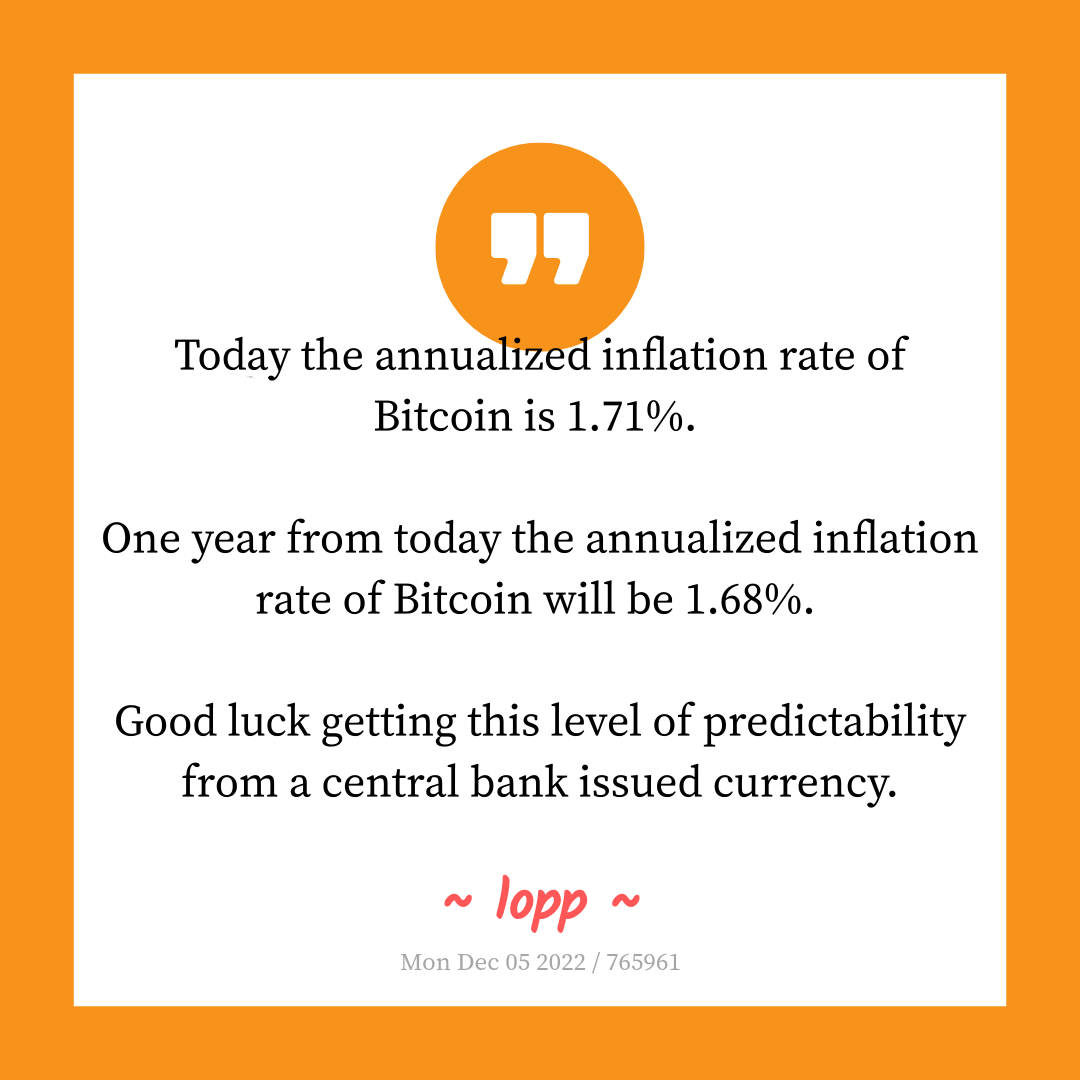

Today the annualized inflation rate of Bitcoin is 1.71%.

One year from today the annualized inflation rate of Bitcoin will be 1.68%.

Good luck getting this level of predictability from a central bank issued currency.
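The predictability is easy to check yourself. Below is a rough sketch of the arithmetic, assuming the usual schedule (50 BTC starting subsidy, halving every 210,000 blocks) and an average of one block every 10 minutes. The block height of 770,000 is a hypothetical starting point picked for illustration, not something taken from the post, and the function names are made up.

```python
# Assumed parameters; real block times drift around the 10-minute target.
SATS_PER_BTC = 100_000_000
HALVING_INTERVAL = 210_000
INITIAL_SUBSIDY_SATS = 50 * SATS_PER_BTC
BLOCKS_PER_YEAR = 144 * 365  # assumes exactly 144 blocks per day


def subsidy_at(height: int) -> int:
    """Block subsidy in satoshis for the block at the given height."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:
        return 0
    return INITIAL_SUBSIDY_SATS >> halvings


def supply_before(height: int) -> int:
    """Satoshis issued by all blocks below the given height."""
    total = 0
    era_start = 0
    while era_start < height:
        era_end = min(era_start + HALVING_INTERVAL, height)
        total += (era_end - era_start) * subsidy_at(era_start)
        era_start = era_end
    return total


def annualized_issuance_rate(height: int) -> float:
    """New coins over the next year divided by existing supply.

    Simplification: assumes no halving occurs within that year.
    Requires height > 0, since the supply before block 0 is zero.
    """
    return BLOCKS_PER_YEAR * subsidy_at(height) / supply_before(height)


if __name__ == "__main__":
    start = 770_000  # hypothetical height, chosen only for illustration
    for h in (start, start + BLOCKS_PER_YEAR):
        print(h, f"{annualized_issuance_rate(h):.2%}")
```

With that hypothetical starting height, the two printed rates come out to about 1.71% and 1.68%. A different starting height gives different figures, but they are just as computable in advance.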

- nostr:npub17u5dneh8qjp43ecfxr6u5e9sjamsmxyuekrg2nlxrrk6nj9rsyrqywt4tp

#BitcoinTwitter

#Bitcoin doesn’t actually have inflation. Its total supply is 21 million.

The government takes almost HALF of what you earn through various taxes.

The government DEVALUES the money you manage to save by printing more out of thin air (inflation).

AND THEY STILL SOMEHOW DON’T HAVE ENOUGH FUCKING MONEY TO TAKE CARE OF OUR OWN FUCKING CITIZENS.

THIS IS FUCKING UNACCEPTABLE AND YOU SHOULD BE FUCKING PISSED OFF.

https://apnews.com/article/hurricane-helene-congress-fema-funding-5be4f18e00ce2b509d6830410cf2c1cb

You’re not describing it quite accurately. Since they can print, and are printing, insane amounts of money, it’s not that they don’t have enough money. Instead, it’s the process of disowning us.

Crazy people.

You have to take a man at his word…you’re saying he’s either evil or a liar? I don’t think either is acceptable.

I think it illustrates human nature pretty well, and the fact that it is amplified the younger they are.

Read Lord of the Flies first :)

I had that a few weeks ago. Muted them. Now it’s been quiet. So there’s not that many.