CC: nostr:nprofile1qqsrhuxx8l9ex335q7he0f09aej04zpazpl0ne2cgukyawd24mayt8gprfmhxue69uhkcmmrdd3x77pwve5kzar2v9nzucm0d5hszxnhwden5te0wpuhyctdd9jzuenfv96x5ctx9e3k7mf0qydhwumn8ghj7un9d3shjtnhv4ehgetjde38gcewvdhk6tc4rdlnm, while searching for the Nostr logo, I found several repos, Figma designs and websites. Is there an "official" one?

I think there’s no official one as such.

It’s a good point. nostr does seem like the perfect platform for social gaming.

The problem comes when you have to modify something that the AI built. If nobody knows how it works, it’s basically useless, right?

I haven't tried to set one up locally; it's probably easier to do it on a VPS that you can point a domain name at. I've had luck with #nostream using their Docker setup.

Progress seems to involve a lot of Websocket subscriptions that might or might not suddenly go silent.
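One way to notice a subscription that has gone quiet is a small "silence watchdog". This is a minimal sketch under assumptions: the class name, the `max_silence_s` parameter, and the idea of calling `touch()` from your WebSocket message handler are all hypothetical, not part of any Nostr library. Note that a relay can legitimately send nothing for a long time when there are no matching events, so in practice you would pair this with WebSocket pings or a periodic canary event so that healthy connections keep producing traffic.

```python
import time
from typing import Callable


class SilenceWatchdog:
    """Flags a connection as stale if nothing has arrived for `max_silence_s` seconds.

    Hypothetical helper: call touch() whenever any frame arrives (EVENT, EOSE,
    or a pong) and poll is_stale() from a timer. When it returns True, close
    the socket, reconnect, and re-send your REQ subscriptions.
    """

    def __init__(self, max_silence_s: float, clock: Callable[[], float] = time.monotonic):
        self.max_silence_s = max_silence_s
        self._clock = clock
        # Start the timer at construction so a connection that never
        # receives anything is also eventually flagged.
        self._last_seen = clock()

    def touch(self) -> None:
        # Record that we just received something from the relay.
        self._last_seen = self._clock()

    def is_stale(self) -> bool:
        return self._clock() - self._last_seen > self.max_silence_s
```

The injectable `clock` is only there so the logic can be tested without waiting in real time.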

I give it a 25–50% chance, however, that at some point in the next few years there will be an act of the U.S. Congress, or a big court case, that reduces the ability of Google and Apple to censor apps. There's also the "progressive web app" thing, which so far, as far as I can tell, is a disappointment, because you don't get access to the APIs you really need -- but it's possible that this matures into something more powerful.

This is why rizful.com is web-only. Going through app-store approval is just not something I can do with my life anymore.

Is it possible the big dip below 80k was the result of North Korea fire-selling $1.5B in ETH after the hack? Or is $1.5B small change and not actually able to move the market like that?

We need a marketing campaign to get normie influencers onto #nostr and get them 100k sats in zaps the first day they get on.

nostr:npub1xtscya34g58tk0z605fvr788k263gsu6cy9x0mhnm87echrgufzsevkk5s This is what most images look like to me in #damus before I tap on them.

nostr:npub1xtscya34g58tk0z605fvr788k263gsu6cy9x0mhnm87echrgufzsevkk5s Trying out Damus again on iPhone. I'm finding that all the images (or most of them) are blurred until I tap on them. Is this related to trying to block shocking/bad images, or is it something else, like lazy loading?

This behavior might not work for normies: when they use an application, they have to start seeing images within about 500ms or they will get bored and go back to using Instagram. It's a lizard brain thing.

Also going for a whale watch with the boys, and a bike ride in the Hollywood Hills.

Trying to decide on a server-side Node.js state management strategy.

Highly recommend you test it with nostr:npub1yxp7j36cfqws7yj0hkfu2mx25308u4zua6ud22zglxp98ayhh96s8c399s .... https://supertestnet.github.io/nwc_tester/

nostr:npub1l5r02s4udsr28xypsyx7j9lxchf80ha4z6y6269d0da9frtd2nxsvum9jm Probably knows both the answer and the ultimate solution if it is blocked.

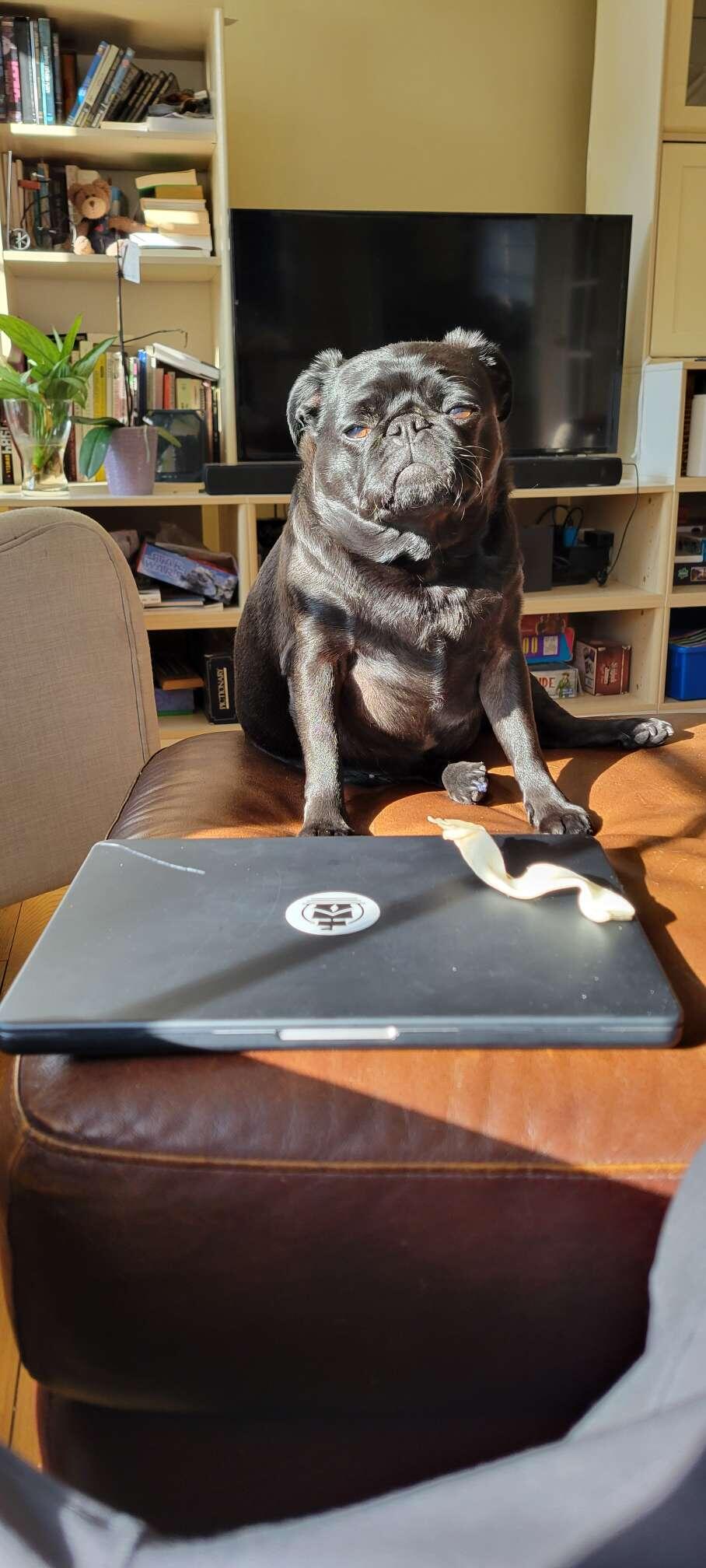

You running Linux on that laptop? Have you found a particular laptop linux setup that you like? Drivers and sleep are what I'm afraid of....

So we have been in the trenches with this issue for a few months. We're currently using #nostream -- note that you'll need to make a lot of changes to the configuration file to change various "rate limiting" limits. It's quite tricky, because you probably won't know that you've hit these limits until your users actually start using nostr:npub19hg5pj5qmd3teumh6ld7drfz49d65sw3n3d5jud8sgz27avkq5dqm7yv9p a lot! But that's not the main problem -- the challenge we've actually been having is KEEPING THE CONNECTION ALIVE between our server and our relay, and that's our development focus right now. It's tricky because these websocket connections don't last forever. I think you really need to set up constant monitoring: we have quite complicated monitoring in place with a demo NWC user who makes a 1 sat payment every few minutes, and we get alarms if it fails. I think there is generally a need for some BEST PRACTICES to be established for LONG-LIVED connections to relays.
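The "demo user makes a 1 sat payment every few minutes, alarm if it fails" setup can be reduced to a small state machine. This is a hedged sketch, not the monitoring described above: `ProbeMonitor`, its `threshold` parameter, and the `alarm` callback are hypothetical names, and actually sending the test payment (over NWC or otherwise) is left to your own code. The idea is to alarm once after N consecutive failures, rather than on every single blip, and to re-arm after the next success.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProbeMonitor:
    """Tracks results of a periodic synthetic probe (e.g. a tiny test payment).

    `alarm` is called once when `threshold` consecutive probes have failed;
    the monitor re-arms after the next success, so one outage -> one alarm.
    """
    threshold: int
    alarm: Callable[[int], None]
    consecutive_failures: int = 0
    alarmed: bool = False

    def record(self, ok: bool) -> None:
        if ok:
            self.consecutive_failures = 0
            self.alarmed = False  # re-arm for the next outage
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.threshold and not self.alarmed:
            self.alarmed = True
            self.alarm(self.consecutive_failures)
```

Usage: on each scheduled probe, call `monitor.record(ok)` with whether your (hypothetical) `send_test_payment()` succeeded, and have `alarm` page someone or post to a chat channel.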

Oh, and yes, in your #nostream configuration file, you'll probably want to disallow some kinds, so your relay only deals with NWC stuff. But again, this is not straightforward: I think some clients won't use your relay if you limit the kinds too much?
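The filtering rule itself is simple; the hard part is the client-compatibility question above. Below is a sketch of the logic as a plain Python predicate. It is not nostream's configuration format (whose exact setting names aren't reproduced here), and `accept_event` is a hypothetical name. The kind numbers are the NIP-47 ones for wallet info (13194), requests (23194), and responses (23195); newer NIP-47 revisions also define notification kinds, so check the current spec and add them if you use notifications.

```python
from typing import Any, FrozenSet, Mapping

# NIP-47 (Nostr Wallet Connect) kinds: 13194 = wallet info,
# 23194 = request, 23195 = response. Notification kinds are not
# included here; add them if your wallet service emits them.
NWC_KINDS: FrozenSet[int] = frozenset({13194, 23194, 23195})


def accept_event(event: Mapping[str, Any], allowed_kinds: FrozenSet[int] = NWC_KINDS) -> bool:
    """Return True if a relay restricted to NWC traffic should accept `event`."""
    kind = event.get("kind")
    return isinstance(kind, int) and kind in allowed_kinds
```

If some clients refuse to use a relay that rejects their other events, widening `allowed_kinds` is the knob to experiment with.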

Yeah nostr:npub1yzvxlwp7wawed5vgefwfmugvumtp8c8t0etk3g8sky4n0ndvyxesnxrf8q is quite nice for tablet use.

you might be better off just closing and re-opening channel(s) .... see here: https://docs.megalithic.me/running-a-full-node/do-need-to-rebalance-channels

Trying to balance public Lightning channels on LND: an experience full of challenges and surprises! ⚡️ For more information, check out the article written by nostr:npub1xzuej94pvqzwy0ynemeq6phct96wjpplaz9urd7y2q8ck0xxu0lqartaqn

nostr:naddr1qvzqqqr4gupzqv9enyt2zcqyug7f8nhjp5r0skt5ayzrl6ytcxmug5q03v7vdcl7qq2k75jxv4p5zvrt2dk8qaz8w9krwje4tf0k582ualj

I actually noticed, at least with nostr:npub1yzvxlwp7wawed5vgefwfmugvumtp8c8t0etk3g8sky4n0ndvyxesnxrf8q (which uses quite a lot of browser memory), that Brave was MUCH slower than Chrome. I think Chrome does some magic memory-management stuff that they aren't sharing with Chromium.

I was a long-time Brave user, but, really, if they are trying to pump altcoins and other nonsense, can you really trust them?

To get to the next stage of price with Bitcoin, where it goes to $150k and beyond, we first need something to break in the broader economy, and the US government to go all-in on stimulus. The odds of this happening at some point in the next 5 years are 100%. The odds of it happening in the next 6 months are also fairly good, but not 100%.

I don't think you can. Anyone could build a service that crawls the internet, finds 1m domains, and quickly checks them against your list of hashes, and they'd then have a (very accurate!) list of exactly which domains are offering CSAM.... they could even build a website that just links to all the domains!!

Because CSAM is a serious issue, a really serious issue, I don't think ANYONE can take the risk of possibly publishing the actual URLs and scores (or even domains and scores) publicly.... right?

Yes. It's a good idea. For our image scoring service, we're actually storing the hashes of the URLs instead of the URLs. And I thought, what if we could PUBLISH the hashes of the URLs instead of the URLs themselves... But the problem is that hashing a URL takes less than a millisecond, so it would be trivial to "construct a porn map of nostr" if we provided the hashes, right?
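To make the "trivial to reverse" point concrete: if a list of plain URL hashes were published, anyone who crawls URLs can hash each candidate and check for membership. A minimal sketch, assuming the publisher used unsalted SHA-256 over the UTF-8 URL (the scheme and the function names are hypothetical):

```python
import hashlib
from typing import Iterable, List, Set


def url_hash(url: str) -> str:
    # Hypothetical publishing scheme: unsalted SHA-256 hex digest of the URL.
    return hashlib.sha256(url.encode("utf-8")).hexdigest()


def reverse_published_hashes(published: Set[str], crawled_urls: Iterable[str]) -> List[str]:
    """Return the crawled URLs whose hashes appear on the published list.

    Hashing each candidate is cheap, so a crawler can "de-anonymize" the whole
    list with a simple set lookup per URL.
    """
    return [u for u in crawled_urls if url_hash(u) in published]
```

Adding a secret key (e.g. an HMAC instead of a bare hash) would block this, but then only key holders can check URLs, so the list is no longer public, which is the same conclusion as above.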

also posted this to the Github thread: https://github.com/nostr-protocol/nips/issues/315#issuecomment-2688964871

My understanding is that we can't do ANY public blacklisting of domains or URLs, because publishing public events with domain scores would essentially be providing a "public map of naughty content on nostr".

If we publish "domain blacklists" or "url blacklists".... then there's no reason that clients couldn't use it in the OPPOSITE way that we intend, i.e., purposely assembling notes which contain images with "bad" scores.

Now, if only porn and violence were involved, there wouldn't be a huge problem, but there's always a chance that we might be issuing bad scores to domains because of CSAM that has been caught with high scores.... and you definitely cannot, and should not, ever publish a list of URLs or domains that are distributing CSAM!

So unless I am missing something, there is no feasible way to PUBLICLY publish domain or URL scores.

However, each individual application (a relay, or a client-facing application like Yakihonne or Coracle or Primal or Damus), definitely COULD assemble its own array of "domain scores"..... and this brings me to my second point...

In my proposal, I assert that "image scoring" is CPU-, GPU-, and bandwidth-intensive.

For nerds like us, we know what that means. Normies, on the other hand, have no idea what that means -- but they do have an EXTREMELY KEEN sense of something else: LATENCY.

A normie who loads a Nostr app where the notes take 5 seconds to load, because of some image scoring that is going on.... they're not going to wait 5 seconds -- they're going to go back to being abused by Facebook or Instagram. It's well-known that for normies, PAGE LOAD SPEED is much, much, much more important than any other factor in how they make decisions about what to do on the internet. It's a lizard-brain thing.

So I think that:

We need to score images. I don't want to go to the #asknostr tag and see horribly psyche-scarring images/video.

We need to use lots of clever techniques to ASYNCHRONOUSLY score images before our user attempts to load a note. When our user wants to see a note, we want the image score to be pre-computed.
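One way to get "pre-computed by the time the user looks" is to enqueue image URLs for scoring as soon as notes are ingested, and have the display path only ever do a non-blocking cache lookup. A minimal asyncio sketch under assumptions: `PrescoreCache` is a hypothetical name, and `scorer` stands in for whatever async call you make to a scoring model or API, returning a score in [0, 1].

```python
import asyncio
from typing import Awaitable, Callable, Dict, List, Optional, Set

Scorer = Callable[[str], Awaitable[float]]


class PrescoreCache:
    """Scores image URLs in background workers so reads never wait on scoring."""

    def __init__(self, scorer: Scorer, workers: int = 4):
        self._scorer = scorer
        self._scores: Dict[str, float] = {}
        self._pending: Set[str] = set()
        self._queue: "asyncio.Queue[str]" = asyncio.Queue()
        self._workers = workers
        self._tasks: List[asyncio.Task] = []

    def start(self) -> None:
        self._tasks = [asyncio.create_task(self._worker()) for _ in range(self._workers)]

    def enqueue(self, url: str) -> None:
        # Call this when a note is ingested, long before anyone views it.
        if url not in self._scores and url not in self._pending:
            self._pending.add(url)
            self._queue.put_nowait(url)

    def lookup(self, url: str) -> Optional[float]:
        # Non-blocking: None means "not scored yet"; the UI decides what to do.
        return self._scores.get(url)

    async def _worker(self) -> None:
        while True:
            url = await self._queue.get()
            try:
                self._scores[url] = await self._scorer(url)
            except Exception:
                pass  # leave unscored; a later enqueue() can retry it
            finally:
                self._pending.discard(url)
                self._queue.task_done()

    async def drain(self) -> None:
        # Wait until everything enqueued so far has been processed.
        await self._queue.join()

    async def stop(self) -> None:
        for t in self._tasks:
            t.cancel()
        await asyncio.gather(*self._tasks, return_exceptions=True)
```

What the UI shows when `lookup()` returns `None` (blur, placeholder, or hide) is a separate policy decision; the point of the design is that it never blocks page load on the scorer.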

Individual applications (i.e., individual relays or client-facing Nostr apps).... after scoring, say, 1000 images from a domain, could ALSO add that domain to a PRIVATE blacklist... and this will help them provide pre-scored notes to their users on a low-latency basis, because, once they have a domain on a blacklist, maybe they can STOP scoring images from that domain and just reject them.
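A private per-domain blacklist like this can be kept as a couple of counters per domain. This is a sketch with hypothetical thresholds: the 1000-image minimum comes from the paragraph above, while `bad_score` and `bad_ratio` are illustrative assumptions you would tune. The blacklist lives only in the application's own memory or database and is never published.

```python
from collections import defaultdict
from typing import DefaultDict, Set
from urllib.parse import urlsplit


class DomainReputation:
    """Private, per-application domain blacklist built from image scores.

    Hypothetical policy: once at least `min_samples` images from a domain
    have been scored and the share scoring >= `bad_score` reaches
    `bad_ratio`, the domain is blacklisted, so later images from it can be
    rejected without spending CPU/GPU on scoring them.
    """

    def __init__(self, min_samples: int = 1000, bad_score: float = 0.8, bad_ratio: float = 0.5):
        self.min_samples = min_samples
        self.bad_score = bad_score
        self.bad_ratio = bad_ratio
        self._seen: DefaultDict[str, int] = defaultdict(int)
        self._bad: DefaultDict[str, int] = defaultdict(int)
        self.blacklist: Set[str] = set()

    @staticmethod
    def domain_of(url: str) -> str:
        # urlsplit().hostname is already lowercased.
        return urlsplit(url).hostname or ""

    def is_blacklisted(self, url: str) -> bool:
        return self.domain_of(url) in self.blacklist

    def record(self, url: str, score: float) -> None:
        d = self.domain_of(url)
        self._seen[d] += 1
        if score >= self.bad_score:
            self._bad[d] += 1
        if self._seen[d] >= self.min_samples and self._bad[d] / self._seen[d] >= self.bad_ratio:
            self.blacklist.add(d)
```

A whitelist could be built the same way, by tracking domains whose images almost never score badly.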

But: since I believe we cannot safely PUBLISH blacklists (for the safety reasons described above), each application MUST assemble its own scores in order to build its OWN blacklists (and potentially whitelists).

That's why I think our API, and hopefully a handful of competing APIs, might be the only real solution to allow Nostr client applications (and relays) to assemble their own scores.... Because, for the reasons outlined in my proposal, it's not feasible for 100+ relays and client applications to compute their own scores independently... these scores really should be a shared (but not publicly published!) resource....

Seems like a company that enables Lightning network transfers? https://docs.amboss.tech/

I'll dig into it. Many thanks!

Oh, I just sent that link to show you the BitcoinVN node... the data about that node is shown on the amboss.com website.... but what I was trying to send was about BitcoinVN, not Amboss!

If you want to get technical, you can run your own models, but you basically need GPUs or quite a new and fancy Mac...