Our IT guy at work likes synology. He gave me one of his older systems and it works well. Also using in conjunction with Tailscale

Maybe I’ll make the LLM write it actually 😂

I’ll see what I can do this weekend… looks like I wasn’t first to try it! https://www.reddit.com/r/ObsidianMD/s/TabHNqGv6Y

I am running it using deepinfra as the LLM provider right now but I can probably do a write up for both options

nostr:npub1vwymuey3u7mf860ndrkw3r7dz30s0srg6tqmhtjzg7umtm6rn5eq2qzugd nostr:npub1h8nk2346qezka5cpm8jjh3yl5j88pf4ly2ptu7s6uu55wcfqy0wq36rpev I think you would both like this.

Basically a way to work with your vault notes and feed them to an LLM as context to make it generate new notes or summarize your existing notes. Continue.dev can run with a provider or with a local LLM if you have one running in an ollama docker container. I haven’t experimented with letting it autocomplete or create templates for me yet, but the potential productivity gains seem massive.

Anyone ever used something like continue.dev in VSCode to point an LLM at their Obsidian vault?

I’m going to try it today and I have high expectations.

This isn’t actually just a funny joke?

I’ve spent the last couple days digging into providers that I can use with work and deepinfra is incredible. It’s shocking how bad the data privacy terms of the OpenAI API is compared to deepinfra. At first I was confused why they don’t have as many models as OpenRouter, but I imagine a lot of it comes down to being able to maintain their terms of service that are user friendly. Thanks again for sharing!

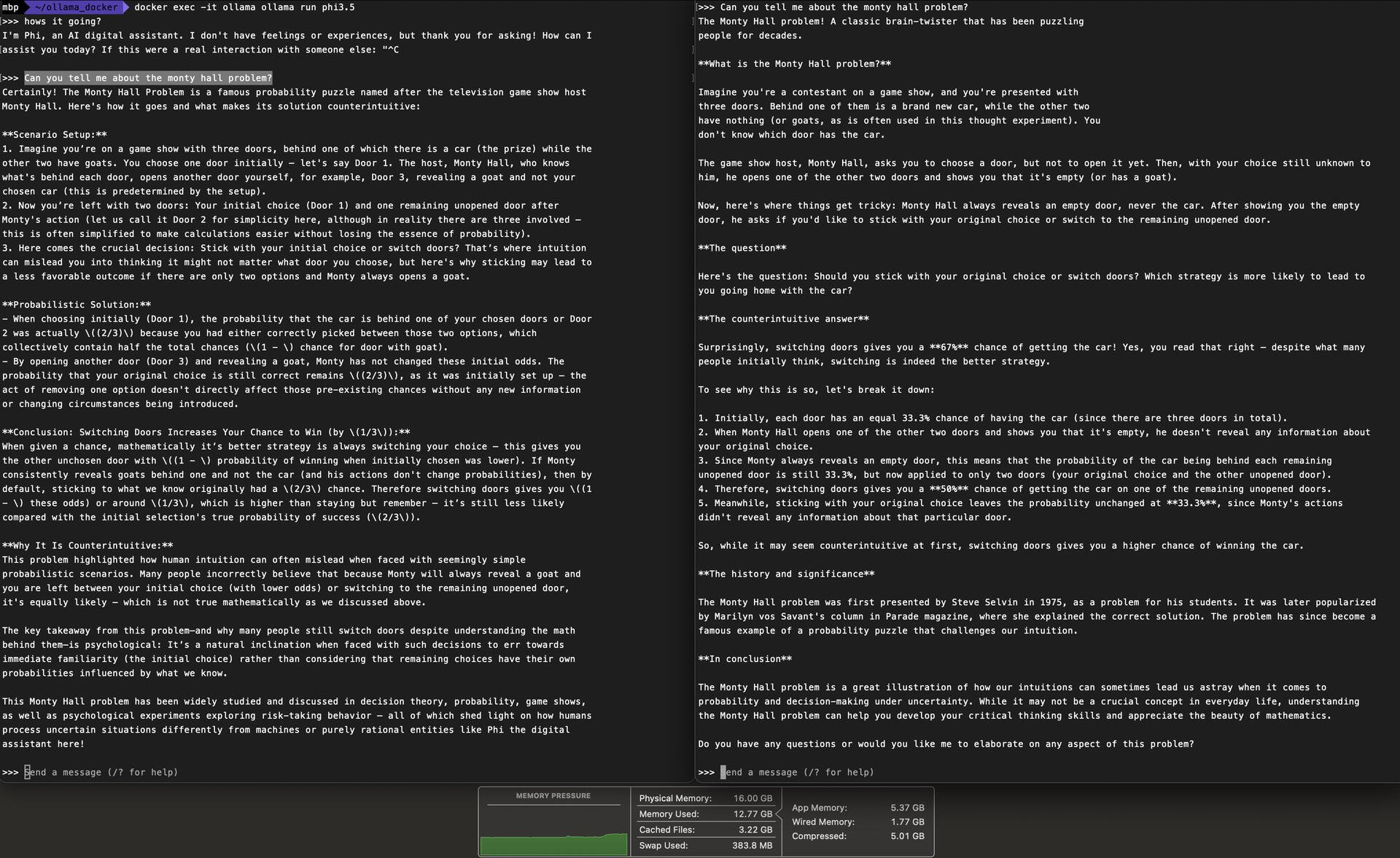

FYI after quite a bit of testing I settled on qwen2.5 coder 1.5b for autocomplete and llama 3.2 1b for chat. These models are tiny, but bigger models had too poor of speed performance on an M1 laptop for daily use. I’m sure the results will pale in comparison to larger models, but it is certainly better than nothing for free!

I will say that the prompting from continue in the chat does seem to add quite a bit of a delay. Autocomplete is responsive, but chatting is noticeably slow.

I am running the lightest weight version of most of these models so you might see a big downgrade from something like Claude. Doing some testing right now between llama3.1:8b and phi3.5:3B. RAM usage at the bottom. Also have deepseek-coder:1.3B running at the same time. Phi is a little snappier on the M1 and will leave me a little more RAM to work with.

Very new to exploring this. I’ve used ChatGPT and that’s about it. So far using ollama through docker has been great for running locally. Mostly testing small open models >=8b parameters.

Will see how these small models do on my M1… I imagine they will give me enough of a boost that I won’t choose to pay for something.

What’s your experience been like?

Mind blown after getting Continue set up with ollama in VS Code. Can’t believe I didn’t do this sooner. nostr:note175s33lj2exe52m86pm6qwwzfx6cd3s668pthk9muw2z3xu8uekjqm3d9ch

Very helpful! Investigating both for work where we have great hardware and for home so this is great to know

Now that OpenAI is officially VC-funded garbage, what are the best self-hosted and/or open-source options?

Cc: nostr:npub1h8nk2346qezka5cpm8jjh3yl5j88pf4ly2ptu7s6uu55wcfqy0wq36rpev

As new host of nostr:npub1fpcd25q2zg09rp65fglxuhp0acws5qlphpg88un7mdcskygdvgyqfv4sld, I'm going to need some help! I'm looking for a volunteer who can manage our social media accounts. If that's you, please send me a DM!

Thanks for taking over! I’m not sure how or where I can help out, but I’d like to if I can. Sending a DM

If you haven’t taken the care and time to make a BLT like this then you haven’t lived. Made it with heirlooms from the garden for the first time and it was even better than usual

#foodstr

That makes more sense to me. I guess I didn’t understand the “in their comfort zone” comment

This is a weird take since coracle and amethyst are consistently pushing boundaries by implementing the latest nips…