There's this sci-fi children's book series called Animorphs. Teens get the power to transform into animals, taking on their features and instincts. On the surface, it seems nice, but they're using these powers to fight a war against aliens. As the story goes on, you read how the characters' personalities and mentalities change as a consequence of war, and how they confront themselves over their actions. Pretty brutal series, looking back. I would love a more detailed expansion of this universe and its themes.

https://www.grunge.com/48686/animorphs-traumatizing-kids-series/

Meanwhile, in another discussion:

"They're made out of meat" 😮

And by that, I mean building systems that enable effective learning and adapt as the information landscape changes.

You win the game when you're the first to say it, and you say it like you're genuinely confused, waiting for applause:

"Well, yeah... but what if we didn't?"

nostr:nprofile1qqs06gywary09qmcp2249ztwfq3ue8wxhl2yyp3c39thzp55plvj0sgprfmhxue69uhhg6r9vehhyetnwshxummnw3erztnrdakszxmhwden5te0w35x2cmfw3skgetv9ehx7um5wgcjucm0d5q3camnwvaz7tmgda68y6t8dp6xummh9ehx7um5wgcjucm0d5ahp0n0 if you care to listen to the video game version of your narrative 😉

I'd say it's about time someone did! We riot today! 😡

I remember the Static Shock x Batman Animated Series/Batman Beyond/Justice League episodes were 🔥

Feels like a spider will take your shoe and then start smacking you with it!

The album commentary is also such a treat! 👏

Some would call it metamodernism. It's a train of thought that asks: "What spiritual and religious values should we take on, since modernism has essentially tried to explain away and make insignificant the traditional notions of religion and spirituality?"

I'd naively relate it to how some individuals don't hear in their dreams; they just see images. The specific type of processing doesn't matter, so long as the resulting behavior is compatible.

No. Move closer toward order. Life is able to decrease entropy locally, manifesting an order that persists and strives to continue, creating more pockets of decreased entropy. The larger the system, the more order is needed.

You going for 100%?

Should probably do it more often, even if it doesn't have the weight of a quarterly meeting, if the after-meeting was any indication of how it went 😆

And from 1968 - "The Mother of All Demos"

Now that OpenAI is officially VC-funded garbage, what are the best self-hosted and/or open-source options?

Cc: nostr:npub1h8nk2346qezka5cpm8jjh3yl5j88pf4ly2ptu7s6uu55wcfqy0wq36rpev

Anthropic and Perplexity are still in good standing with me. Otherwise, maybe Ollama could do what you need.
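For the Ollama route, here's a minimal standard-library Python sketch, assuming a local Ollama server on its default port 11434 and its `/api/generate` endpoint. The model name `llama3` is only a placeholder for whatever model you've pulled, and the helper names are made up.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Usage, once a model is pulled: `print(generate("Summarize Animorphs in one sentence."))`. Keeping `build_request` separate makes it easy to swap in a different host if the model runs on another machine.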

The poison is the dose and frequency. Usage can bring higher doses and higher frequency. We all need to figure out the healthy place to sit in. For vices, zero can be quite healthy.

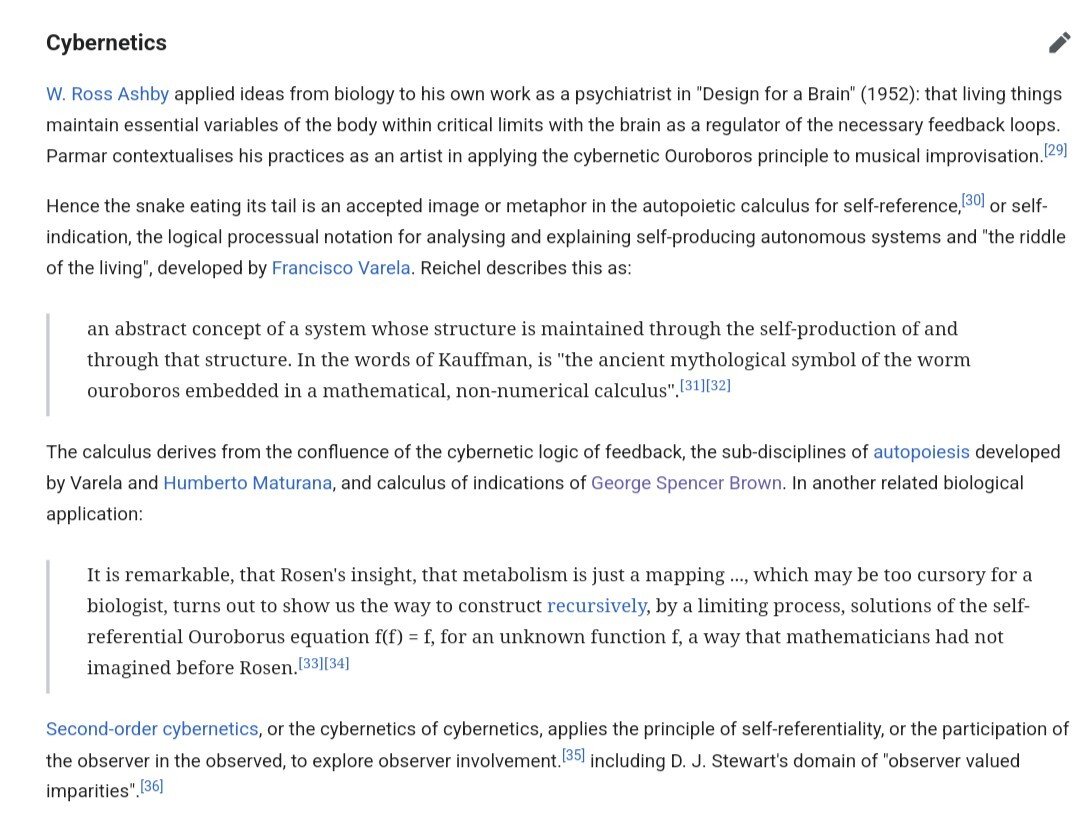

The formula is shorthand for a dynamical equation, like the ones behind fractals:

`f(t+1) = a*f(t)`, "the next state is dependent on what happened in its previous state."
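A minimal Python sketch of that idea: repeatedly apply an update rule so each state is computed from the previous one. The `iterate` helper is hypothetical; the first rule is the linear `f(t+1) = a*f(t)` with an assumed `a = 0.5`, and the other two are the Mandelbrot-style map `z → z*z + c`, the kind of iteration behind fractal images.

```python
def iterate(rule, start, steps):
    """Return [start, rule(start), rule(rule(start)), ...] with steps + 1 states."""
    states = [start]
    for _ in range(steps):
        states.append(rule(states[-1]))  # next state depends only on the previous one
    return states


# Linear rule f(t+1) = a * f(t); its closed form is f(t) = a**t * f(0).
a = 0.5
linear = iterate(lambda x: a * x, 1.0, 4)
print(linear)  # [1.0, 0.5, 0.25, 0.125, 0.0625]

# Mandelbrot-style rule z -> z*z + c, starting from z = 0.
bounded = iterate(lambda z: z * z - 1, 0j, 4)   # c = -1 cycles between 0 and -1
escaping = iterate(lambda z: z * z + 1, 0j, 4)  # c = 1 blows up: 0, 1, 2, 5, 26
```

Whether a starting `c` stays bounded or escapes is exactly what the Mandelbrot picture colors in.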

With `f(f)=f`, it's more mind-bending than that. f is a function that takes itself as input and produces itself. For something to be alive (living as a process, alive as a state), it must come from something alive (if you subscribe to a specific definition of life). It is itself the origin.
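A loose illustration in Python, not the article's formalism: two functions that literally satisfy `f(f) == f`, plus a quine, a program whose output is its own source, as a tiny example of something that "produces itself". All names here are made up for the sketch.

```python
import io
from contextlib import redirect_stdout


def identity(g):
    """The trivial fixed point: it returns whatever it is given, including itself."""
    return g


def self_producer(_ignored):
    """Ignores its input entirely and always hands back itself."""
    return self_producer


# Both satisfy f(f) == f, in very different ways.
assert identity(identity) is identity
assert self_producer(self_producer) is self_producer

# A two-line Python quine: running it prints exactly its own source.
QUINE = "s = 's = %r\\nprint(s %% s)'\nprint(s % s)"


def run(source):
    """Execute Python source and capture what it prints."""
    buffer = io.StringIO()
    with redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue()


print(run(QUINE) == QUINE + "\n")  # True: the output reproduces the source
```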

Sparked from a section of the article.

More than cyclical. The object's existence depends on its own self-production. For the object to be, it must already have been.

I get the hype aversion. I get the 'technology' aversion, but if you want to recognize handwriting in an image, you're not going to do it by explicitly building rules from the ground up; it just doesn't work that way.

All "machine learning" and AI (deep learning) is, is a defined process to minimize error, and it uses numbers to do so. The specific numbers are essentially the rules that the error-minimizing algorithm has 'found', which you don't necessarily care about because you just want the right answer.
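A minimal Python sketch of "a defined process to minimize errors", played as warmer/colder: nudge a single number up or down, keep the nudge only if the error shrinks, and take smaller steps when both directions get colder. This is a simple derivative-free pattern search picked for illustration; deep learning normally uses gradient descent instead. The toy data and the one-parameter model `y = w * x` are assumptions.

```python
def squared_error(w, data):
    """Total squared error of the one-parameter model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data)


def hot_and_cold(data, w=0.0, step=1.0, tol=1e-6):
    """Keep any nudge that lowers the error; halve the step when neither direction helps."""
    err = squared_error(w, data)
    while step > tol:
        for candidate in (w + step, w - step):
            cand_err = squared_error(candidate, data)
            if cand_err < err:  # "warmer": keep this guess
                w, err = candidate, cand_err
                break
        else:  # "colder" both ways: search more finely
            step /= 2
    return w


data = [(1, 3), (2, 6), (3, 9)]  # made-up points generated by y = 3x
print(hot_and_cold(data))  # 3.0
```

The number that comes out is the "rule" the search found; nobody wrote it down by hand.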

You could simplify all AI and ML algorithms to playing advanced games of "hot and cold".

- You have a bunch of pixel values that represent text,

- You make a guess "this looks a lot like an L, an i, or 1, but it looks most likely to be a 7, so I'll say that this image is a 7"

- Turns out the correct answer was "T", but they write like a chicken, so I'll have to make an adjustment for next time and hopefully be correct then.
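The guess-and-adjust loop above is roughly what a multiclass perceptron does. A minimal Python sketch, assuming made-up 3x3 "pixel" glyphs for `T`, `7`, and `1`: when a guess is wrong, nudge the correct label's weights toward the image and the wrong guess's weights away from it. Real handwriting recognizers use far larger models, so treat this as a toy.

```python
# Hypothetical 3x3 glyphs, flattened row by row (1 = ink).
GLYPHS = {
    "T": (1, 1, 1,
          0, 1, 0,
          0, 1, 0),
    "7": (1, 1, 1,
          0, 0, 1,
          0, 0, 1),
    "1": (0, 1, 0,
          0, 1, 0,
          0, 1, 0),
}


def score(weights, pixels):
    return sum(w * p for w, p in zip(weights, pixels))


def guess(model, pixels):
    """Pick the label whose weights score highest (ties go to the first label)."""
    return max(model, key=lambda label: score(model[label], pixels))


def train(examples, max_epochs=100):
    size = len(next(iter(examples.values())))
    model = {label: [0] * size for label in examples}
    for _ in range(max_epochs):
        mistakes = 0
        for answer, pixels in examples.items():
            guessed = guess(model, pixels)
            if guessed != answer:  # wrong: adjust for next time
                mistakes += 1
                model[answer] = [w + p for w, p in zip(model[answer], pixels)]
                model[guessed] = [w - p for w, p in zip(model[guessed], pixels)]
        if mistakes == 0:  # a full pass with no wrong guesses
            break
    return model


model = train(GLYPHS)
print({label: guess(model, px) for label, px in GLYPHS.items()})
```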

Tool is the key word. So long as it can be used in a way that is meaningful to others, it will be used. I won't claim that AI can bring truth any more than a pencil can, but it can be very useful, helping us find the relative truths we're looking for. We're betting on the capacity LLMs have to help us navigate the sea of information we're swimming in.

For any client with a "share via njump" option, perhaps a setting could let you specify which njump instance to use.
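A minimal Python sketch of such a setting, assuming the client stores a user-chosen njump base URL (the `njump_instance` parameter and `njump.example.org` host are hypothetical) and appends a NIP-19 identifier to it the way `njump.me` links do. It refuses anything that isn't a public identifier, so an `nsec` never ends up in a share link.

```python
DEFAULT_NJUMP = "https://njump.me"

# NIP-19 prefixes that are safe to share publicly (nsec is deliberately absent).
SHAREABLE_PREFIXES = ("npub1", "note1", "nprofile1", "nevent1", "naddr1")


def share_link(nip19_id: str, njump_instance: str = DEFAULT_NJUMP) -> str:
    """Build a share URL on whichever njump instance the user configured."""
    if not nip19_id.startswith(SHAREABLE_PREFIXES):
        raise ValueError(f"not a shareable NIP-19 identifier: {nip19_id[:8]}...")
    return f"{njump_instance.rstrip('/')}/{nip19_id}"


print(share_link("npub1example"))
print(share_link("note1example", "https://njump.example.org/"))
```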

Some clients do that for commenting. Never mandatory, though.

Though, when you do, flush out all the influencers who have nothing new to say with nostrGPT 🤩

Entertain them to death, or just mimic their posting patterns to the point where they leave.

Play adversarial games with normal users. Continually train it, lowering perplexity globally (in theory), and incentivize everyone to stop writing like bots 😜

https://wikifreedia.xyz/social-media-enthropy/

nostr:naddr1qvzqqqrcvgpzphtxf40yq9jr82xdd8cqtts5szqyx5tcndvaukhsvfmduetr85ceqyw8wumn8ghj7argv43kjarpv3jkctnwdaehgu339e3k7mf0qq2hxmmrd9skcttdv4jxjcfdv4h8g6rjdac8jghqq28

> "surprise" factor is in someone's social media content. That is, how easy is it to predict the next thing that they will produce, based upon the past things that they have produced.

LLMs provide a perplexity metric for their inputs. Higher perplexity means the input is more "surprising" with respect to the model's training corpus.

Train nostrGPT and look at the average perplexity score per person.
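Real LLM perplexity uses each token's probability given the tokens before it. As a runnable stand-in, here's a minimal Python sketch with a unigram word model and add-one smoothing (an assumption, not nostrGPT). Perplexity is `exp` of the average negative log-probability, and the per-person score is the mean over that person's notes. The corpus and author names are made up.

```python
import math
from collections import Counter


def train_unigram(corpus):
    """Word probabilities from a list of notes, with add-one smoothing for unseen words."""
    counts = Counter(word for note in corpus for word in note.lower().split())
    total = sum(counts.values())
    slots = len(counts) + 1  # every known word plus one shared "unseen word" slot

    def prob(word):
        return (counts[word.lower()] + 1) / (total + slots)

    return prob


def perplexity(note, prob):
    """exp of the mean negative log-probability of the note's words."""
    words = note.split()
    if not words:
        raise ValueError("cannot score an empty note")
    return math.exp(-sum(math.log(prob(w)) for w in words) / len(words))


def average_perplexity_by_author(notes_by_author, prob):
    """Mean perplexity of each author's notes: lower means more predictable."""
    return {
        author: sum(perplexity(n, prob) for n in notes) / len(notes)
        for author, notes in notes_by_author.items()
    }


corpus = ["gm nostr", "gm gm", "gm nostr gm", "zaps for everyone"]
model = train_unigram(corpus)
scores = average_perplexity_by_author(
    {"gm_bot": ["gm gm", "gm nostr"], "poet": ["spiders smack shoes", "made out of meat"]},
    model,
)
print(scores)  # gm_bot scores lower (less surprising) than poet
```

A useful sanity check: a model that gives every word probability 1/4 yields a perplexity of 4, the "effective number of choices" per word.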

Feasible, but quite impractical without a hefty budget, I'd guess.

Gzip all their notes and then compare the size ratio 😆
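The gzip joke works as a crude predictability score: repetitive text compresses well, surprising text doesn't. A minimal Python sketch using the standard `gzip` module; the ratio is compressed bytes over raw bytes, so lower means more predictable. The random-letter notes are a hypothetical stand-in for "surprising" content, and gzip's fixed header makes very short inputs look worse than they are, so this only makes sense over a decent volume of notes.

```python
import gzip
import random
import string


def compression_ratio(notes):
    """Compressed size / raw size of all of someone's notes joined together."""
    raw = "\n".join(notes).encode("utf-8")
    if not raw:
        raise ValueError("no text to compress")
    return len(gzip.compress(raw)) / len(raw)


repetitive = ["gm"] * 200
rng = random.Random(0)
varied = ["".join(rng.choice(string.ascii_lowercase) for _ in range(40)) for _ in range(10)]

print(f"repetitive: {compression_ratio(repetitive):.2f}")
print(f"varied:     {compression_ratio(varied):.2f}")
```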