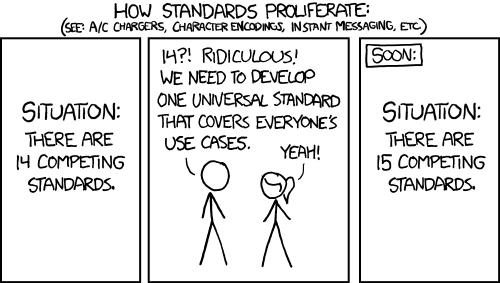

Why do we keep re-implementing the p2p/internet alternative and failing to get it right? I'm taking my time at the airport to read about pear, arweave, freenet, gnunet, threefold, 384, i2p, tor, xmpp, p2panda, etc. It seems like the basic problem is not that hard, but it's complex enough that people can have an infinite number of opinions about how to approach it, and because we're all grumpy dissidents here we can't compromise and get along. i2p for example has an awful UI, which could be largely fixed if a single developer put some time into cleaning it up. People have been trying to fix the internet for as long as I've been alive, using many of the same techniques. Why is it not fixed?

Discussion

I'm with you. Was recently reviewing Urbit again and I think I'm starting to understand it more. You may be interested in it as well.

I'm really fond of their PKI or Public Key Infrastructure

I looked at urbit a few years ago. Definitely in this list. The complexity and immaturity of applications is what sent me to nostr. But I haven't written it off.

Urbit interests me because it is the only tech project ever made that has simplicity as part of the core design. Over time, it will only get more simple as well.

It’s just a fundamentally different ethos from anything else out there. Difficult to compare

I guess complexity was the wrong word, because you're right, every layer is very simple. Maybe complicated? But the thing that really turned me off was not knowing if the purely functional paradigm could remain performant at a low level. Evidently not, because they bail out to C code as an optimization. Like any foundationalist paradigm, if a single stone crumbles it casts the rest of the project into doubt.

"the pure function" always comes at the cost of infinite copying of data

just watch a haskell compilation sometime with a system monitor open to see just how much memory it uses (and then discards)

this is why #golang uses a lot of functional concepts but discards forcing you to avoid pointers, and it's a delicate compromise because pure functions are without race conditions and this is another goal of Go - enabling concurrent processing

for good reason: if certain operations happen concurrently on a single piece of data, the go runtime kills everything, and i am glad they made it that way, because races are the most elusive bugs there are

Urbit is a totally new Operating System. So naturally, it will be one step forward, two steps back in terms of performance.

Having said that though:

- Nock can be run on bare metal. The only reason we use C today is bc it’s not running on bare metal. So they don’t “bail out” to C for performance, they just use C as the translation from Nock to machine code if that makes sense. https://x.com/rovnys/status/1766183255097905157?s=46

- They have recently achieved 1 GB / second read speeds of data https://x.com/hastuc_dibtux/status/1765075518637154374?s=46

A stone crumbling is not possible in this project because of the way it was designed. They designed the whole system together as a whole and then worried about performance later. Over time, one team has found a slightly more performant way to design the system, but they are still following the same design. It’s called Plunder. https://x.com/sol_plunder/status/1704554384930058537?s=46

The ethos comment is key. Hard to describe but Urbit is a calmer place to be. Feels more human friendly. Made because things are harder there it’s built in proof of work? It’s a long term project and worthy of the effort. It’s a plant trees for the next generation situation.

*maybe (oh, you can delete or fix spelling errors there too... lol)

I stopped using umbrel's containers when they made urbit available (I update manually now).

Urbit seems like shitcoin garbage

At least it's modular shitcoin garbage 👍

Urbit has a native bitcoin wallet. Repeat: a team made a bitcoin wallet with Hoon. And soon an urbit native lightning wallet will be released as well.

The goal is to move the address space off of ethereum and onto an urbit native chain as well. NFTs have very few use cases, but it turns out that one of them is digital land deeds.

What the fuck is a digital land deed?

There is a limited amount of address space in urbit. So it is analogous to land in that sense. You just need something permanent that says this address belongs to this person. There are ways that they can move off of ethereum and use something natively urbit for that. Ethereum was just used to bootstrap it

You're retarded 🤷

There is absolutely no limit to the address space in these shitcoin projects. Call them digital land deeds, pets, or bananas or whatever, put them on whatever chain (including Bitcoin) doesn't change anything.

Absolutely nothing interesting about urbit 😤 Arbitrary ownership schemes have little to do with Bitcoin, this repeated grift has been done many times

Urbit is different from nfts, have you read their docs?

I have, it's garbage written for non-technical people who likely can't differentiate between hosted cloud services and virtual machines 😅 The grift takes the confusion and expands upon it.

"Stars", "Planets" and "Galaxies" are basically used to describe paying for someone's Amazon instance running a repo filled with random selections from the Awesome-Selfhosted GitHub, then hosting that (in most cases, free) software in a VM one must pay for with KYC tokens (Ethereum) 🫠

Tldr; it's an attempt to re-sell shitty cloud infrastructure using space terms

Not correct. Easy for you to boot up a comet and explore the ecosystem. Reading and doing aren’t the same. Urbit is a long term project and a worthy one.

Then why did they build it on Ethereum? 🫠

Also not a fan of Ethereum, I get it. I’m not a dev, but practically speaking it is only used to obtain and (if needed) reboot your unique ID. Using L2 you don’t even need a wallet. Otherwise you don’t have to interact with it. Not an expert on the history, but my understanding is that when Urbit was built 10ish years ago, it was the best blockchain tool available at the time. It was a pragmatic choice. And whatever the flaws of that blockchain choice, at the community level it does seem to help prevent spam and bot problems in practice. Thus, P2P support and trust appear much higher than here (in my several weeks of nostr exposure anyway). I’m here to learn about nostr. There are some advantages here, but my main suggestion is that if one cares about learning in the P2P space, glossing over Urbit casually would leave a gap in knowledge.

nostr isn't p2p, it is just clients and relays. Consider checking out Keet rather than wasting your time (and money) with Urbit; it's a p2p messaging/conferencing application that uses a DHT to make connections, no tokens necessary.

Generally ETH L2s make no sense, because Ethereum's entire existence comes from the idea that bitcoin can't scale in layers due to its rigid limitations, and that other "chains" are therefore needed to support applications and other use cases. The term "ETH L2" is an admission that ETH is pointless, yet another half-assed attempt at a narrative shift. It would do you well to avoid anything that uses nonsense jargon like that.

It's not built on Ethereum. They're just using it to associate a Galaxy, Star, and Planet to a unique public key (in this case an Ethereum address). Those aren't just "tiers of cloud hosting," they're uniquely identifiable points that facilitate P2P comms (peer discovery and routing) inside the network.

It's annoying that they've built their PKI on Ethereum. I don't like it either. If it's possible to move it onto Bitcoin I'll be one of the first people to advocate for it.

Urbit is a worthy piece of tech in itself and its merits shouldn't be understated just because its public key infrastructure is on Ethereum.

Urbit massively simplifies the entire dance of spinning up a VM, installing n+1 packages, and running n+1 Docker containers. It's literally a VM that you can run anywhere that has its entire communications and publishing stack built-in.

You should really read the overview before saying anything about it. It just makes you look massively uninformed.

🙂↔️

I think it would benefit you if you read a little bit more about it. 🙂

That question resonates a looot.

Thanks for listing them btw. Got some new ones to check out.

Is there some kind of comparative analysis somewhere that you know of?

I've always been a fan of freenet, I think it's an extremely elegant system. The problem is potentially storing and forwarding data you vehemently disagree with as a user.

(Same issue occurs with relays in nostr, but at least that's opt-in)

Is there somewhere I can go to understand it? I've talked to Ian Clarke on multiple occasions (most recently yesterday, which sent me down this rabbit hole), but he does a terrible job of explaining what it would look like in practice.

What do you mean in practice? The network exists and there are several social media platforms built on it.

Sone: "twitter like"

Last I checked up on it, it had no database persistence so that really limited its usefulness imho

FMS: probably the oldest, it's essentially a forum system.

Both operate on the concept of following other ids, and web of trust. Happy to elaborate/clarify, I'm not sure how deeply to explain it.

There was a very interesting WoT plugin that was intended to undergird future applications, but I don't know if it ever reached completion: https://github.com/hyphanet/plugin-WebOfTrust it was iirc academically interesting if nothing else for web-of-trust with transitive trust.

Rambling that is probably redundant but I don't know how much you've read so far:

The whole system is built around recursively searching the network for different keys: content keys and signed keys (keys as in key-value store).

Content keys are obviously derived from the content of their data, and signed keys are derived from the signer's public key (the data stored under them carries the signature). Layered on top of signed keys are "updatable signed keys", which are actually just a convention for retrieving the latest version of a signed key by searching subsequent versions (in fact they are different keys with a -1, -2, ... -N suffix).
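To make the key scheme above concrete, here is a minimal sketch in Python. It is not Hyphanet's actual key format: `content_key`, `signed_key` and `latest_version` are hypothetical names, SHA-256 stands in for whatever the real network uses, a plain dict stands in for the network lookup, and signature checking is left out entirely.

```python
import hashlib

def content_key(data: bytes) -> str:
    """Content key: derived purely from the bytes being stored."""
    return hashlib.sha256(data).hexdigest()

def signed_key(pubkey: bytes, name: str, version: int) -> str:
    """Hypothetical signed-key locator: the owner's key plus a 'name-N' suffix."""
    return hashlib.sha256(pubkey + f"{name}-{version}".encode()).hexdigest()

def latest_version(store: dict, pubkey: bytes, name: str, start: int = 1):
    """Probe name-1, name-2, ... until one is missing; return the last version found."""
    found = None
    version = start
    while signed_key(pubkey, name, version) in store:
        found = version
        version += 1
    return found

# Toy "network": three successive versions of one identity's post index.
store = {}
pk = b"alice-public-key"  # placeholder bytes, not a real key
for v, body in enumerate([b"index v1", b"index v2", b"index v3"], start=1):
    store[signed_key(pk, "posts", v)] = body

print(latest_version(store, pk, "posts"))
```

The point is only that an "updatable" key needs no special machinery: following someone amounts to repeatedly looking for the next suffix.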

FMS and Sone both work by "following" an identity's updatable key, which will be updated with an index of their latest posts, and possibly profile data (I forgot exactly how each works, but they're slightly different, and incompatible with each other)

When you make a request for a particular key, intermediate nodes on the route to where that key *should* end up will keep an LRU of requests, and if the key ends up being created while they have retained that request, they'll forward the result, and hopefully it will end up being sent back to your node in very short order like a notification (otherwise your node will periodically poll and should see it later)
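The "LRU of requests" idea can be sketched roughly like this. `PendingRequests` is a hypothetical helper, not taken from the Hyphanet codebase, and the capacity and requester ids are made up for illustration.

```python
from collections import OrderedDict

class PendingRequests:
    """Bounded LRU of keys that were requested but not found yet (hypothetical)."""

    def __init__(self, capacity: int = 1000):
        self.capacity = capacity
        self._waiting = OrderedDict()  # key -> list of requester ids

    def remember(self, key: str, requester: str) -> None:
        requesters = self._waiting.setdefault(key, [])
        if requester not in requesters:
            requesters.append(requester)
        self._waiting.move_to_end(key)          # mark as most recently used
        while len(self._waiting) > self.capacity:
            self._waiting.popitem(last=False)   # evict the least recently used key

    def on_insert(self, key: str) -> list:
        """Called when the key is finally created; returns who to forward it to."""
        return self._waiting.pop(key, [])

pending = PendingRequests(capacity=2)
pending.remember("k1", "node-a")
pending.remember("k2", "node-b")
pending.remember("k1", "node-c")   # touches k1, so k2 is now the oldest entry
pending.remember("k3", "node-d")   # over capacity: k2 is forgotten
```

If a key was evicted before it got created, the requester simply falls back to the periodic polling described above.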

so, just to be clear, most of those concepts are repeated in the nostr protocol

event ID = content hash

signature = on content hash

user identity = public key

scanning the web of trust = #coracle

haha lol! one of the few, just had to put that in at the end
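For reference, the "event ID = content hash" part looks roughly like this in Python, following the NIP-01 rule that the id is the SHA-256 of the serialized array `[0, pubkey, created_at, kind, tags, content]`. This is a sketch: `nostr_event_id` is a made-up name, the pubkey is a dummy value, Python's `json.dumps` may escape some rare control characters differently from what NIP-01 specifies, and the Schnorr signature over the id is left out because the standard library has no secp256k1.

```python
import hashlib
import json

def nostr_event_id(pubkey_hex: str, created_at: int, kind: int,
                   tags: list, content: str) -> str:
    # Compact JSON (no whitespace), UTF-8 encoded, then hashed.
    payload = json.dumps(
        [0, pubkey_hex, created_at, kind, tags, content],
        separators=(",", ":"),
        ensure_ascii=False,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

dummy_pubkey = "ab" * 32  # placeholder, not a real key
event_id = nostr_event_id(dummy_pubkey, 1700000000, 1, [], "hello nostr")
print(event_id)
```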

oh yeah one big difference

they used RSA, we use EC

the signature data on such old systems was unwieldy... most notes are barely 5% of the cost of the signature and keys

and i should add that in theory we could probably not lose a lot of security shaving another 16 bytes off signatures

it has got me thinking about the idea of making a really raw, simplified version of nostr

like, imagine there is just events, created_at, with content, and a kind, no tags

tags could be subsumed into NIP-32 labels, so you can add them concurrently by clients instead of bundling them together with the event

make the encoding binary, so that all events are a uniform format, the data (content), then the hash of the data, then the signature on the data, everything in binary, no escaping, only a varint length prefix on variable length fields (ie content, everything else is fixed)
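A rough sketch of what such a binary layout could look like, in Python. Everything here is an assumption for illustration and not a proposed standard: the field order and widths (32-byte pubkey, 8-byte created_at, 2-byte kind), the LEB128-style varint, and SHA-256 as the hash. The signature is just opaque bytes, since the standard library cannot produce a real Schnorr signature.

```python
import hashlib
import struct

HEADER = struct.Struct(">32sQH")  # pubkey, created_at, kind (hypothetical layout)

def encode_varint(n: int) -> bytes:
    """Unsigned varint: 7 bits per byte, high bit set means more bytes follow."""
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)

def decode_varint(buf: bytes, pos: int = 0):
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7

def encode_event(pubkey: bytes, created_at: int, kind: int,
                 content: bytes, sig: bytes) -> bytes:
    # data, then the hash of the data, then the signature
    data = HEADER.pack(pubkey, created_at, kind) + encode_varint(len(content)) + content
    return data + hashlib.sha256(data).digest() + sig

def decode_event(buf: bytes, sig_len: int = 64):
    pubkey, created_at, kind = HEADER.unpack_from(buf, 0)
    length, pos = decode_varint(buf, HEADER.size)
    content = buf[pos:pos + length]
    pos += length
    digest = buf[pos:pos + 32]
    sig = buf[pos + 32:pos + 32 + sig_len]
    if hashlib.sha256(buf[:pos]).digest() != digest:
        raise ValueError("hash does not match data")
    return pubkey, created_at, kind, content, sig

raw = encode_event(b"\x01" * 32, 1700000000, 1, b"x" * 300, b"\x02" * 64)
```

Only the content is variable length, so a decoder needs no escaping and no parser beyond the one varint.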

you could shrink down the signature field to 48 bytes, because with such a massive data set it's really unlikely to be worth anyone's time trying to forge signatures. events fly past at a million miles an hour, so a 1/4 reduction in signature security is probably not that big a deal, because most of the time an event is worth nothing. this protocol doesn't certify monetary transactions, so it doesn't need to be as strong, and it needs low bandwidth more than it needs the level of security required for money

the real reason why json was used as the message encoding for nostr in the first place was the inventor was a python/django (probably) dev who was used to using this as a message format, as most web apps use json as a message format, as they natively decode it

and the reason why tags are in the event is hashtags: it reduces the complexity of searching for hashtags for a twitter like experience

these are not necessary to the fundamentals

i'm gonna start campaigning to strip down the event format, remove tags and propose a compact 48 byte schnorr signature and a simplified binary encoding that will at minimum halve the bandwidth cost of nostr
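A quick back-of-the-envelope check of the bandwidth claim, as a Python sketch. The JSON side mirrors the usual nostr event object with hex-encoded id, pubkey and sig. The binary side assumes a hypothetical layout of 32-byte pubkey, 8-byte timestamp, 2-byte kind, varint length, content, 32-byte hash and a 48-byte signature. None of those widths come from an actual proposal.

```python
import json

def json_event_size(content: str) -> int:
    event = {
        "id": "00" * 32,        # 32 bytes become 64 hex characters
        "pubkey": "00" * 32,
        "created_at": 1700000000,
        "kind": 1,
        "tags": [],
        "content": content,
        "sig": "00" * 64,       # 64 bytes become 128 hex characters
    }
    return len(json.dumps(event, separators=(",", ":")).encode("utf-8"))

def binary_event_size(content: str, sig_len: int = 48) -> int:
    n = len(content.encode("utf-8"))
    varint_len = max(1, (n.bit_length() + 6) // 7)  # bytes needed for the length prefix
    return 32 + 8 + 2 + varint_len + n + 32 + sig_len

for text in ["hello nostr", "x" * 1000]:
    print(len(text), json_event_size(text), binary_event_size(text))
```

Under these assumptions the saving is largest for short notes, where hex-encoded keys and signatures dominate; as the content grows, the content itself dominates and the ratio shrinks.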

and probably come up with a way of making a protocol translator that securely bridges between a more simple binary format and the source events so that we can start to move forward with a much simpler strategy of building this thing...

it's just a thought at this point, this will eventually metastasise into a whole thing

i can think of no better reason to stack sats than to be able to participate in building a really good social network protocol, nothing makes me more excited than making information flow uncontrollable

Nice! That's helpful, I'll look into those projects. Ian always talks in such an abstract way about contracts and delegates it's hard to see how that bridges to content storage and discovery.

There's also a channel on irc, though even back in the early 2010s it was pretty dead. They recently rebranded to hyphanet so it's probably #hyphanet on libera.chat

Good question, thanks for sharing.

No answer from me either.

I don't know the technology, but it's because this world is hell. It's usually broken.

cuz muh hierarchy is bad mmmmk....

Maybe just bad branding?

Joking. I don’t know. I’ve never really heard of any of those things. Maybe that’s the problem? 😆

That's probably a big part of it. Or marketing in general, the target audience for tech-as-tech is tiny. But clearly if you provide real value using that tech it can have wider appeal.

Usually people rarely care about the tech. They just want a solution. It’s possible that the tech you mentioned is fine and could work if enough people cared, but like I said I don’t know anything about it. From my own experience with niche tech stuff - it’s very easy to learn about and ignore because it feels too technical and it’s not exactly clear why I should care when this other more prominent thing exists, it works and it’s easy.

💯 With consumers, the tech is almost completely irrelevant as long as the applications built on it are fluid and functional. For devs, the tech is interesting and important, but speed of iteration and onboarding seem to be most important to win out in the end. #Nostr checks off the developer box and is making a lot of progress on the consumer side.

It's not a marketing problem. It's an incentives problem. Everyone who needs p2p protocols builds them just for themselves and innovates in isolation. We have had many such innovations that cannot be understood or reused, especially in open source software.

Instead we need a front: coordination between researchers specifically working on this topic. This research group's main goal would be to innovate, document and educate, and it should make money by educating. Don't pay $100k to study at MIT; instead pay significantly less and learn from the real builders. Ask them why they did what they did, and what their train of thought was.

well, i think nostr comes pretty close to nailing the right way to do it, simplify the API

Bitcoin is p2p, the final frontier.

Depends on what you're trying to achieve. P2P for apps is possible only if your ISP allows it, and most don't. Someone ends up having to run a TURN server, which defeats a lot of the benefits of p2p, such as not requiring a server and not paying latency/bandwidth for extra hops. And the few TURN servers get saturated.

Anyway, yeah p2p is cool. It's not that solutions don't exist, it's that everyone uses their phones and lacks basic network skills in the US. People from oppressive regimes are networking geniuses in comparison because they had to learn it or risk the death penalty.

1. Inertia.

2. "They" don't want it to be fixed, since it was designed this way.

3. Not enough people care. Yet.

The problem, IMHO, boils down to adoption.

Back in the day p2p file sharing REALLY worked out.

Why? Because LOTS of people were using it.

IF we had mass adoption of Meshtastic, for example, we would NEVER have to pay an ISP or big telco in order to send messages or communicate.

But, will we have mass adoption of Meshtastic? Maybe, maybe not, who knows???

Nostr, on the other hand, has a low enough barrier to entry that it makes me believe that yes, Nostr may reach critical mass and be the p2p flag carrier of the decade... And THIS is why I'm around! =)))

nostr:note1t8l8wqat36kt4j09zmfdpsk4a6mju69h6dk56d5r8twr4vyh68kssuam2v

can you list the things which are wrong with the internet? seems fine to me.

1. BitTorrent is missing from that list, and

2. I think the reason it's not fixed is because for a network of users a new and better solution needs to be 10x better than the existing one to overcome the network effect of the old tech. IPv6 is a great example: even with today's challenges with IPv4, and v6 being widely available for >20 years, adoption is still quite marginal

Because it is undesirable. It is very easy to open a port and relatively easy to get a dynamic DNS account.

So everything that p2p offers is easy to replace by normal Internet Protocol.

But it is impossible to make a scalable network with good UX when every user has to be a devops engineer.

Discovery and search are also physically impossible to do in p2p, beyond simple distributed hash maps. You can't do distributed Google, or distributed notifications or distributed counting of likes or distributed threads. All of these need centralization.

For 1-on-1 stuff like private chat rooms, things are much simpler, but again, you need every user to have their own always online server. That simply won't happen. Civilization is entirely about division of labor. People can't do everything on their own.

They solved it on Silicon Valley, c'mon y'all, the blueprint is there.

Labor != Free

Pay devs