#asknostr Among the problems that Nostr faces, the child porn problem is a very, very, very bad problem.

A VERY bad problem.

What is the current thinking among developers about how to deal with this?

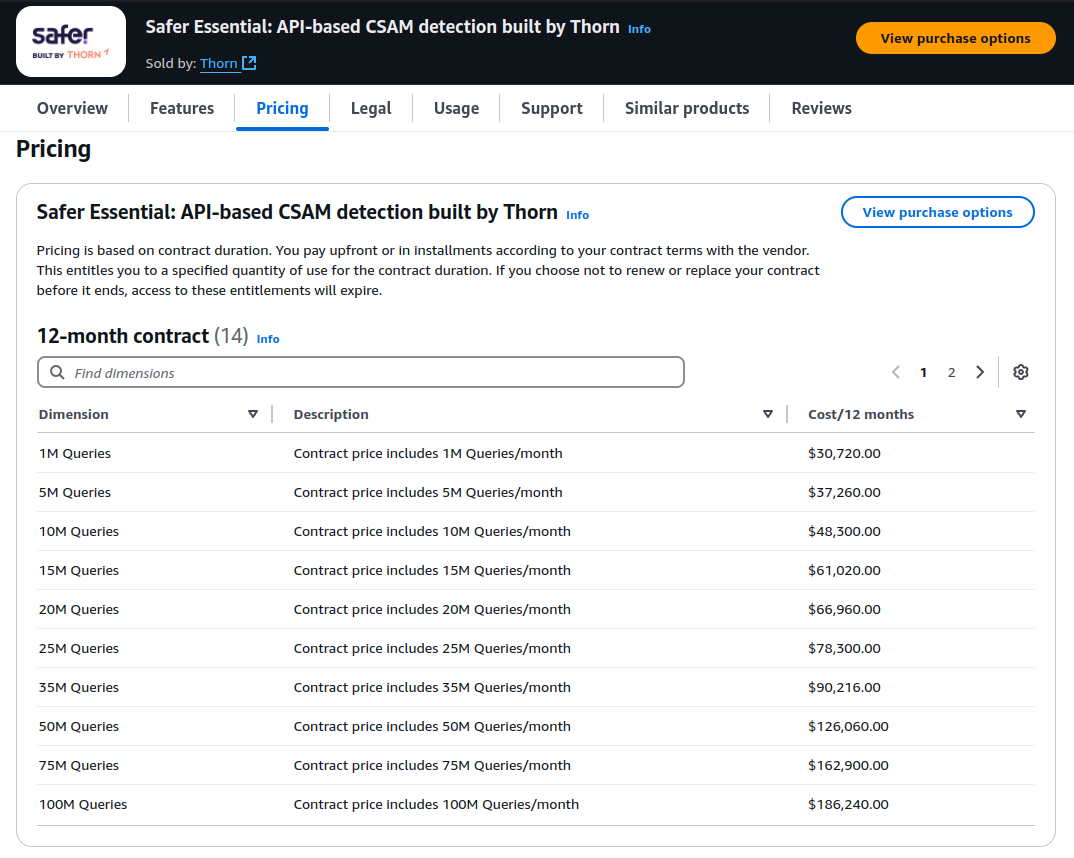

Nobody likes censorship, but the only solution I can think of (SO FAR) is running an image identification service that labels dangerous stuff like this, and then broadcasts a list of (images, notes, users?) that score high on the "oh shit this is child porn" metric. Typically these systems just output a float between 0 and 1, which is the score.
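To make the "scoring high" part concrete, here is a minimal sketch of the filtering step, assuming the classifier has already produced a score between 0 and 1 for each media URL. The function name `flag_high_scores` and the 0.9 cutoff are made up for illustration; a real service would have to tune the threshold on labelled data.

```python
from typing import Dict, List

# Hypothetical cutoff, chosen only for illustration; not a recommended value.
FLAG_THRESHOLD = 0.9


def flag_high_scores(scores: Dict[str, float],
                     threshold: float = FLAG_THRESHOLD) -> List[str]:
    """Return the media URLs whose score is at or above the threshold, highest first."""
    for url, score in scores.items():
        # The classifier is assumed to emit a float in [0, 1].
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {url} is outside [0, 1]: {score}")
    flagged = [url for url, score in scores.items() if score >= threshold]
    # Sort so the most confident detections come first.
    return sorted(flagged, key=lambda url: scores[url], reverse=True)
```

The output is just a list of URLs, so whatever gets broadcast (images, notes, users) can be decided separately from the scoring itself.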

Is anyone working on this currently?

I have a good deal of experience running ML services like image identification at scale, so this could be something interesting to work on for the community. (I also have a lot of GPU power, and anyway, if you do it right, this actually doesn't take a ton of GPUs, even for millions of images per day.)

It would seem straightforward to subscribe to all the nostr image uploaders, generate a score from 0 to 1, with 1 being "definite child porn" and 0 being "not child porn", and then maybe broadcast events of some kind to relays with this "opinion" about the image/media?
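One possible shape for such an "opinion" event, as a rough sketch only: it borrows the label event kind (1985) and the `L`/`l`/`r` tags from NIP-32, and computes the event id the way NIP-01 describes (SHA-256 over the serialized event array). The namespace string, the `scored` label value, the `score` tag, and the function name `build_score_event` are all assumptions for illustration, not an agreed convention. It also assumes a score in the 0 to 1 range, and it leaves out signing, so the event is not publishable as-is.

```python
import hashlib
import json
import time
from typing import Optional

LABEL_KIND = 1985  # label event kind from NIP-32
NAMESPACE = "com.example.media-score"  # hypothetical label namespace


def build_score_event(pubkey_hex: str, media_url: str, score: float,
                      created_at: Optional[int] = None) -> dict:
    """Build an UNSIGNED event that attaches a classifier score to a media URL."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if created_at is None:
        created_at = int(time.time())
    tags = [
        ["L", NAMESPACE],               # label namespace
        ["l", "scored", NAMESPACE],     # hypothetical label value
        ["r", media_url],               # the media URL being labelled
        ["score", f"{score:.3f}"],      # hypothetical tag carrying the score
    ]
    content = ""
    # NIP-01: id = sha256 of the compact JSON array below, UTF-8 encoded.
    serialized = json.dumps(
        [0, pubkey_hex, created_at, LABEL_KIND, tags, content],
        separators=(",", ":"), ensure_ascii=False,
    )
    event_id = hashlib.sha256(serialized.encode("utf-8")).hexdigest()
    # A "sig" field (Schnorr signature over the id) would still be needed.
    return {"id": event_id, "pubkey": pubkey_hex, "created_at": created_at,
            "kind": LABEL_KIND, "tags": tags, "content": content}
```

Clients or relays that trust the scoring pubkey could then subscribe to these events and decide for themselves what to hide, which keeps the "opinion" separate from any enforcement.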

Maybe someone from the major clients like nostr:npub1yzvxlwp7wawed5vgefwfmugvumtp8c8t0etk3g8sky4n0ndvyxesnxrf8q or #coracle or nostr:npub12vkcxr0luzwp8e673v29eqjhrr7p9vqq8asav85swaepclllj09sylpugg or nostr:npub18m76awca3y37hkvuneavuw6pjj4525fw90necxmadrvjg0sdy6qsngq955 has a suggestion on how this should be done.

One way or another, this has to be done. 99.99% of normies, the first time they see child porn on #nostr ... if they see it once, they'll never come back.

Is there an appropriate NIP to look at? nostr:npub180cvv07tjdrrgpa0j7j7tmnyl2yr6yr7l8j4s3evf6u64th6gkwsyjh6w6 ? nostr:npub1l2vyh47mk2p0qlsku7hg0vn29faehy9hy34ygaclpn66ukqp3afqutajft ? nostr:npub16c0nh3dnadzqpm76uctf5hqhe2lny344zsmpm6feee9p5rdxaa9q586nvr ?