Well, maybe I misunderstand what you're saying 🫂

"They are very robust search engines, nothing more."

"[...] There is nothing impressive with any of these. Clippy 2.0 at best."

Explaining why "I’d argue people are the same in the ways that matter for short term economic productivity" is relevant:

When I read the above quotes, it seems to me like you're saying that AI is unlikely to have much more effect on the economy and wages than an office assistant like Clippy.

But I'm pointing out that many people are currently getting paid mostly for doing exactly what you say this is limited to: "finding, analyzing, and relaying relevant information". And if we can get it to do that for robotic movement planning, then many more people are getting paid for something this machine can do. Machines are likely to get better and outcompete people.

If that's true then it's not "nothing more". 🫂

I am saying nothing about its effects on the economy or how it may affect jobs. I'm strictly speaking of the technology itself, and I fail to be impressed with it. Got one on my embassy server and I really don't see what all the fuss is about. As it currently stands, they are truly nothing more than very robust search engines. What they may or may not be in the future is open for speculation, but it's still just code and silicon, neither of which has 'intelligence'. They can only do what a human programmed them to do.

What model(s) are you using?

I don’t know if it’s important but it seems like it’s important to stress that it’s not a search engine at all. Maybe there are times when they are useful as a search engine, but mostly it’s a thinking engine.

And when I use it as a search engine, I think of it as almost the opposite of robust. I think it behaves more like one person’s opinion than a summary of what humanity is saying about a topic.

Totally agree that the future is speculation, but also projecting that past trends in capability improvements will continue (especially as it gets exponentially more funding and attention) doesn’t seem too crazy.

One thing search engines can’t do is think with you about something that’s never been asked before. These can.

It kinda is a search engine on steroids. It searches data and returns a response. And it can only return results based on data it has access to. Just because the user end gives detailed answers doesn't mean that the backend really does much more than a search engine. It's just a much more complex algorithm. As for what model I was trying, not exactly sure. Something with dolphin in the name maybe. It's one that comes with the GPT package that is available for embassy servers.

OK, it's called FreeGPT, and the specific model is Dolphin-Llama2-7B.

If that’s the only one you have messed around with, maybe that could be part of the overpromising vibe you’ve gotten. Llama2-7B is quite a bit less capable than the full Llama2 model, and that model is quite a bit less capable than ChatGPT4. Token generation on your embassy is probably a lot slower than what I’m used to on OpenAI's servers, though self-hosted is awesome. I want to get some hardware to do that myself someday.

I've seen other people's results from the newest ones before I even touched one myself. Like I said, I'm not shitting on it. Only shitting on the blown way out of proportion claims about em. There's nothing magical (for lack of a better term) about em. They do what they do, but there is nothing that can reasonably or rationally be called intelligent about em. When one answers a long sought after question that no human has been able to, then maybe I'll change my tune, but as it currently stands, it's just searching data and returning the most likely desired results.

Oh, as for the self hosting, embassy can run on any PC with the proper specs or on a RasPi, so if ya got an old PC or laptop laying around, it may work.

One thing to keep in mind: tech R&D these days is mostly lofty promises and propaganda. Gotta keep funding flowing in, so all these claims are made that this or that is right around the corner. Think along the lines of Elon promising every year that full autonomous driving will be ready 'next year'. It's all for fundraising.

That may be true, but if you compare text generation from today to 4 years ago, it’s exponentially better today. Self driving isn’t really like that, and even it has improved a lot in the last 10 years.

I can agree with that. All I'm really saying is people tend to greatly exaggerate and/or overestimate what LLMs are and what they do. I'm not really shitting on the technology in and of itself. It's good at what it does. But it's just hyped up beyond what it is.

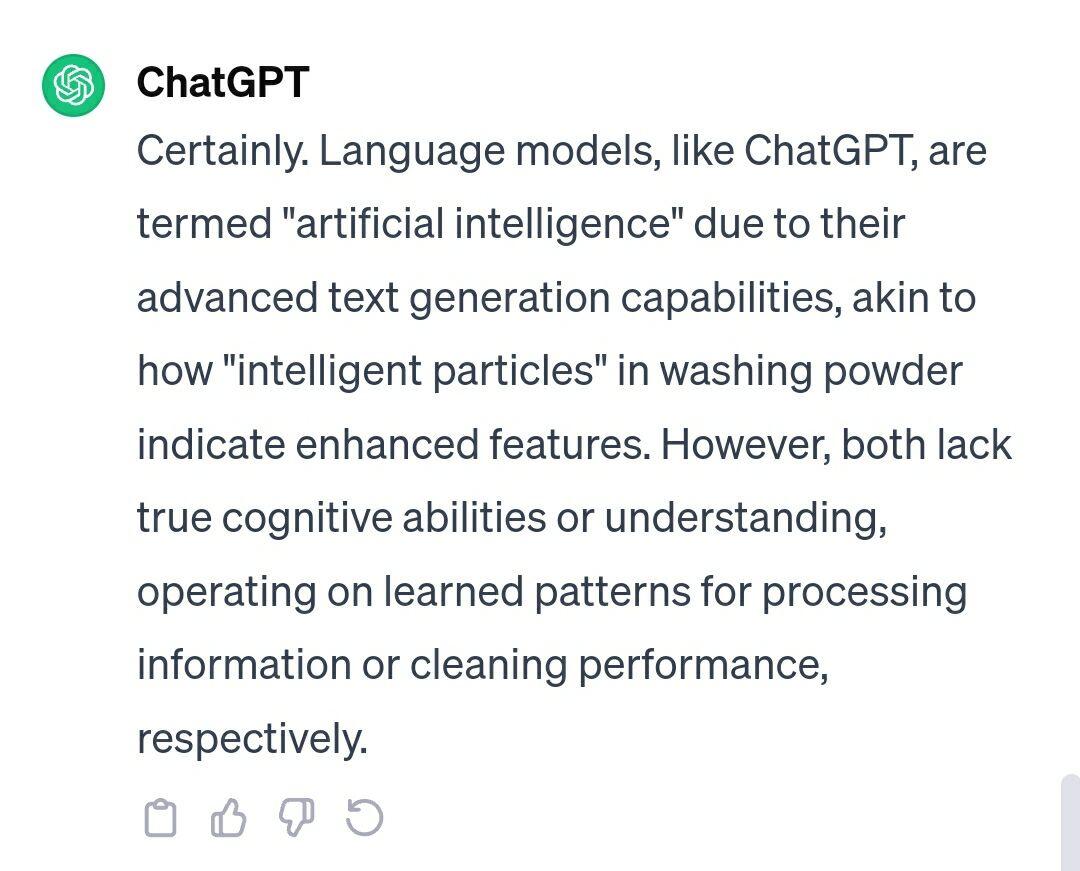
